backup job failed with err -11 on 2 of 6 VMs

- Thread starter Tealk

Should I also try the setting for the CTs?

This setting does not apply to containers, unfortunately. It's still not clear what the root cause of the issue is, or whether it's actually two different issues. I was able to reproduce the issue for VMs, but only on an upgraded node. A fresh PVE 7 install running the same kernel/QEMU and, AFAICT, the same LVM configuration does not exhibit the problem. But having a workaround for VMs is at least a first step.

Damn, and I just changed 3 nodes to CTs.

But thanks for the quick help <3

//Edit

The backup also finished successfully.

Thanks for reporting, both of you! @Zelario, could you also share the storage configuration and the VM configuration for the affected VMs? Is there any LVM involved?

The backup did indeed work after adding "aio=threads". Here are my configs:

/etc/pve/storage.cfg

Code:

dir: local
	path /var/lib/vz
	content backup,iso,vztmpl

lvmthin: local-lvm
	thinpool data
	vgname pve
	content rootdir,images

lvm: hdd
	vgname hdd
	content rootdir,images
	nodes pve
	shared 0

dir: usb1
	path /media/usb-drv-1/
	content images,backup
	prune-backups keep-last=10
	shared 0

cifs: NAS1
	path /mnt/pve/NAS1
	server 192.168.2.15
	share ProxMox
	content rootdir,iso,backup,images
	prune-backups keep-last=2
	username pmox

VM config

Code:

agent: 1
bios: ovmf
boot: order=sata0;ide2;net0
cores: 2
efidisk0: hdd:vm-122-disk-1,size=4M
ide2: none,media=cdrom
machine: q35
memory: 4096
name: WINSRV2019
net0: e1000=DE:3A:41:20:9F:F0,bridge=vmbr0
numa: 0
onboot: 1
ostype: win10
sata0: hdd:vm-122-disk-0,size=100G,aio=threads
scsihw: virtio-scsi-pci
smbios1: uuid=672b1b6c-cf3b-468b-8576-7538b27497b0
sockets: 1
vmgenid: 74b0c341-2da5-4440-b277-fcfee9762b70

I have the same err -11 error after upgrading to PVE 7.0. Not on all VM backup jobs, but on a few of them, from the same SSD to the same PBS storage. It works again after adding aio=threads. Here is the log from the PBS side before and after adding aio=threads.

Before, with err -11:

Code:

ProxmoxBackup Server 2.0-4
2021-07-21T10:20:09-04:00: starting new backup on datastore 'pbs-backup': "vm/100/2021-07-21T14:20:06Z"
2021-07-21T10:20:09-04:00: download 'index.json.blob' from previous backup.
2021-07-21T10:20:09-04:00: register chunks in 'drive-scsi0.img.fidx' from previous backup.
2021-07-21T10:20:09-04:00: download 'drive-scsi0.img.fidx' from previous backup.
2021-07-21T10:20:09-04:00: created new fixed index 1 ("vm/100/2021-07-21T14:20:06Z/drive-scsi0.img.fidx")
2021-07-21T10:20:09-04:00: add blob "/mnt/pbs_backup/vm/100/2021-07-21T14:20:06Z/qemu-server.conf.blob" (342 bytes, comp: 342)
2021-07-21T10:20:10-04:00: backup failed: connection error: bytes remaining on stream
2021-07-21T10:20:10-04:00: removing failed backup
2021-07-21T10:20:10-04:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection: broken pipe
2021-07-21T10:20:10-04:00: TASK ERROR: connection error: bytes remaining on stream
2021-07-21T10:20:10-04:00: POST /fixed_chunk: 400 Bad Request: backup already marked as finished.

After, working:

Code:

ProxmoxBackup Server 2.0-4
2021-07-21T10:33:10-04:00: starting new backup on datastore 'pbs-backup': "vm/100/2021-07-21T14:33:06Z"
2021-07-21T10:33:10-04:00: download 'index.json.blob' from previous backup.
2021-07-21T10:33:10-04:00: register chunks in 'drive-scsi0.img.fidx' from previous backup.
2021-07-21T10:33:10-04:00: download 'drive-scsi0.img.fidx' from previous backup.
2021-07-21T10:33:10-04:00: created new fixed index 1 ("vm/100/2021-07-21T14:33:06Z/drive-scsi0.img.fidx")
2021-07-21T10:33:10-04:00: add blob "/mnt/pbs_backup/vm/100/2021-07-21T14:33:06Z/qemu-server.conf.blob" (350 bytes, comp: 350)
2021-07-21T10:34:01-04:00: Upload statistics for 'drive-scsi0.img.fidx'
2021-07-21T10:34:01-04:00: UUID: 141f2be7d7bf4850a666d137e43a7d36
2021-07-21T10:34:01-04:00: Checksum: cd36dcabac0773ad305e37d972fc02487ed3eb37c24bafcf94a07cb0774b0974
2021-07-21T10:34:01-04:00: Size: 21474836480
2021-07-21T10:34:01-04:00: Chunk count: 5120
2021-07-21T10:34:01-04:00: Upload size: 3787456512 (17%)
2021-07-21T10:34:01-04:00: Duplicates: 4217+24 (82%)
2021-07-21T10:34:01-04:00: Compression: 36%
2021-07-21T10:34:01-04:00: successfully closed fixed index 1
2021-07-21T10:34:01-04:00: add blob "/mnt/pbs_backup/vm/100/2021-07-21T14:33:06Z/index.json.blob" (327 bytes, comp: 327)
2021-07-21T10:34:01-04:00: successfully finished backup
2021-07-21T10:34:01-04:00: backup finished successfully
2021-07-21T10:34:01-04:00: TASK OK
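The upload statistics in the successful run can be cross-checked with simple arithmetic. The image is exactly 20 GiB (21474836480 bytes), and dividing it by the 5120 chunks gives 4 MiB per chunk; the upload size is exactly 903 such chunks, i.e. 5120 - 4217. Both percentages match the log if they are truncated rather than rounded (17.6% is shown as 17%). The Python sketch below only redoes this arithmetic on the logged numbers; it does not describe how PBS computes the values internally, and summing "4217+24" for the duplicate share is an assumption that happens to reproduce the 82%.

```python
MIB = 1024 * 1024


def upload_stats(size: int, chunk_count: int, upload_size: int, duplicates: int):
    """Recompute derived values from a PBS 'Upload statistics' block."""
    chunk_size = size // chunk_count             # fixed chunk size in bytes
    uploaded_chunks = upload_size // chunk_size  # upload size expressed in chunks
    upload_pct = upload_size * 100 // size       # truncated, matching the log
    duplicate_pct = duplicates * 100 // chunk_count
    return chunk_size, uploaded_chunks, upload_pct, duplicate_pct


if __name__ == "__main__":
    # Numbers copied from the successful log; "Duplicates: 4217+24" is summed.
    chunk_size, uploaded, up_pct, dup_pct = upload_stats(
        size=21474836480, chunk_count=5120, upload_size=3787456512, duplicates=4217 + 24
    )
    print(chunk_size // MIB, uploaded, up_pct, dup_pct)  # 4 903 17 82
```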

Yes, the option aio=threads works great; so far I have had no more problems with VMs.

I hope there is a solution for LXCs soon too.
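For anyone applying the same workaround: in the VM config posted above, aio=threads is just one more comma-separated option on the affected disk line (sata0: hdd:vm-122-disk-0,size=100G,aio=threads). The Python sketch below shows that edit on a drive string. The helper name is hypothetical, it assumes the first element is the volume ID, and it only returns a new string rather than touching /etc/pve. Applying the result would typically be done with qm set, e.g. qm set 122 --sata0 hdd:vm-122-disk-0,size=100G,aio=threads (check man qm for your version).

```python
def with_aio_threads(drive: str) -> str:
    """Return a drive option string with aio=threads set.

    Hypothetical helper: assumes the string starts with the volume ID
    (e.g. "hdd:vm-122-disk-0") followed by key=value options.
    Any existing aio=... option is replaced instead of duplicated.
    """
    volume, *options = drive.split(",")
    kept = [opt for opt in options if not opt.startswith("aio=")]
    return ",".join([volume, *kept, "aio=threads"])


if __name__ == "__main__":
    print(with_aio_threads("hdd:vm-122-disk-0,size=100G"))
    # hdd:vm-122-disk-0,size=100G,aio=threads
```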

Is it just a coincidence that the options are so similar?

View attachment 27938

Both have to do with IO and threads, but yes, it's a coincidence.

Could you provide the configurations of some affected containers? Which template(s) did you use? Could you try using a different tmpdir (can be set via the CLI options for the vzdump command) if you have some other directory-based storage attached?

With which command do I get the config output? qm config xxx does not seem to work.

As for the template: the Debian 10 one provided by Proxmox.

I just don't understand: should I only change the temp dir when I have multiple storages included?

And how does that work? In the wiki I can't find such an option for the command:

https://pve.proxmox.com/wiki/Command_line_tools#vzdump

For containers, use pct config <ID>.

I mean if you have a directory that's not on the same disk/filesystem as your root file system (or, to be precise, as /var/tmp, which is used by default). Using a different directory on the same disk/filesystem probably behaves the same, but you can still try if you want to.

See man vzdump. The vzdump command is printed right at the top of the backup log:

View attachment 28049

So the easiest way is to copy that, add --tmpdir </some/path> at the end, and execute it in the CLI.
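Following that suggestion, a small Python sketch of both steps: appending --tmpdir to the command line copied from the backup log, and checking whether a candidate directory is on a different file system than the default /var/tmp. The helper names are hypothetical, and comparing st_dev is only a rough heuristic (bind mounts and some file systems, such as btrfs subvolumes, can report different device IDs while sharing a disk). The resulting string still has to be run on the PVE host.

```python
import os
import shlex


def add_tmpdir(logged_command: str, tmpdir: str) -> str:
    """Append --tmpdir to a vzdump command line copied from a backup log."""
    args = shlex.split(logged_command)
    if not args or args[0] != "vzdump":
        raise ValueError("expected a vzdump command line")
    if "--tmpdir" in args:
        raise ValueError("the command already sets --tmpdir")
    return shlex.join(args + ["--tmpdir", tmpdir])


def same_filesystem(path_a: str, path_b: str) -> bool:
    """True if two existing paths report the same device ID (st_dev)."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


if __name__ == "__main__":
    logged = "vzdump 202 --quiet 1 --compress zstd --mode snapshot --storage ProxBack"
    print(add_tmpdir(logged, "/home/testtemp"))
    # On a host with a single root file system this is expected to be True,
    # i.e. a different directory would likely behave the same.
    print(same_filesystem("/var/tmp", "/"))
```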

Code:

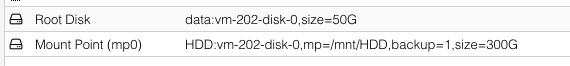

pct config 202
arch: amd64
cores: 8
hostname: WEB
memory: 16384
mp0: HDD:vm-202-disk-0,mp=/mnt/HDD,backup=1,size=300G
net0: name=eth0,bridge=vmbr0,firewall=1,gw=168.119.32.115,hwaddr=56:76:EF:9B:20:C2,ip=5.9.187.34/32,type=veth
ostype: debian
rootfs: data:vm-202-disk-0,size=50G
swap: 16384
unprivileged: 1

Code:

pct config 203
arch: amd64
cores: 6
hostname: Fediverse
memory: 16384
mp0: HDD:vm-203-disk-0,mp=/home/mastodon/live/public/system,backup=1,size=100G
net0: name=eth0,bridge=vmbr0,firewall=1,gw=168.119.32.115,hwaddr=2A:16:B9:F4:65:2B,ip=5.9.187.35/32,type=veth
ostype: debian
rootfs: data:vm-203-disk-0,size=50G
swap: 16384
unprivileged: 1

No, I only have a file system that is on the root system.

It's still the same with another path:

Code:

vzdump 202 --quiet 1 --compress zstd --tmpdir /home/testtemp --mode snapshot --mailnotification failure --mailto my@mail --storage ProxBack
202: 2021-07-26 10:41:24 INFO: Starting Backup of VM 202 (lxc)
202: 2021-07-26 10:41:24 INFO: status = running
202: 2021-07-26 10:41:24 INFO: CT Name: WEB
202: 2021-07-26 10:41:24 INFO: including mount point rootfs ('/') in backup
202: 2021-07-26 10:41:24 INFO: including mount point mp0 ('/mnt/HDD') in backup
202: 2021-07-26 10:41:24 INFO: mode failure - some volumes do not support snapshots
202: 2021-07-26 10:41:24 INFO: trying 'suspend' mode instead
202: 2021-07-26 10:41:24 INFO: backup mode: suspend
202: 2021-07-26 10:41:24 INFO: ionice priority: 7
202: 2021-07-26 10:41:24 INFO: CT Name: WEB
202: 2021-07-26 10:41:24 INFO: including mount point rootfs ('/') in backup
202: 2021-07-26 10:41:24 INFO: including mount point mp0 ('/mnt/HDD') in backup
202: 2021-07-26 10:41:24 INFO: starting first sync /proc/1002299/root/ to /home/testtemp/vzdumptmp15631_202/
202: 2021-07-26 10:42:14 ERROR: Backup of VM 202 failed - command 'rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative '--exclude=/tmp/?*' '--exclude=/var/tmp/?*' '--exclude=/var/run/?*.pid' /proc/1002299/root//./ /proc/1002299/root//./mnt/HDD /home/testtemp/vzdumptmp15631_202/' failed: exit code 11

I still wasn't able to reproduce the issue. Would you mind running

Code:

strace 2> /tmp/vzdump_lxc_trace.txt vzdump 202 --quiet 1 --compress zstd --mode snapshot --mailnotification failure --mailto my@mail --storage ProxBack

and sharing the file it produces, i.e. /tmp/vzdump_lxc_trace.txt? You might need to install strace first.

FYI: I created the containers after I updated to v7.

Here is the file:

Attachments
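The two failures in this thread carry similar-looking numbers that come from different schemes. Assuming the VM backup's err -11 is a negated Linux errno value (a common convention in QEMU and kernel code, but an assumption here), it decodes to EAGAIN, "Resource temporarily unavailable". The container backup's exit code 11 instead comes from rsync's own table of exit values, where 11 means "Error in file I/O"; that is consistent with a problem writing to the target, but does not identify the cause by itself. The Python sketch below decodes both; the function names are hypothetical, and the rsync table is deliberately only a subset.

```python
import errno
import os

# A subset of rsync's documented exit values (see "EXIT VALUES" in man rsync).
RSYNC_EXIT_VALUES = {
    10: "Error in socket I/O",
    11: "Error in file I/O",
    12: "Error in rsync protocol data stream",
    23: "Partial transfer due to error",
    24: "Partial transfer due to vanished source files",
}


def describe_negative_errno(code: int) -> str:
    """Decode a negative return value, assuming it is -errno."""
    num = -code
    name = errno.errorcode.get(num, "unknown errno")
    return f"{name}: {os.strerror(num)}"


def describe_rsync_exit(code: int) -> str:
    """Look up an rsync exit code in the subset above."""
    return RSYNC_EXIT_VALUES.get(code, "not in this subset, see man rsync")


if __name__ == "__main__":
    # On Linux errno 11 is EAGAIN (EWOULDBLOCK is an alias for the same value).
    print(describe_negative_errno(-11))
    print(describe_rsync_exit(11))  # Error in file I/O
```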

Assuming you did not restart the container (otherwise run lxc-info -n 202 -p and use the PID it outputs instead of 1002299), does running

Code:

rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative /proc/1002299/root//./mnt/HDD /tmp/rsync_test

work?

If it also fails, please provide the trace like last time:

Code:

strace 2> /tmp/rsync_strace.txt rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative /proc/1002299/root//./mnt/HDD /tmp/rsync_test

I can't do that; I don't have enough space on the root system disk.

But basically the same command is used by the vzdump backup itself, only with the container's root file system included too, not just the mount point like here.

EDIT: and /var/tmp/vzdumptmpXYZ is used as the target. So could it be that you simply run out of space during the backup?
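To check that suspicion, one can compare what suspend mode would have to copy with the free space in the temporary directory. The Python sketch below is only a rough, pessimistic estimate under stated assumptions: it parses pct config output, counts rootfs plus every mpN with backup=1 (matching the "including mount point" lines in the log), and uses the configured size= values, which overstate the data rsync actually copies. The function names are hypothetical, and /var/tmp as the default target follows the explanation in this thread.

```python
import re
import shutil

# Suffixes used in "size=" values such as "50G" or "300G" (assumed K/M/G/T).
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def configured_backup_bytes(pct_config: str) -> int:
    """Sum the configured sizes of all volumes the backup would include.

    rootfs is always included; mpN mount points only with backup=1.
    The result is an upper bound: rsync only copies the data actually used.
    """
    total = 0
    for line in pct_config.splitlines():
        key, _, value = line.partition(": ")
        if key != "rootfs" and not re.fullmatch(r"mp\d+", key):
            continue
        options = value.split(",")
        if key != "rootfs" and "backup=1" not in options:
            continue
        for opt in options:
            match = re.fullmatch(r"size=(\d+)([KMGT])", opt)
            if match:
                total += int(match.group(1)) * UNITS[match.group(2)]
    return total


def fits_in(pct_config: str, tmpdir: str = "/var/tmp") -> bool:
    """Rough check: is there room in tmpdir for the configured volume sizes?"""
    return configured_backup_bytes(pct_config) <= shutil.disk_usage(tmpdir).free


if __name__ == "__main__":
    ct_202 = (
        "mp0: HDD:vm-202-disk-0,mp=/mnt/HDD,backup=1,size=300G\n"
        "rootfs: data:vm-202-disk-0,size=50G\n"
        "swap: 16384"
    )
    print(configured_backup_bytes(ct_202) // UNITS["G"])  # 350
```

With only 7GB free on the host, even the used part of the mount point (over 100GB, per the reply that follows in the thread) would not fit, whichever way the estimate is taken.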

So the files for the backup are first written to the host system and then to the backup server?

The HDD to be backed up has over 100GB, and only 7GB are free on the host system, so I can't do the rsync, at least not locally.

Yes, for suspend mode everything needs to be copied to a temporary directory first for consistency. If not all mount points/rootfs support snapshots, then suspend mode is used as a fallback, even if you set snapshot mode.

OK, then I'll set the backup to stop mode and report the result, but I run the backup at night because of the availability of the services.
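The fallback explained above can be summarized as a small decision function. This is an illustrative Python sketch, not vzdump's actual code: which volumes lack snapshot support is passed in as an input assumption, since the log only says that "some volumes do not support snapshots", and treating suspend as the only mode that copies to the temporary directory reflects what was said in this thread rather than a verified list.

```python
from typing import Mapping


def effective_mode(requested: str, snapshot_support: Mapping[str, bool]) -> str:
    """Mode actually used for a container backup, per the fallback in the thread.

    snapshot_support maps each included volume (rootfs, mp0, ...) to whether
    its storage can take a snapshot; that mapping is an input assumption here.
    """
    if requested == "snapshot" and not all(snapshot_support.values()):
        return "suspend"  # log: "mode failure - some volumes do not support snapshots"
    return requested


def copies_to_tmpdir(mode: str) -> bool:
    # Stated in the thread for suspend mode only; other modes assumed not to.
    return mode == "suspend"


if __name__ == "__main__":
    # Hypothetical: rootfs can be snapshotted, the mp0 volume cannot.
    mode = effective_mode("snapshot", {"rootfs": True, "mp0": False})
    print(mode, copies_to_tmpdir(mode))  # suspend True
    print(effective_mode("stop", {"rootfs": True, "mp0": False}))  # stop
```

Under the assumption encoded in copies_to_tmpdir, stop mode would avoid the temporary copy; the trade-off is that the container is down while the backup runs, which is why scheduling it at night makes sense.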
