Hi, a blk_pwrite failed error usually has one of two causes: either the target disk is full, or the target disk is defective.

Backup has recently stopped working

- Thread starter markyman



Good morning,

the new restore fails as well:

Does anyone have another idea?

restore vma archive: zstd -q -d -c /mnt/pve/BackupNFSServer/dump/vzdump-qemu-105-2024_09_27-22_04_10.vma.zst | vma extract -v -r /var/tmp/vzdumptmp2875559.fifo - /var/tmp/vzdumptmp2875559

CFG: size: 571 name: qemu-server.conf

DEV: dev_id=1 size: 42949672960 devname: drive-scsi0

DEV: dev_id=2 size: 1000204886016 devname: drive-scsi1

CTIME: Fri Sep 27 22:05:15 2024

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "vm-105-disk-0" created.

WARNING: Sum of all thin volume sizes (<1.86 TiB) exceeds the size of thin pool pve/data and the size of whole volume group (<1.82 TiB).

new volume ID is 'local-lvm:vm-105-disk-0'

Rounding up size to full physical extent <931.52 GiB

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "vm-105-disk-1" created.

WARNING: Sum of all thin volume sizes (<2.77 TiB) exceeds the size of thin pool pve/data and the size of whole volume group (<1.82 TiB).

new volume ID is 'local-lvm:vm-105-disk-1'

map 'drive-scsi0' to '/dev/pve/vm-105-disk-0' (write zeros = 0)

map 'drive-scsi1' to '/dev/pve/vm-105-disk-1' (write zeros = 0)

progress 1% (read 10431561728 bytes, duration 86 sec)

progress 2% (read 20863123456 bytes, duration 178 sec)

progress 3% (read 31294685184 bytes, duration 268 sec)

progress 4% (read 41726181376 bytes, duration 362 sec)

progress 5% (read 52157743104 bytes, duration 453 sec)

progress 6% (read 62589304832 bytes, duration 541 sec)

progress 7% (read 73020866560 bytes, duration 630 sec)

progress 8% (read 83452362752 bytes, duration 718 sec)

progress 9% (read 93883924480 bytes, duration 806 sec)

progress 10% (read 104315486208 bytes, duration 894 sec)

progress 11% (read 114747047936 bytes, duration 982 sec)

progress 12% (read 125178544128 bytes, duration 1071 sec)

progress 13% (read 135610105856 bytes, duration 1159 sec)

progress 14% (read 146041667584 bytes, duration 1247 sec)

progress 15% (read 156473229312 bytes, duration 1335 sec)

progress 16% (read 166904725504 bytes, duration 1426 sec)

progress 17% (read 177336287232 bytes, duration 1514 sec)

progress 18% (read 187767848960 bytes, duration 1603 sec)

progress 19% (read 198199410688 bytes, duration 1691 sec)

progress 20% (read 208630906880 bytes, duration 1779 sec)

progress 21% (read 219062468608 bytes, duration 1868 sec)

progress 22% (read 229494030336 bytes, duration 1956 sec)

progress 23% (read 239925592064 bytes, duration 2044 sec)

progress 24% (read 250357088256 bytes, duration 2141 sec)

progress 25% (read 260788649984 bytes, duration 2229 sec)

progress 26% (read 271220211712 bytes, duration 2318 sec)

progress 27% (read 281651773440 bytes, duration 2406 sec)

progress 28% (read 292083269632 bytes, duration 2494 sec)

progress 29% (read 302514831360 bytes, duration 2582 sec)

progress 30% (read 312946393088 bytes, duration 2670 sec)

progress 31% (read 323377954816 bytes, duration 2758 sec)

progress 32% (read 333809451008 bytes, duration 2846 sec)

progress 33% (read 344241012736 bytes, duration 2934 sec)

progress 34% (read 354672574464 bytes, duration 3022 sec)

progress 35% (read 365104136192 bytes, duration 3110 sec)

progress 36% (read 375535632384 bytes, duration 3198 sec)

progress 37% (read 385967194112 bytes, duration 3286 sec)

progress 38% (read 396398755840 bytes, duration 3374 sec)

progress 39% (read 406830317568 bytes, duration 3462 sec)

progress 40% (read 417261813760 bytes, duration 3550 sec)

progress 41% (read 427693375488 bytes, duration 3639 sec)

progress 42% (read 438124937216 bytes, duration 3747 sec)

progress 43% (read 448556498944 bytes, duration 3835 sec)

progress 44% (read 458987995136 bytes, duration 3923 sec)

progress 45% (read 469419556864 bytes, duration 4012 sec)

progress 46% (read 479851118592 bytes, duration 4100 sec)

progress 47% (read 490282680320 bytes, duration 4188 sec)

progress 48% (read 500714176512 bytes, duration 4269 sec)

progress 49% (read 511145738240 bytes, duration 4357 sec)

progress 50% (read 521577299968 bytes, duration 4445 sec)

progress 51% (read 532008861696 bytes, duration 4533 sec)

progress 52% (read 542440357888 bytes, duration 4621 sec)

progress 53% (read 552871919616 bytes, duration 4709 sec)

progress 54% (read 563303481344 bytes, duration 4797 sec)

progress 55% (read 573735043072 bytes, duration 4885 sec)

progress 56% (read 584166539264 bytes, duration 4976 sec)

progress 57% (read 594598100992 bytes, duration 5064 sec)

progress 58% (read 605029662720 bytes, duration 5152 sec)

progress 59% (read 615461224448 bytes, duration 5240 sec)

progress 60% (read 625892720640 bytes, duration 5327 sec)

progress 61% (read 636324282368 bytes, duration 5419 sec)

progress 62% (read 646755844096 bytes, duration 5507 sec)

progress 63% (read 657187405824 bytes, duration 5595 sec)

progress 64% (read 667618902016 bytes, duration 5683 sec)

progress 65% (read 678050463744 bytes, duration 5771 sec)

progress 66% (read 688482025472 bytes, duration 5859 sec)

progress 67% (read 698913587200 bytes, duration 5947 sec)

progress 68% (read 709345083392 bytes, duration 6035 sec)

progress 69% (read 719776645120 bytes, duration 6122 sec)

progress 70% (read 730208206848 bytes, duration 6211 sec)

progress 71% (read 740639768576 bytes, duration 6299 sec)

progress 72% (read 751071264768 bytes, duration 6386 sec)

progress 73% (read 761502826496 bytes, duration 6473 sec)

progress 74% (read 771934388224 bytes, duration 6561 sec)

progress 75% (read 782365949952 bytes, duration 6650 sec)

progress 76% (read 792797446144 bytes, duration 6738 sec)

progress 77% (read 803229007872 bytes, duration 6826 sec)

progress 78% (read 813660569600 bytes, duration 6914 sec)

progress 79% (read 824092131328 bytes, duration 7003 sec)

progress 80% (read 834523627520 bytes, duration 7091 sec)

progress 81% (read 844955189248 bytes, duration 7180 sec)

progress 82% (read 855386750976 bytes, duration 7326 sec)

progress 83% (read 865818312704 bytes, duration 7414 sec)

progress 84% (read 876249808896 bytes, duration 7503 sec)

progress 85% (read 886681370624 bytes, duration 7591 sec)

progress 86% (read 897112932352 bytes, duration 7679 sec)

progress 87% (read 907544494080 bytes, duration 7768 sec)

progress 88% (read 917975990272 bytes, duration 7856 sec)

progress 89% (read 928407552000 bytes, duration 7945 sec)

progress 90% (read 938839113728 bytes, duration 8033 sec)

progress 91% (read 949270675456 bytes, duration 8122 sec)

progress 92% (read 959702171648 bytes, duration 8211 sec)

progress 93% (read 970133733376 bytes, duration 8300 sec)

progress 94% (read 980565295104 bytes, duration 8389 sec)

progress 95% (read 990996856832 bytes, duration 8477 sec)

progress 96% (read 1001428353024 bytes, duration 8562 sec)

progress 97% (read 1011859914752 bytes, duration 8586 sec)

progress 98% (read 1022291476480 bytes, duration 8617 sec)

progress 99% (read 1032723038208 bytes, duration 8645 sec)

progress 100% (read 1043154534400 bytes, duration 8646 sec)

vma: restore failed - vma blk_flush drive-scsi0 failed

/bin/bash: line 1: 2875569 Done zstd -q -d -c /mnt/pve/BackupNFSServer/dump/vzdump-qemu-105-2024_09_27-22_04_10.vma.zst

2875570 Trace/breakpoint trap | vma extract -v -r /var/tmp/vzdumptmp2875559.fifo - /var/tmp/vzdumptmp2875559

Logical volume "vm-105-disk-0" successfully removed.

temporary volume 'local-lvm:vm-105-disk-0' sucessfuly removed

Logical volume "vm-105-disk-1" successfully removed.

temporary volume 'local-lvm:vm-105-disk-1' sucessfuly removed

no lock found trying to remove 'create' lock

error before or during data restore, some or all disks were not completely restored. VM 105 state is NOT cleaned up.

TASK ERROR: command 'set -o pipefail && zstd -q -d -c /mnt/pve/BackupNFSServer/dump/vzdump-qemu-105-2024_09_27-22_04_10.vma.zst | vma extract -v -r /var/tmp/vzdumptmp2875559.fifo - /var/tmp/vzdumptmp2875559' failed: exit code 133
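To get a feel for how much space this restore needs on the target, the DEV lines of the log above can be summed up. The following is a minimal Python sketch; the helper names `parse_dev_sizes` and `to_gib` are made up for illustration and are not part of any Proxmox tool.

```python
import re

# Matches log lines such as:
#   DEV: dev_id=1 size: 42949672960 devname: drive-scsi0
DEV_LINE = re.compile(r"DEV: dev_id=(\d+) size: (\d+) devname: (\S+)")


def parse_dev_sizes(log_text):
    """Return {devname: size_in_bytes} for every DEV line in a vma restore log."""
    return {m.group(3): int(m.group(2)) for m in DEV_LINE.finditer(log_text)}


def to_gib(size_bytes):
    """Convert bytes to GiB (2**30 bytes)."""
    return size_bytes / 2**30


# The two DEV lines copied from the restore log in this thread.
log_excerpt = """\
DEV: dev_id=1 size: 42949672960 devname: drive-scsi0
DEV: dev_id=2 size: 1000204886016 devname: drive-scsi1
"""

sizes = parse_dev_sizes(log_excerpt)
total = sum(sizes.values())

for name, size in sizes.items():
    print(f"{name}: {to_gib(size):.2f} GiB")
print(f"total: {to_gib(total):.2f} GiB")
```

The total is the provisioned size that both restored volumes claim in the thin pool pve/data. The LVM warnings in the log already say that the sum of all thin volumes exceeds the pool, which fits the "target disk full" explanation.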

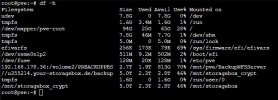

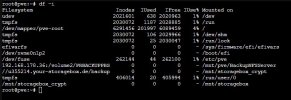

OK, I have now looked around a bit and noticed something:

The disk does seem to be full, doesn't it?

Then why does this overview list, for example, the LXC of my Nextcloud twice??

VMs are duplicated as well:


Expand your data storage.


Check the LXC and VM configs to see which resources are actually assigned.

Build a matrix for this: LXC and VM names on the X axis, files on the Y axis, and mark what is used (referenced) where.

Whatever is still unmarked in the Y-axis list afterwards can be deleted.

The space also has to be available under /var/tmp/; have you checked that already?

Are there still logs lying around that could be deleted?

Example:

journalctl --vacuum-size 64M

Good morning, at first glance yes; you have already done part of the analysis. I always prefer to look via the console at the actual config files under /etc/pve/..., where they are kept separately for the VMs and the LXCs. Something else that looks broken: there is a config for VM 105 and another file for a VM 106. I would clean that up as well.

In practice, though, the simplest protection against downtime is ZFS RAID 1, i.e. a ZFS mirror. That requires two disks; in your case the Crucial MX500 1 TB works well for it. I run the same setup on one of my Proxmox VE hosts. Is there any plan to move away from a mini PC and build a micro-ATX PC with 6-10 SATA ports and possibly two M.2 NVMe slots? For example an AM4 B550 mainboard such as the ASRock B550M Pro4 with a Ryzen 5 5600G.

https://www.alternate.de/AMD/Ryzen-5-5600G-Prozessor/html/product/100096774

https://www.alternate.de/ASRock/B550M-Pro4-Mainboard/html/product/1648303

DDR4 memory doesn't cost the earth these days either, so you can simply install 32 or 64 GB right away.


Hi,

thanks for your reply.

So I went and looked at the output of your commands. As already established, VM 100 exists twice, for whatever reason. As a test I had tried restoring the PBS VM to my NAS, which also failed. With all this information I found out that my NAS was short on space as well. For whatever reason, various VMs were already sitting there. I deleted them, i.e. cleaned up the NAS, and now the restore has at least worked on my NAS and the PBS VM 105 is running again. On the root disk of my PVE I still have maybe 900 GB free, but the PBS backup is already 990 GB (and possibly still compressed), which would not fit. The problem is that I backed up VM 105 with all its data, including the data disk. I will now take the data disk out of the backup and create a new backup; that backup will then be small, and restoring the PBS should no longer be a problem.
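The space comparison above can be written down as a small check. This is only a sketch in Python under stated assumptions: the device sizes are the DEV values from the restore log, "maybe 900 GB" is taken as 900 * 10**9 bytes, and drive-scsi1 is assumed to be the data disk because it is the roughly 1 TB device. The function names are hypothetical.

```python
def restore_size(dev_sizes, excluded=()):
    """Sum the sizes of all devices that are not excluded from the backup."""
    return sum(size for name, size in dev_sizes.items() if name not in excluded)


def fits(required_bytes, free_bytes):
    """True if the restore target has at least the required space."""
    return required_bytes <= free_bytes


# Sizes in bytes, taken from the DEV lines of the restore log.
DEV_SIZES = {
    "drive-scsi0": 42949672960,
    "drive-scsi1": 1000204886016,
}

# Assumption: "maybe 900 GB" free, read as decimal gigabytes.
FREE_BYTES = 900 * 10**9

full = restore_size(DEV_SIZES)
# Assumption: drive-scsi1 is the data disk that will be left out.
without_data = restore_size(DEV_SIZES, excluded=("drive-scsi1",))

print("full backup fits:", fits(full, FREE_BYTES))
print("without data disk fits:", fits(without_data, FREE_BYTES))
```

In Proxmox VE a disk is left out of vzdump backups by setting `backup=0` on that drive (the "Backup" checkbox in the disk's edit dialog), which is the step described above.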

No, I don't want to buy another PC or server; for my use case the Dell mini PC is enough. My Nextcloud currently holds 700 GB, so there is still plenty of room, and as far as failures go my setup should do: 1x backup on the internal SSD, 1x external, 1x NAS, and the NAS itself pushes a backup to Hetzner storage at intervals. That should be enough.

Where do you see the files? (there is a config for VM 105 and another file for a VM 106. I would clean that up as well.)


Good morning, I have to head out...

The *.conf files of the LXCs and VMs are located under /etc/pve/lxc/ and /etc/pve/qemu-server/.

That is where I work almost exclusively, since there are things that one (I) had better administer directly.

That is also what the matrix of LXCs/VMs and the created data referred to.

It is also worth working on the console. I deliberately did not spell out the subdirectories.
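The matrix of guests and volumes described in this thread can be sketched in Python as follows. This is an illustration, not a Proxmox tool: all function names are hypothetical, the regular expression assumes volume names of the form vm-105-disk-0 (plus the subvol- and base- prefixes), and the sample config lines with their sizes are invented for the example.

```python
import re
from pathlib import Path

# Assumption: volumes are named like "vm-105-disk-0", "subvol-101-disk-0"
# or "base-9000-disk-0". Other naming schemes are not detected.
VOLUME = re.compile(r"\b(?:vm|subvol|base)-\d+-disk-\d+\b")


def referenced_volumes(conf_text):
    """Volume names mentioned anywhere in one guest config."""
    return set(VOLUME.findall(conf_text))


def build_matrix(configs):
    """Map each volume name to the set of config files that reference it."""
    matrix = {}
    for conf_name, text in configs.items():
        for volume in referenced_volumes(text):
            matrix.setdefault(volume, set()).add(conf_name)
    return matrix


def unreferenced(existing_volumes, matrix):
    """Volumes that exist on storage but appear in no config."""
    return sorted(set(existing_volumes) - set(matrix))


def load_configs(root="/etc/pve"):
    """Read all guest configs from the two directories named in the thread."""
    configs = {}
    for sub in ("lxc", "qemu-server"):
        for path in sorted(Path(root, sub).glob("*.conf")):
            configs[f"{sub}/{path.name}"] = path.read_text()
    return configs


# Hypothetical sample data; on a real host load_configs() would be used instead.
sample_configs = {
    "qemu-server/105.conf": "scsi0: local-lvm:vm-105-disk-0,size=40G\n",
    "lxc/101.conf": "rootfs: local-lvm:vm-101-disk-0,size=8G\n",
}
sample_volumes = ["vm-101-disk-0", "vm-105-disk-0", "vm-105-disk-1"]

matrix = build_matrix(sample_configs)
candidates = unreferenced(sample_volumes, matrix)
print(candidates)
```

The result is only a list of candidates. Because the pattern is matched against the whole file, volumes named in snapshot sections or in `unused` entries count as referenced, so each candidate should still be checked by hand before anything is deleted.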


The question marks are there because the PBS was dead and so there was no connection. Now that I have restored it, the question marks are gone.

Your first PBS log shows that the login credentials for the PBS are wrong; hence the question marks on the PBS datastores in the sidebar of your PVE.