Hi,

We recently replaced our network storage with a new server and connected the new storage via SMB/CIFS.

Since then, whether the backup runs on schedule or manually, it always fails at 15% (on the second 15% progress line) with the same error, shown below. We tried backing up to local storage and to other storages, and even moved the disk to a different storage, but we keep getting the same error.

The error is:

INFO: starting new backup job: vzdump 107 --compress zstd --mode snapshot --node inegielc1-proxa --remove 0 --notes-template '{{guestname}}' --storage VM-Library-PRD

INFO: Starting Backup of VM 107 (qemu)

INFO: Backup started at 2023-10-12 16:34:16

INFO: status = running

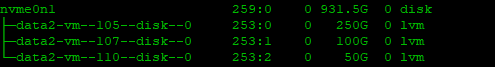

INFO: include disk 'scsi0' 'data2:vm-107-disk-0' 100G

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: creating vzdump archive '/mnt/pve/VM-Library-PRD/dump/vzdump-qemu-107-2023_10_12-16_34_16.vma.zst'

INFO: started backup task '04a05974-7183-436a-8ae7-ce17a4aee2be'

INFO: resuming VM again

INFO: 2% (2.2 GiB of 100.0 GiB) in 3s, read: 767.3 MiB/s, write: 218.2 MiB/s

|...

INFO: 15% (15.2 GiB of 100.0 GiB) in 36s, read: 276.4 MiB/s, write: 276.2 MiB/s

INFO: 15% (15.4 GiB of 100.0 GiB) in 37s, read: 183.1 MiB/s, write: 181.3 MiB/s

ERROR: job failed with err -61 - No data available

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 107 failed - job failed with err -61 - No data available

INFO: Failed at 2023-10-12 16:35:02

INFO: Backup job finished with errors

TASK ERROR: job errors

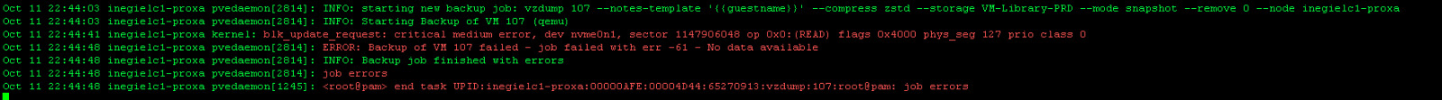

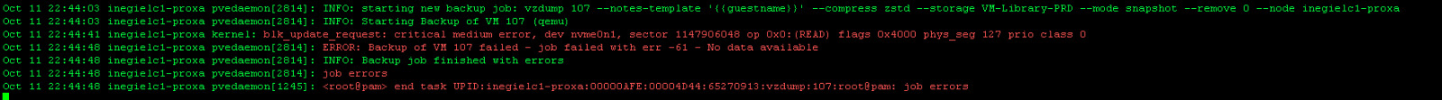
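The two numbers that matter in this log are the last progress line before the abort and the error code. The `-61` appears to be a negated Linux errno: on Linux, 61 is `ENODATA`, whose standard message is "No data available", matching the text in the log. A minimal Python 3.9+ sketch that pulls both out of the log (the helper names `last_progress` and `decode_err` are hypothetical, and the regexes assume the exact line format quoted above):

```python
import errno
import os
import re

# Excerpt of the vzdump output quoted above (only the lines the helpers need).
LOG = """\
INFO: 2% (2.2 GiB of 100.0 GiB) in 3s, read: 767.3 MiB/s, write: 218.2 MiB/s
INFO: 15% (15.2 GiB of 100.0 GiB) in 36s, read: 276.4 MiB/s, write: 276.2 MiB/s
INFO: 15% (15.4 GiB of 100.0 GiB) in 37s, read: 183.1 MiB/s, write: 181.3 MiB/s
ERROR: job failed with err -61 - No data available
"""

# "INFO: <pct>% (<done> GiB of <total> GiB)" progress lines.
PROGRESS_RE = re.compile(r"INFO: (\d+)% \(([\d.]+) GiB of ([\d.]+) GiB\)")
# "err -<n>" as printed in the failure line.
ERR_RE = re.compile(r"err (-\d+)")


def last_progress(log: str) -> tuple[int, float, float]:
    """Return (percent, GiB done, GiB total) from the last progress line."""
    matches = PROGRESS_RE.findall(log)
    if not matches:
        raise ValueError("no progress lines found")
    pct, done, total = matches[-1]
    return int(pct), float(done), float(total)


def decode_err(code: int) -> tuple[str, str]:
    """Map a negative errno (as reported in the log) to its symbolic name and message."""
    num = -code
    return errno.errorcode.get(num, "UNKNOWN"), os.strerror(num)


print(last_progress(LOG))
match = ERR_RE.search(LOG)
print(decode_err(int(match.group(1))))
```

On a Linux host the second line should print `ENODATA` together with "No data available"; on other platforms the numeric values differ, which is why the sketch looks the name up at runtime instead of hard-coding it.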

In the logs we see:

The config of the VM is:

```
root@inegielc1-proxa:~# qm config 107
boot: order=scsi0;ide2;net0
cores: 2
ide2: none,media=cdrom
memory: 4092
meta: creation-qemu=6.1.0,ctime=1662992740
name: inegielc1-zabpb
net0: virtio=16:EF:8C:C8:6D:72,bridge=vmbr1,firewall=1,tag=255
numa: 0
onboot: 1
ostype: l26
scsi0: data2:vm-107-disk-0,cache=none,size=100G
scsihw: virtio-scsi-pci
smbios1: uuid=da1ea533-60a3-484c-b6d0-d410ae2923b7
sockets: 1
vmgenid: 9b453976-7037-46c0-90a8-f47b97a7b3c8
```
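The relevant detail in this config is that the VM's only data disk, `scsi0`, lives on the storage `data2` with `cache=none` and no explicit `aio=` option. A minimal Python 3.9+ sketch that splits such a disk line into storage, volume and options (`parse_qm_config`, `parse_disk` and `DiskSpec` are hypothetical helper names; it assumes the plain `key: value` layout shown above and ignores any `[snapshot]` sections) can help when checking whether a change such as `aio=threads` actually ended up in the config:

```python
from dataclasses import dataclass, field

# A few lines of the `qm config 107` output quoted above.
SAMPLE = """\
boot: order=scsi0;ide2;net0
cores: 2
memory: 4092
scsi0: data2:vm-107-disk-0,cache=none,size=100G
scsihw: virtio-scsi-pci
"""


@dataclass
class DiskSpec:
    volume: str  # "<storage>:<volume-name>"
    options: dict[str, str] = field(default_factory=dict)

    @property
    def storage(self) -> str:
        """Storage ID, i.e. the part of the volume before the first colon."""
        return self.volume.split(":", 1)[0]


def parse_qm_config(text: str) -> dict[str, str]:
    """Parse the current-config part of `qm config` output into key -> raw value.

    Stops at the first "[section]" header, so snapshot sections are ignored.
    """
    cfg: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("["):
            break
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(": ")
        if sep:
            cfg[key] = value
    return cfg


def parse_disk(value: str) -> DiskSpec:
    """Split a value like "data2:vm-107-disk-0,cache=none" into volume and options."""
    volume, *opts = value.split(",")
    options: dict[str, str] = {}
    for opt in opts:
        k, _, v = opt.partition("=")
        options[k] = v
    return DiskSpec(volume, options)


disk = parse_disk(parse_qm_config(SAMPLE)["scsi0"])
print(disk.storage, disk.options)
print("aio explicitly set:", "aio" in disk.options)
```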

Our version is:

```
root@inegielc1-proxa:~# pveversion -v
proxmox-ve: 7.4-1 (running kernel: 5.15.116-1-pve)
pve-manager: 7.4-16 (running version: 7.4-16/0f39f621)
pve-kernel-5.15: 7.4-6
pve-kernel-5.13: 7.1-9
pve-kernel-5.11: 7.0-10
pve-kernel-5.15.116-1-pve: 5.15.116-1
pve-kernel-5.15.108-1-pve: 5.15.108-2
pve-kernel-5.15.64-1-pve: 5.15.64-1
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.11.22-5-pve: 5.11.22-10
pve-kernel-5.11.22-3-pve: 5.11.22-7
pve-kernel-5.11.22-1-pve: 5.11.22-2
ceph-fuse: 15.2.13-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx4
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.4
libproxmox-backup-qemu0: 1.3.1-1
libproxmox-rs-perl: 0.2.1
libpve-access-control: 7.4.1
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.4-2
libpve-guest-common-perl: 4.2-4
libpve-http-server-perl: 4.2-3
libpve-rs-perl: 0.7.7
libpve-storage-perl: 7.4-3
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.2-2
lxcfs: 5.0.3-pve1
novnc-pve: 1.4.0-1
proxmox-backup-client: 2.4.3-1
proxmox-backup-file-restore: 2.4.3-1
proxmox-kernel-helper: 7.4-1
proxmox-mail-forward: 0.1.1-1
proxmox-mini-journalreader: 1.3-1
proxmox-offline-mirror-helper: 0.5.2
proxmox-widget-toolkit: 3.7.3
pve-cluster: 7.3-3
pve-container: 4.4-6
pve-docs: 7.4-2
pve-edk2-firmware: 3.20230228-4~bpo11+1
pve-firewall: 4.3-5
pve-firmware: 3.6-5
pve-ha-manager: 3.6.1
pve-i18n: 2.12-1
pve-qemu-kvm: 7.2.0-8
pve-xtermjs: 4.16.0-2
qemu-server: 7.4-4
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+3
vncterm: 1.7-1
zfsutils-linux: 2.1.11-pve1
```

What we tried:

- Restart or Shutdown VM and Proxmox Server.

Use a manual backup instead of schedule (other have no problems) - Select a different disk, even a local attached one.

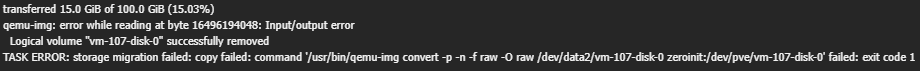

- Move disk --> we also get an error: qemu-img: error while reading at byte 16496194048: Input/output error

- Set Async IO to threads.

- Stop the VM and use manual backup with also stop mode and GZIP compression (instead of ZSTD)
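The backup failure and the disk-move failure may be hitting the same region of the source disk. Converting the byte offset from the `qemu-img` error into GiB (1 GiB = 2^30 bytes, the unit vzdump prints) places it between the last two progress lines of the backup log (15.2 GiB and 15.4 GiB). This plain-Python sketch only does the arithmetic; the constant and function names are illustrative, and the correlation it shows is suggestive, not proof of a specific cause:

```python
GIB = 1024 ** 3  # bytes per GiB

FAILING_OFFSET = 16496194048     # byte offset from the qemu-img error above
DISK_SIZE_GIB = 100.0            # size of scsi0 from the VM config
BACKUP_WINDOW_GIB = (15.2, 15.4) # last two progress values before the abort


def offset_to_gib(offset_bytes: int) -> float:
    """Convert a byte offset into GiB."""
    return offset_bytes / GIB


gib = offset_to_gib(FAILING_OFFSET)
print(f"qemu-img read error at {gib:.2f} GiB ({gib / DISK_SIZE_GIB:.1%} of the disk)")
low, high = BACKUP_WINDOW_GIB
print("inside the backup failure window:", low <= gib <= high)
```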