Hello,

I am running the latest version of Proxmox with several VMs.

One of them is TrueNAS, which I use to back up various things such as camera recordings.

I created the hddintbkp storage by passing the Proxmox server's second HDD through to the TrueNAS VM, which uses it only for backup storage, and then mounting it back on Proxmox as a NAS share over NFS.

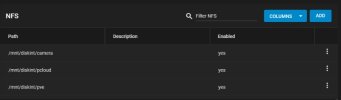

Code:

    root@pve:~# lsblk -o +MODEL,SERIAL,WWN
    NAME         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT MODEL               SERIAL           WWN
    sda            8:0    0   1.8T  0 disk            WDC_WD20SPZX-08UA7  WD-WX12E90D6Y78  0x50014ee2139aed6c
    ├─sda1         8:1    0     2G  0 part                                                 0x50014ee2139aed6c
    └─sda2         8:2    0   1.8T  0 part                                                 0x50014ee2139aed6c
    nvme0n1      259:0    0 953.9G  0 disk            INTEL SSDPEKNU010TZ BTKA204007L61P0B eui.0000000001000000e4d25c247cf35401
    ├─nvme0n1p1  259:1    0  1007K  0 part                                                 eui.0000000001000000e4d25c247cf35401
    ├─nvme0n1p2  259:2    0     1G  0 part /boot/efi                                       eui.0000000001000000e4d25c247cf35401
    └─nvme0n1p3  259:3    0 952.9G  0 part                                                 eui.0000000001000000e4d25c247cf35401
      ├─pve-swap 253:0    0     8G  0 lvm  [SWAP]
      └─pve-root 253:1    0 944.9G  0 lvm  /
    root@pve:~#

    root@pve:~# cat /etc/fstab
    # <file system>               <mount point>    <type> <options>             <dump> <pass>
    /dev/pve/root                 /                ext4   errors=remount-ro     0      1
    UUID=53FE-879F                /boot/efi        vfat   defaults              0      1
    /dev/pve/swap                 none             swap   sw                    0      0
    proc                          /proc            proc   defaults              0      0
    192.168.1.19:/mnt/diskint/pve /mnt/hddintbkp/  nfs    auto,_netdev,,nofail  0      0

    root@pve:~#

On the TrueNAS VM:

If you have any ideas, they are welcome.
