Hi there,

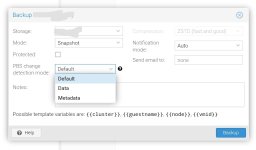

I am running PBS to back up some CTs and VMs running on PVE. Because the storage holds over 1.5 TB and sits on classical rotating HDDs, a backup job takes nearly 6 hours. I used the default settings for the backup job, with "Change detection mode" set to "Default".

Now I tried "Metadata" as the change detection mode, and the backup was dramatically faster (on the second run).

This brings me to the question of whether using "Metadata" as the change detection mode is safe, or whether there are any caveats that could break my backups. Since it seems much better from a speed perspective, I wonder whether not reading all blocks could mean that changes get missed.

Can you give me a hint on that? Thanks a lot!
