Now... this is the wealth of information that I desire!! Love it, thank you Beisser and Meyergru, very informative and helpful. I'm just making a second image of the W11 machine (in case it's ever needed), and I've downloaded the three Proxmox images (6.4, 8.4 & 9.1), so I will experiment today, working through those and testing this NIC.

Of course, if that doesn't bear fruit, then it looks like I'll start looking into the replacements you guys have suggested.

EDIT:

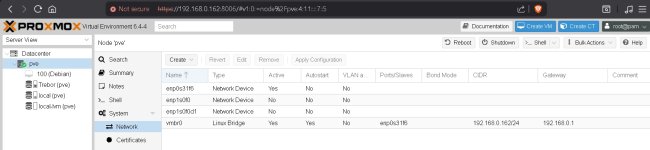

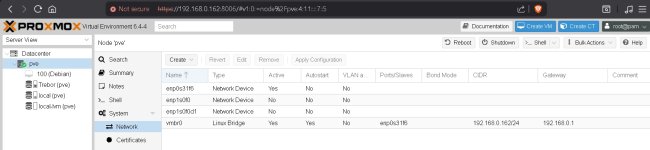

EDIT: Starting with the oldest, I'm now running a Debian Trixie VM inside PVE 6.4.

I googled 'show me network adaptors' and came up with this:

https://serverfault.com/questions/239807/how-to-list-all-physically-installed-network-cards-debian, which has a wealth of different methods. However, I've realised that I don't think this is going to help me. I fear my 60GB SSD (which, in reality, was only a 40GB) is going to be too small to test with, unless of course I'm overlooking something (always a possibility). I'd appreciate some input, please.
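For what it's worth, listing the physical cards shouldn't need any real disk space: Linux exposes every interface under `/sys/class/net`, and only hardware-backed ones have a `device` link there (loopback, bridges such as `vmbr0`, and tap/veth devices don't). Below is a minimal Python sketch of that check, assuming a standard Linux sysfs layout. One caveat: run it on the PVE host itself, because inside the Debian VM you would normally only see the VM's virtual NIC, not the physical card.

```python
from pathlib import Path

# Standard Linux location where the kernel lists every network interface.
SYSFS_NET = Path("/sys/class/net")


def physical_interfaces(root: Path = SYSFS_NET) -> list:
    """Return sorted names of interfaces backed by a hardware device.

    Virtual interfaces (lo, bridges like vmbr0, tap/veth) have no
    'device' link under <root>/<name>/, so they are skipped. Plain files
    such as 'bonding_masters' are skipped too, because '<file>/device'
    can never exist.
    """
    if not root.is_dir():
        return []
    return sorted(
        entry.name
        for entry in root.iterdir()
        if (entry / "device").exists()
    )


if __name__ == "__main__":
    # Prints one physical interface name per line, e.g. the new NIC.
    for name in physical_interfaces():
        print(name)
```

The standard `ip -br link` command gives a similar one-line-per-interface view (including virtual ones), which is handy for cross-checking the names.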

Ideally I'd like to crank up a 10Gb connection by connecting the NIC to my switch (which has 4 SFP ports) and then use some terminal black magic to see whether it is working. However, when it comes to actually doing this, I'm kinda lost :-(
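Once the card is cabled to the switch, the "black magic" can be fairly mundane: the kernel reports each interface's state in `/sys/class/net/<iface>/operstate` and its negotiated speed in Mb/s in `/sys/class/net/<iface>/speed`, so a 10Gb link should show `up` and `10000`. The Python sketch below reads both; the interface name `enp1s0` is only a hypothetical placeholder, so substitute whatever name the card actually has on your host.

```python
import sys
from pathlib import Path
from typing import Optional, Tuple

SYSFS_NET = Path("/sys/class/net")


def read_attr(iface: str, attr: str, root: Path) -> Optional[str]:
    """Read one sysfs attribute, or return None if it can't be read."""
    try:
        return (root / iface / attr).read_text().strip()
    except OSError:
        # Missing file, or EINVAL: sysfs refuses to report 'speed'
        # while the interface is administratively down.
        return None


def link_status(iface: str, root: Path = SYSFS_NET) -> Tuple[str, Optional[int]]:
    """Return (operstate, speed in Mb/s or None if unknown)."""
    state = read_attr(iface, "operstate", root) or "unknown"
    raw_speed = read_attr(iface, "speed", root)
    try:
        speed = int(raw_speed) if raw_speed is not None else None
    except ValueError:
        speed = None
    if speed is not None and speed < 0:
        speed = None  # -1 means the driver doesn't know the speed (e.g. no link)
    return state, speed


def is_10g_up(iface: str, root: Path = SYSFS_NET) -> bool:
    """True if the link is up and negotiated at 10 Gb/s (10000 Mb/s) or more."""
    state, speed = link_status(iface, root)
    return state == "up" and speed is not None and speed >= 10000


if __name__ == "__main__":
    # Hypothetical default name; pass the real one as the first argument.
    iface = sys.argv[1] if len(sys.argv) > 1 else "enp1s0"
    state, speed = link_status(iface)
    print(f"{iface}: operstate={state}, speed={speed} Mb/s")
    print("10 Gb/s link is up" if is_10g_up(iface) else "no 10 Gb/s link")
```

If `ethtool` is installed, `ethtool <iface>` shows the same information as `Speed:` and `Link detected:` lines.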

After that, I'd repeat the process, moving forward through the PVE releases to try the newer builds.

Please can somebody guide me forward?