Hi,

OK, I have a Proxmox 6.4 cluster I set up.

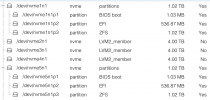

The boot volume is a mirrored ZFS setup, with 2 x 1TB disks (/dev/nvme1n1 and /dev/nvme5n1).

I fat-fingered a command and accidentally ran `ceph-volume lvm zap --destroy` on one of the two disks (/dev/nvme5n1) *sad face*.

The ZFS partition itself on that disk (/dev/nvme5n1p3) seems to be intact, because ceph-volume failed with "Device or resource busy" when it tried to wipe it.

```
--> Zapping: /dev/nvme5n1
Running command: /usr/bin/dd if=/dev/zero of=/dev/nvme5n1p2 bs=1M count=10 conv=fsync
stderr: 10+0 records in
10+0 records out
stderr: 10485760 bytes (10 MB, 10 MiB) copied, 0.00988992 s, 1.1 GB/s
--> Destroying partition since --destroy was used: /dev/nvme5n1p2
Running command: /usr/sbin/parted /dev/nvme5n1 --script -- rm 2
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
stderr: wipefs: error: /dev/nvme5n1p3: probing initialization failed: Device or resource busy
--> failed to wipefs device, will try again to workaround probable race condition
--> RuntimeError: could not complete wipefs on device: /dev/nvme5n1p3
```

However, I've noticed that the EFI partition (/dev/nvme5n1p2) on that disk (/dev/nvme5n1) is gone.
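To make the log easier to read, here is a minimal Python sketch that tallies what ceph-volume did to each partition. It works on a condensed, pasted copy of the output above (the "will try again" retry messages are left out), assumes the line format shown there, and only parses text; it does not touch any disk. The function name `summarize` is just for illustration.

```python
import re
from collections import defaultdict

# Condensed copy of the ceph-volume output above; the
# "will try again" retry messages are omitted.
WIPEFS_ERR = ("stderr: wipefs: error: /dev/nvme5n1p3: probing initialization "
              "failed: Device or resource busy")
LOG = "\n".join([
    "--> Zapping: /dev/nvme5n1",
    "Running command: /usr/bin/dd if=/dev/zero of=/dev/nvme5n1p2 bs=1M count=10 conv=fsync",
    "--> Destroying partition since --destroy was used: /dev/nvme5n1p2",
    "Running command: /usr/sbin/parted /dev/nvme5n1 --script -- rm 2",
    *[WIPEFS_ERR] * 8,
    "--> RuntimeError: could not complete wipefs on device: /dev/nvme5n1p3",
])


def summarize(log: str) -> dict:
    """Count zero-fills, partition removals and wipefs failures per device."""
    actions = defaultdict(lambda: defaultdict(int))
    for line in log.splitlines():
        if m := re.search(r"dd if=/dev/zero of=(\S+)", line):
            actions[m.group(1)]["zeroed"] += 1
        elif m := re.search(r"Destroying partition .*: (\S+)$", line):
            actions[m.group(1)]["destroyed"] += 1
        elif m := re.search(r"wipefs: error: (\S+):", line):
            actions[m.group(1)]["wipefs_failed"] += 1
    # Convert the nested defaultdicts to plain dicts for readable output.
    return {dev: dict(counts) for dev, counts in actions.items()}


if __name__ == "__main__":
    for dev, counts in summarize(LOG).items():
        print(dev, counts)
```

Run as a script, it prints that /dev/nvme5n1p2 was zeroed and then removed, while /dev/nvme5n1p3 only shows eight failed wipefs attempts. As far as this log shows, that is consistent with the ZFS partition not having been overwritten.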

First, what is the impact of this?

Second, is there any way to restore or rebuild this EFI partition, please?

Thanks,

Victor