All VMs locking up after latest PVE update

- Thread starter jro


For the past 2 months we've hit this a couple of times, with all VMs freezing.

> That regression came in with 5.2-1 (the first 5.2 release) and was fixed with 5.2-4, so all versions in-between were affected.

Why do you ask?

The last freeze occurred last night on a 7-node cluster (storage: Ceph, backups to PBS). Right after the PBS backup finished on one node, all VMs started to freeze, with the following messages in the logs:

Code:

May 10 22:03:04 pm-pm1.pminner.cluster.eu pvestatd[3545]: VM 82075205 qmp command failed - VM 82075205 qmp command 'query-proxmox-support' failed - unable to connect to VM 82075205 qmp socket - timeout after 31 retries

May 10 22:03:07 pm-pm1.pminner.cluster.eu pvestatd[3545]: VM 82075202 qmp command failed - VM 82075202 qmp command 'query-proxmox-support' failed - unable to connect to VM 82075202 qmp socket - timeout after 31 retries

May 10 22:03:10 pm-pm1.pminner.cluster.eu pvestatd[3545]: VM 82076201 qmp command failed - VM 82076201 qmp command 'query-proxmox-support' failed - unable to connect to VM 82076201 qmp socket - timeout after 31 retries

This left all VMs on that node frozen. The Proxmox host itself still responded to SSH and the web GUI; we had to do a full node reboot to get things back online.
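The qmp timeout messages above all share one format. With a longer log, it helps to see which guests are affected and how often. The following is a minimal sketch. It assumes the syslog lines are available as text, for example from /var/log/syslog or journalctl output. The function name is hypothetical and the code is not part of any Proxmox tool. It counts these timeouts per VM ID:

```python
import re
from collections import Counter

# Matches the pvestatd/pvedaemon message format quoted in this thread, e.g.
# "VM 207 qmp command failed - VM 207 qmp command 'query-proxmox-support' failed -
#  unable to connect to VM 207 qmp socket - timeout after 31 retries"
# The backreference \1 requires the same VM ID in both places.
QMP_TIMEOUT = re.compile(
    r"VM (\d+) qmp command failed - .*?"
    r"unable to connect to VM \1 qmp socket - timeout after \d+ retries"
)


def qmp_timeouts(lines):
    """Count qmp socket timeouts per VM ID in an iterable of syslog lines."""
    counts = Counter()
    for line in lines:
        match = QMP_TIMEOUT.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts
```

For example, qmp_timeouts(open("/var/log/syslog")).most_common() lists the most frequently affected VMs first. Unrelated lines, such as "status update time", are ignored.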

All nodes run the following:

Code:

# dpkg -l|grep pve-qemu-kvm

ii pve-qemu-kvm 5.2.0-3 amd64 Full virtualization on x86 hardware

According to the quoted message from Thomas, 5.2.0-3 should not be affected, but it looks like it is.

For now we've disabled PBS backups to avoid further VM freezes. I'm also unsure what to do next: has this been fixed in the most recent version of Proxmox? (We're currently running pve-manager/6.3-6/2184247e.)

To quote again:

> ... and was fixed with 5.2-4, so all versions in-between were affected.

You posted output showing you are running 5.2-3, so you are affected. Upgrade your packages and restart or live-migrate your VMs.
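The affected range is stated here as 5.2-1 up to, but not including, 5.2-4. The following is a minimal sketch of that check. It assumes these correspond to the pve-qemu-kvm package versions 5.2.0-1 and 5.2.0-4, and it handles only plain numeric "X.Y.Z-N" versions. For real Debian version ordering, dpkg --compare-versions is the authoritative tool. The function names are hypothetical:

```python
def parse_simple_version(version):
    """Split a plain numeric version such as '5.2.0-3' into ((5, 2, 0), 3)
    so that versions compare correctly as tuples.

    Assumption: only 'X.Y.Z-N' with integer parts; epochs, '~' and letters
    are not supported.
    """
    upstream, _, revision = version.partition("-")
    return tuple(int(part) for part in upstream.split(".")), int(revision or 0)


# Affected range as stated in this thread: introduced in 5.2-1, fixed in 5.2-4.
# Assumption: these map to pve-qemu-kvm package versions 5.2.0-1 and 5.2.0-4.
FIRST_AFFECTED = parse_simple_version("5.2.0-1")
FIRST_FIXED = parse_simple_version("5.2.0-4")


def has_qemu_freeze_regression(installed):
    """True if the installed pve-qemu-kvm version lies in the affected range."""
    return FIRST_AFFECTED <= parse_simple_version(installed) < FIRST_FIXED
```

With these definitions, has_qemu_freeze_regression("5.2.0-3") is True, and "5.2.0-4" and later are not flagged. This only checks the installed package. A VM started before the upgrade keeps running the old QEMU binary until it is restarted or live-migrated.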


PVE Manager Version pve-manager/6.4-6/be2fa32c

pve-qemu-kvm 5.2.0-6 amd64 Full virtualization on x86 hardware

Syslog:

May 20 02:22:09 R540 pvestatd[1716]: VM 207 qmp command failed - VM 207 qmp command 'query-proxmox-support' failed - unable to connect to VM 207 qmp socket - timeout after 31 retries

...

The backup failed and the VMs are stopped or frozen.

Any ideas?


I have sometimes the same problem with VMs. which disk drive is rellocated on NFS Storage.

At first I have faced with problem one month ago with server on my second work, but I was thought that there is problem with HDD on NFS Server (Another proxmox with ZFS RAIDz1-0).

But now there problem also contains me.

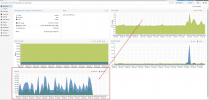

At this moment in Proxmox GUI there is high DISK IO in Dashboard on All VMs and not on Host, but doesn't responds only one VM with log records VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

After manually stopping and starting this VM - the problem is gone.


Code:

Jan 20 21:25:05 brabus pvestatd[2071]: VM 1271 qmp command failed - VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

Jan 20 21:25:05 brabus pvedaemon[2025903]: <root@pam> successful auth for user 'apiproxmox@pve'

Jan 20 21:25:05 brabus pvestatd[2071]: status update time (6.169 seconds)

Jan 20 21:25:15 brabus pvestatd[2071]: VM 1271 qmp command failed - VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

Jan 20 21:25:15 brabus pvestatd[2071]: status update time (6.151 seconds)

Jan 20 21:25:20 brabus pvedaemon[2025903]: <root@pam> successful auth for user 'apiproxmox@pve'

Jan 20 21:25:25 brabus pvestatd[2071]: VM 1271 qmp command failed - VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

Jan 20 21:25:26 brabus pvestatd[2071]: status update time (6.166 seconds)

Jan 20 21:25:28 brabus pvedaemon[2026132]: <root@pam> successful auth for user 'zhitomirskiy@hms'

Jan 20 21:25:35 brabus pvestatd[2071]: VM 1271 qmp command failed - VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

Jan 20 21:25:35 brabus pvestatd[2071]: status update time (6.152 seconds)

Jan 20 21:25:35 brabus pvedaemon[2025903]: <root@pam> successful auth for user 'apiproxmox@pve'

Jan 20 21:25:45 brabus pvestatd[2071]: VM 1271 qmp command failed - VM 1271 qmp command 'query-proxmox-support' failed - unable to connect to VM 1271 qmp socket - timeout after 31 retries

proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)

pve-manager: 7.1-8 (running version: 7.1-8/5b267f33)

pve-kernel-helper: 7.1-6

pve-kernel-5.13: 7.1-5

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 14.2.21-1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: not correctly installed

ifupdown2: 3.1.0-1+pmx3

ksmtuned: 4.20150326

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.0

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-14

libpve-guest-common-perl: 4.0-3

libpve-http-server-perl: 4.0-4

libpve-storage-perl: 7.0-15

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.11-1

lxcfs: 4.0.11-pve1

novnc-pve: 1.3.0-1

proxmox-backup-client: 2.1.2-1

proxmox-backup-file-restore: 2.1.2-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.4-4

pve-cluster: 7.1-3

pve-container: 4.1-3

pve-docs: 7.1-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.3-4

pve-ha-manager: 3.3-1

pve-i18n: 2.6-2

pve-qemu-kvm: 6.1.0-3

pve-xtermjs: 4.12.0-1

qemu-server: 7.1-4

smartmontools: 7.2-pve2

spiceterm: 3.2-2

swtpm: 0.7.0~rc1+2

vncterm: 1.7-1


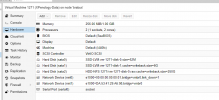

Up... I have the same error.

Can you please also post the config of an affected VM (qm config VMID)? Thanks!

Yes, but there are no specific settings in the VM.

By the way, I'm not using Proxmox Backup Server.

Code:

balloon: 256

boot: c

bootdisk: sata0

cores: 2

hotplug: disk,network,usb

memory: 1024

name: XPenology-Data

net0: e1000=00:00:00:00:00:01,bridge=vmbr0,link_down=1

net1: e1000=DA:53:41:29:A6:98,bridge=vmbr0

numa: 0

onboot: 1

ostype: l26

sata0: SSD-LVM:vm-1271-disk-0,size=52M

sata1: SSD-LVM:vm-1271-disk-1,cache=writeback,size=6G

sata2: HDD-NFS:1271/vm-1271-disk-0.raw,cache=writeback,size=250G

scsihw: virtio-scsi-pci

serial0: socket

smbios1: uuid=e545f02a-16ce-4d20-bf21-838a98f7c4d0

sockets: 1

startup: order=71,up=5

tablet: 0
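This config combines disks on local SSD-LVM with one disk on HDD-NFS, and both sata1 and sata2 use cache=writeback. The following minimal sketch spots that combination across many VMs. It only parses the "key: value" text that qm config prints, and the helper names are hypothetical:

```python
import re

# Disk bus keys as they appear in 'qm config' output (ide2, sata0, scsi1, virtio0, ...).
DISK_KEY = re.compile(r"^(ide|sata|scsi|virtio)\d+$")


def parse_qm_config(text):
    """Turn 'qm config VMID' output ('key: value' per line) into a dict."""
    config = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split at the first colon only
        if sep:
            config[key.strip()] = value.strip()
    return config


def writeback_disks_on(config, storages):
    """Return {disk_key: spec} for disks on the given storages that use cache=writeback."""
    hits = {}
    for key, spec in config.items():
        if not DISK_KEY.match(key):
            continue  # skips net0, scsihw, smbios1, ...
        volume, *options = spec.split(",")
        storage = volume.split(":", 1)[0]  # 'HDD-NFS:1271/...' -> 'HDD-NFS'
        if storage in storages and "cache=writeback" in options:
            hits[key] = spec
    return hits
```

Given the config above, writeback_disks_on(parse_qm_config(text), {"HDD-NFS"}) returns only sata2.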


So NFS then, maybe even to theBy the way - I'm not using Proxmox Backup Server.

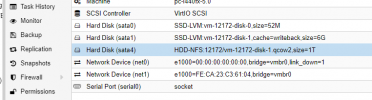

HDD-NFS storage? Can you please also post the output of pvesm status?You are right, NFS -> HDD-NFSSo NFS then, maybe even to theHDD-NFSstorage? Can you please also post the output ofpvesm status?

Code:

root@brabus:~# pvesm status

Name Type Status Total Used Available %

Backup dir active 7751366384 1854768748 5505879976 23.93%

HDD-NFS nfs active 3221225472 505371648 2715853824 15.69%

SSD-LVM lvm active 234426368 120905728 113520640 51.58%

Template dir active 7751366384 1854768748 5505879976 23.93%

local dir disabled 0 0 0 N/A

Code:

root@crocus:~# pvesm status

Name Type Status Total Used Available %

Backup nfs active 7751366656 1854768128 5505880064 23.93%

HDD-NFS nfs active 3221225472 505371648 2715853824 15.69%

HDD-ZFS zfspool active 13696708589 3039088118 10657620471 22.19%

SSD-LVM lvm active 31436800 19398656 12038144 61.71%

Template nfs active 7751366656 1854768128 5505880064 23.93%

local dir disabled 0 0 0 N/A
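To check which storages in such a listing are NFS-backed, the table can be parsed directly. The following is a minimal sketch. It assumes the seven-column pvesm status layout shown above (Name Type Status Total Used Available %), and the helper names are hypothetical:

```python
def parse_pvesm_status(text):
    """Parse 'pvesm status' output into {name: (type, status)}.

    Lines without exactly seven columns (e.g. a pasted shell prompt) and
    the header row are skipped.
    """
    storages = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 7 or fields[0] == "Name":
            continue
        name, storage_type, status = fields[:3]
        storages[name] = (storage_type, status)
    return storages


def active_storages_of_type(storages, storage_type):
    """Sorted names of active storages of one type, e.g. 'nfs'."""
    return sorted(
        name
        for name, (stype, status) in storages.items()
        if stype == storage_type and status == "active"
    )
```

For the crocus listing above, active_storages_of_type(parse_pvesm_status(text), "nfs") gives Backup, HDD-NFS and Template.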

Code:

root@crocus:~# zpool status

pool: zfs

state: ONLINE

scan: scrub repaired 0B in 03:38:02 with 0 errors on Sun Jan 9 04:02:03 2022

config:

NAME STATE READ WRITE CKSUM

zfs ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

wwn-0x5000c500b48a21ab-part1 ONLINE 0 0 0

wwn-0x5000c500b48ce0e7-part1 ONLINE 0 0 0

wwn-0x5000c500b4a09e92-part1 ONLINE 0 0 0

wwn-0x5000c500b4a3fd5f-part1 ONLINE 0 0 0

wwn-0x5000c500b4a5c689-part1 ONLINE 0 0 0

errors: No known data errors

Code:

root@crocus:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

zfs 11.6T 9.93T 192K /zfs

zfs/acrus-logs 3.11M 9.93T 3.11M /zfs/acrus-logs

zfs/andy 3.60G 9.93T 3.60G /zfs/andy

zfs/elacrus 9.00G 1015G 9.00G /zfs/elacrus

zfs/iscsi 8.16T 18.1T 9.61G -

zfs/nfs 482G 2.53T 482G /zfs/nfs

zfs/prs 62.9G 437G 62.9G /zfs/prs

zfs/public 49.9G 9.93T 49.9G /zfs/public

zfs/tmp 50.3M 1024G 50.3M /zfs/tmp

zfs/zfs 2.83T 9.93T 179K /zfs/zfs

zfs/zfs/subvol-1320-disk-0 188G 312G 188G /zfs/zfs/subvol-1320-disk-0

zfs/zfs/subvol-1320-disk-1 2.64T 9.36T 2.64T /zfs/zfs/subvol-1320-disk-1

zfs/zfs/subvol-1320-disk-2 3.23G 96.8G 3.23G /zfs/zfs/subvol-1320-disk-2

zfs/zfs/subvol-1320-disk-3 762M 99.3G 762M /zfs/zfs/subvol-1320-disk-3


Update.

After changing the cache from Writeback to Default (No cache) on every VM whose disks are located on NFS, there have been no problems at all.

Uptime: 6+ days.

With Writeback, the VM froze every day.

This post https://forum.proxmox.com/threads/c...-lockup-cpu-0-stock-for-24s.84212/post-453811 pointed me toward changing the cache to Default.
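In the GUI, Default (No cache) appears to correspond to leaving the cache option out of the disk line entirely. The following minimal sketch shows how the same change might be scripted for many disks. It strips cache= from a disk spec and builds a matching qm set command line to review before running. The helper names are hypothetical, and the exact qm set usage is an assumption worth checking against the qm man page:

```python
import shlex


def drop_cache_option(disk_spec):
    """Remove any 'cache=...' option from a disk spec, keeping the volume and other options.

    'HDD-NFS:1271/vm-1271-disk-0.raw,cache=writeback,size=250G'
    -> 'HDD-NFS:1271/vm-1271-disk-0.raw,size=250G'
    """
    volume, *options = disk_spec.split(",")
    return ",".join([volume] + [opt for opt in options if not opt.startswith("cache=")])


def qm_set_args(vmid, disk_key, disk_spec):
    """Argument list for 'qm set' that rewrites one disk line without cache=."""
    return ["qm", "set", str(vmid), f"--{disk_key}", drop_cache_option(disk_spec)]


def qm_set_command(vmid, disk_key, disk_spec):
    """Same as qm_set_args, joined into a shell-safe command line for review."""
    return shlex.join(qm_set_args(vmid, disk_key, disk_spec))
```

For sata2 from the config earlier in this thread, qm_set_command(1271, "sata2", "HDD-NFS:1271/vm-1271-disk-0.raw,cache=writeback,size=250G") yields "qm set 1271 --sata2 HDD-NFS:1271/vm-1271-disk-0.raw,size=250G". On a running VM, the new cache setting may only take effect after a full stop and start.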


Hi, I've just started having the same problem, but only on one VM (310) during backup. I got the following error message in syslog:

Apr 23 09:39:20 proxmox5 pvedaemon[1493255]: VM 310 qmp command failed - VM 310 qmp command 'query-proxmox-support' failed - unable to connect to VM 310 qmp socket - timeout after 31 retries

This issue only started after I updated last week. Here is the PVE version:

proxmox-ve: 7.1-1 (running kernel: 5.13.19-6-pve)

pve-manager: 7.1-12 (running version: 7.1-12/b3c09de3)

pve-kernel-helper: 7.1-14

pve-kernel-5.13: 7.1-9

pve-kernel-5.11: 7.0-10

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-4-pve: 5.13.19-9

pve-kernel-5.13.19-2-pve: 5.13.19-4

pve-kernel-5.13.19-1-pve: 5.13.19-3

pve-kernel-5.11.22-7-pve: 5.11.22-12

pve-kernel-5.11.22-5-pve: 5.11.22-10

pve-kernel-5.11.22-4-pve: 5.11.22-9

ceph: 16.2.7

ceph-fuse: 16.2.7

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-7

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.1-5

libpve-guest-common-perl: 4.1-1

libpve-http-server-perl: 4.1-1

libpve-storage-perl: 7.1-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.12-1

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-2

proxmox-backup-client: 2.1.6-1

proxmox-backup-file-restore: 2.1.6-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.4-9

pve-cluster: 7.1-3

pve-container: 4.1-4

pve-docs: 7.1-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.3-6

pve-ha-manager: 3.3-3

pve-i18n: 2.6-2

pve-qemu-kvm: 6.2.0-3

pve-xtermjs: 4.16.0-1

qemu-server: 7.1-4

smartmontools: 7.2-1

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1

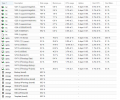

And here is the output of qm config 310:

cores: 4

cpu: host

description:

ide2: none,media=cdrom

machine: q35

memory: 8064

meta: creation-qemu=6.1.0,ctime=1644172601

name: Phil-1

net0: virtio=12:D7:83:29:B1:21,bridge=vmbr4,link_down=1

net1: virtio=92:21:C6:48:DA:80,bridge=vmbr3,firewall=1

numa: 0

ostype: l24

sata0: ssd_vm:vm-310-disk-0,discard=on,size=2032G

sata1: ssd_vm:vm-310-disk-1,discard=on,size=2032G

scsihw: virtio-scsi-pci

serial0: socket

smbios1: uuid=e5cd2046-680c-498d-9eca-a15b18580474

sockets: 1

vmgenid: c202190a-9ea9-4569-83ee-bd3d42c9c21e

Any help would be much appreciated.


I have no NFS disks, only Ceph drives, and I can't see why only this VM has the issue. Backups of the other VMs take 20% longer than before, but I'm not sure whether that is worth raising an alarm over.

Here is my output of pvesm status:

VM_Backup cifs active 28107205512 21264030312 6843175200 75.65%

VM_Template cifs active 28107205512 21264030312 6843175200 75.65%

local dir active 225517696 24933248 200584448 11.06%

local-vm-zfs0 zfspool disabled 0 0 0 N/A

local-zfs zfspool active 200584644 96 200584548 0.00%

ssd_vm rbd active 4672605015 880588887 3792016128 18.85%


A few days ago I joined the club as well.

When I start the VM, I get this in syslog:

May 22 08:29:02 hvn01 pvedaemon[10102]: Failed to run vncproxy.

May 22 08:29:02 hvn01 pvedaemon[1347]: <root@pam> end task UPID:hvn01:00002776:00028673:646B0BAA:vncproxy:100:root@pam: Failed to run vncproxy.

May 22 08:29:02 hvn01 pvestatd[1317]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - unable to connect to VM 100 qmp socket - timeout after 31 retries

May 22 08:29:02 hvn01 pvestatd[1317]: status update time (6.412 seconds)

The VM has no backup and no Ceph. It's a router, and it doesn't matter when it goes down because it's a CARP device.

Proxmox version 7.2.x

pveversion -v | grep -E "proxmox-ve|kvm|backup-client"

proxmox-ve: 7.2-1 (running kernel: 5.15.30-2-pve)

proxmox-backup-client: 2.1.8-1

pve-qemu-kvm: 6.2.0-5

root@hvn01:/var/log# pveversion -v

proxmox-ve: 7.2-1 (running kernel: 5.15.30-2-pve)

pve-manager: 7.2-3 (running version: 7.2-3/c743d6c1)

pve-kernel-helper: 7.2-2

pve-kernel-5.15: 7.2-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 15.2.16-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-8

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.1-6

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-1

libpve-storage-perl: 7.2-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.12-1

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.1.8-1

proxmox-backup-file-restore: 2.1.8-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.4-10

pve-cluster: 7.2-1

pve-container: 4.2-1

pve-docs: 7.2-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.4-1

pve-ha-manager: 3.3-4

pve-i18n: 2.7-1

pve-qemu-kvm: 6.2.0-5

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-2

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1

Can anyone point me in the right direction?

Thank you.

Edit: solved, sorry for the spam.
