Could people experiencing the issue please check/post their `dmesg` and system journal from the time when the issue occurs?
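Here is a minimal sketch for gathering both logs in one go. It assumes a bash shell running as root on the affected node. `collect_logs` and its `DRY_RUN` switch are hypothetical names introduced here. `dmesg -T` and `journalctl --since/--until` are standard util-linux and systemd options.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with Proxmox VE): save the kernel ring buffer
# and the journal for a time window around a hang, so both can be attached to a post.
# Usage: collect_logs "2021-03-09 00:30" "2021-03-09 01:00" /root
# Set DRY_RUN=1 to only print the commands instead of running them.
collect_logs() {
  local from="$1" to="$2" outdir="${3:-.}"
  if [ -n "${DRY_RUN:-}" ]; then
    printf 'dmesg -T > %s/dmesg.txt\n' "$outdir"
    printf "journalctl --since '%s' --until '%s' > %s/journal.txt\n" "$from" "$to" "$outdir"
    return 0
  fi
  # -T converts timestamps to wall-clock time (can be off after suspend/resume).
  dmesg -T > "$outdir/dmesg.txt"
  journalctl --since "$from" --until "$to" > "$outdir/journal.txt"
}
```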

All VMs locking up after latest PVE update

- Thread starter jro



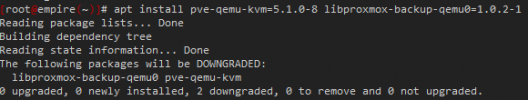

It turns out rolling back pve-manager wasn't sufficient; I was still having issues after I tried rebooting a few VMs. I rolled back libproxmox-backup-qemu0 and pve-qemu-kvm as well:


```
apt install pve-qemu-kvm=5.1.0-8 libproxmox-backup-qemu0=1.0.2-1
```

Stoiko, I didn't see your reply until after I had rolled back and rebooted, so I don't have a dmesg to share, but if it happens again, I will share it. I did check dmesg back when I was having issues on Friday and didn't see anything glaring. I'll also share the system journal.
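The rollback above can be combined with an apt hold, so that the next upgrade does not pull the newer builds straight back in. This is a minimal sketch. It assumes the two versions quoted above are still available from the configured repository. `rollback_and_hold` and `DRY_RUN` are hypothetical names; `apt-mark hold` is the standard apt mechanism. Running VMs keep using the binary they were started with until they are fully stopped and started again, or until the host is rebooted.

```shell
#!/usr/bin/env bash
# Versions posters in this thread rolled back to; a reported workaround, not an official fix.
PINNED=(pve-qemu-kvm=5.1.0-8 libproxmox-backup-qemu0=1.0.2-1)

# Hypothetical helper: downgrade to the pinned versions, then hold them.
# Set DRY_RUN=1 to only print the commands.
rollback_and_hold() {
  local names=() spec
  for spec in "${PINNED[@]}"; do
    names+=("${spec%%=*}")   # strip "=version" to get the bare package name
  done
  if [ -n "${DRY_RUN:-}" ]; then
    echo "apt install ${PINNED[*]}"
    echo "apt-mark hold ${names[*]}"
    return 0
  fi
  apt install "${PINNED[@]}" && apt-mark hold "${names[@]}"
}
```

Once a fixed version is available, the hold can be lifted again with `apt-mark unhold pve-qemu-kvm libproxmox-backup-qemu0`.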

Note that I had a relatively similar-looking issue there with PVE running a PBS VM:

https://forum.proxmox.com/threads/p...er-70mn-pbs-server-crashed.85312/#post-375257

That host also has an old CPU, an Atom C2550 (like other posters here), and I had "qmp failed" messages too. I then found out this was because the KVM process of the VM was killed by the OOM killer. Maybe other users have a similar OOM problem.

I've now deactivated swap inside the VM, and there have been no issues so far.
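To check whether the OOM killer was involved on a given host, you can filter the kernel log for its kill messages. This is a minimal sketch. `oom_kills` is a hypothetical name. The regex assumes the message wording of recent kernels ("Killed process", including the memory-cgroup variant) and of older ones ("Kill process"). On a PVE host, the VM process should show up under the name `kvm`.

```shell
#!/usr/bin/env bash
# Hypothetical filter: reads kernel log text on stdin and prints "<pid> <name>"
# for every process the OOM killer terminated.
# Usage: journalctl -k | oom_kills    or    dmesg | oom_kills
oom_kills() {
  # -i also catches "Memory cgroup out of memory: Killed process ..."
  grep -oiE 'out of memory: kill(ed)? process [0-9]+ \([^)]+\)' |
    sed -E 's/.*process ([0-9]+) \(([^)]+)\)/\1 \2/'
}
```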


I've been having the same issue since upgrading to 6.3-4. I run a 3-node cluster (home lab) using a Dell PowerEdge T620 and a couple of OptiPlex 3070s. The issue only occurs on the T620.


When the issue occurs, most (but not all) of the VMs become unresponsive. Which VMs are affected is different each time. The console doesn't connect, and I can't see any related issues in the logs other than QMP timeouts.

The issue was occurring every night during the PBS backup. The backup would start at 04:00, and from 04:02 onwards I'd start to get the QMP timeout messages in the logs. The backup itself would keep working (it usually takes ~60 minutes to complete) for about 45 minutes, until it also started to time out.

This occurred 3 days in a row, until I cancelled the backup task. It has not occurred in the 5 days since.

Today I needed to shut down a host, so I migrated its VMs to another host, which triggered the "freeze".

To get out of the freeze I have to stop the VM, which first times out and then gets SIGKILLed. Sometimes the VM is responsive on some level: I may be able to ping it but not SSH into it, or a web service will respond at the frontend level (i.e. a default 404) but not when it needs to serve a real web page. It feels similar to when storage locks up and can no longer be written to. Unfortunately, since I can't connect to the console, I can't see what the VMs are doing.

When I restart the VM, I find that nothing has been written to the logs inside the VM, which lends some support to the issue being storage related.

Summary of affected system:

- Dell PowerEdge T620

- Xeon E5-2643 v2

- LSI SAS3008 9300-8i

- ZFS RAID10, SATA SSD

- PERC H710P

- Direct passthrough to a VM, HW RAID

- Kernel 5.4.101-1-pve

- 20 VMs on the cluster, 13 on the T620 (Windows 10, Server 2019, Debian, CentOS, pfSense/FreeBSD)

- dmidecode.txt (dmidecode -t bios)

- kern.txt (dmesg/kernel)

- journatlctl.txt

- pveversion.txt (pveversion -v)

- lscpu.txt

I have not upgraded the zpool, so as suggested, I am going to try restarting with 5.4.78-2-pve and re-enabling backups.

Edit: the boot menu only offers 5.4.98-1-pve as an alternative (although the 5.4.78-2 files are present in /boot), so I'll be testing 5.4.98-1-pve.

Edit2: I found how to add a third kernel (add it to "/etc/kernel/pve-efiboot-manual-kernels"), so I'm now running 5.4.78-2-pve.
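The Edit2 step can be made repeatable with a small idempotent helper. This is a minimal sketch, assuming the file holds one kernel version per line. `add_manual_kernel` is a hypothetical name. After changing the list, the kernels on the ESPs still have to be synced. On PVE 6.3, the command for that should be `pve-efiboot-tool refresh`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a kernel version to the manual-kernels list unless it
# is already present (assumes one version per line).
# Usage (as root): add_manual_kernel 5.4.78-2-pve && pve-efiboot-tool refresh
add_manual_kernel() {
  local version="$1"
  local file="${2:-/etc/kernel/pve-efiboot-manual-kernels}"
  touch "$file"
  # -x: match the whole line, -F: treat the version string literally (dots!)
  if grep -qxF "$version" "$file"; then
    return 0
  fi
  printf '%s\n' "$version" >> "$file"
}
```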

Edit3:

**Same issue with kernel 5.4.78-2-pve**

I triggered a full backup. PBS starts at the top of the list, and before it is even 25% through the first VM, I start to get "offline" notices for 4 other VMs.

Repeated for each of the affected VMs:

```
VM 230 qmp command failed - VM 230 qmp command 'query-proxmox-support' failed - unable to connect to VM 230 qmp socket - timeout after 31 retries
```
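When many of these lines pile up, one quick way to see which VMs are affected in a given window is to extract the VMIDs from the QMP timeout messages. This is a minimal sketch. `qmp_timeout_vmids` is a hypothetical name, and it relies only on the message text quoted above.

```shell
#!/usr/bin/env bash
# Hypothetical filter: reads journal/task-log text on stdin and prints each VMID
# that hit "unable to connect to VM <id> qmp socket - timeout", once, sorted.
# Usage: journalctl --since "2021-03-09 04:00" --until "2021-03-09 05:00" | qmp_timeout_vmids
qmp_timeout_vmids() {
  # Field 6 of the matched text is the VMID.
  grep -oE 'unable to connect to VM [0-9]+ qmp socket - timeout' |
    awk '{ print $6 }' |
    sort -un
}
```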


During the night, all VMs on one node froze, and their QMP sockets stopped responding.


The freeze happened during a PBS backup.

What is weird is this output from one of the backup jobs:

Code:

```
2021-03-09 00:35:29 INFO: Starting Backup of VM 156068 (qemu)
2021-03-09 00:35:29 INFO: status = running
2021-03-09 00:35:29 INFO: VM Name: host.something.tld
2021-03-09 00:35:32 INFO: include disk 'scsi0' 'rbd_all:vm-156068-disk-0' 128G
2021-03-09 00:35:32 INFO: backup mode: snapshot
2021-03-09 00:35:32 INFO: ionice priority: 7
2021-03-09 00:35:32 INFO: creating Proxmox Backup Server archive 'vm/156068/2021-03-09T00:35:29Z'
2021-03-09 00:35:35 ERROR: PBS backups are not supported by the running QEMU version. Please make sure you've installed the latest version and the VM has been restarted.
2021-03-09 00:35:35 INFO: aborting backup job
2021-03-09 00:45:35 ERROR: VM 156068 qmp command 'backup-cancel' failed - unable to connect to VM 156068 qmp socket - timeout after 5990 retries
2021-03-09 00:45:35 ERROR: Backup of VM 156068 failed - PBS backups are not supported by the running QEMU version. Please make sure you've installed the latest version and the VM has been restarted.
```

Notice the line

2021-03-09 00:35:35 ERROR: PBS backups are not supported by the running QEMU version. Please make sure you've installed the latest version and the VM has been restarted.

This is a cluster, and in order to upgrade the nodes, the VMs were migrated around but not restarted.

Might there be a problem due to the VMs having been live-migrated to a newer version of QEMU, which, I assume, is not the same as having been cold-booted with the new version of QEMU?
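One thing you can check directly on each node is whether a VM process is still executing a QEMU binary that a later package upgrade replaced on disk. In that case, Linux reports the `/proc/<pid>/exe` link target with a ` (deleted)` suffix. This check only catches VMs that kept running through an in-place upgrade on that node. A VM live-migrated onto an already upgraded node was started there with the new binary. This is a minimal sketch, assuming PVE keeps one `<vmid>.pid` file per running VM in `/var/run/qemu-server`. The function names are hypothetical. Run it as root so that `/proc/<pid>/exe` is readable.

```shell
#!/usr/bin/env bash
# A process whose binary was replaced on disk shows "<path> (deleted)"
# as the target of /proc/<pid>/exe.
exe_is_stale() {
  case "$1" in
    *' (deleted)') return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print the VMIDs whose process still runs a replaced binary.
# Assumes one "<vmid>.pid" file per running VM (default dir /var/run/qemu-server).
list_stale_vms() {
  local dir="${1:-/var/run/qemu-server}" pidfile pid exe
  for pidfile in "$dir"/*.pid; do
    [ -e "$pidfile" ] || continue   # the glob matched nothing
    pid=$(cat "$pidfile")
    # Skip PIDs that are gone or not readable.
    exe=$(readlink "/proc/$pid/exe" 2>/dev/null) || continue
    if exe_is_stale "$exe"; then
      basename "$pidfile" .pid
    fi
  done
}
```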

I have the same problem. Downgrading pve-manager alone did not help me, but downgrading these did:

- pve-manager = 6.3-3

- pve-qemu-kvm = 5.1.0-8

- libproxmox-backup-qemu0 = 1.0.2-1

and reboot
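Before rebooting, it can be worth confirming that the downgrade actually took effect for all three packages. This is a minimal sketch. `check_pins` is a hypothetical name, and the expected versions are the ones listed above. It reads the output of `dpkg-query -W -f='${Package} ${Version}\n' pve-manager pve-qemu-kvm libproxmox-backup-qemu0` on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical check: compare "package version" lines on stdin against the
# versions from the post; prints mismatches and returns non-zero if any.
# Usage:
#   dpkg-query -W -f='${Package} ${Version}\n' pve-manager pve-qemu-kvm libproxmox-backup-qemu0 | check_pins
check_pins() {
  awk '
    BEGIN {
      want["pve-manager"] = "6.3-3"
      want["pve-qemu-kvm"] = "5.1.0-8"
      want["libproxmox-backup-qemu0"] = "1.0.2-1"
    }
    ($1 in want) {
      seen[$1] = 1
      if ($2 != want[$1]) {
        printf "%s: installed %s, expected %s\n", $1, $2, want[$1]
        bad = 1
      }
    }
    END {
      for (p in want) if (!(p in seen)) { printf "%s: not installed\n", p; bad = 1 }
      exit bad
    }
  '
}
```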

Additional information: after upgrading Proxmox (pve-qemu-kvm), a Windows Server 2019 VM lost its network adapter and detected a new one, which lost its network settings. After downgrading, the old adapter came back and the new one disappeared.

And on both versions:

```
# pvesh get /nodes
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
Invalid conversion in sprintf: "%.H" at /usr/share/perl5/PVE/Format.pm line 75.
...
```

```
# pveversion -v
proxmox-ve: 6.3-1 (running kernel: 5.4.101-1-pve)
pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)
pve-kernel-5.4: 6.3-6
pve-kernel-helper: 6.3-6
pve-kernel-5.3: 6.1-6
pve-kernel-5.4.101-1-pve: 5.4.101-1
pve-kernel-5.4.78-2-pve: 5.4.78-2
pve-kernel-5.3.18-3-pve: 5.3.18-3
pve-kernel-5.3.10-1-pve: 5.3.10-1
ceph-fuse: 14.2.16-pve1
corosync: 3.1.0-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: 0.8.35+pve1
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.20-pve1
libproxmox-acme-perl: 1.0.7
libproxmox-backup-qemu0: 1.0.2-1
libpve-access-control: 6.1-3
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.3-4
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.1-1
libpve-storage-perl: 6.3-7
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
proxmox-backup-client: 1.0.8-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.4-5
pve-cluster: 6.2-1
pve-container: 3.3-4
pve-docs: 6.3-1
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-3
pve-firmware: 3.2-2
pve-ha-manager: 3.1-1
pve-i18n: 2.2-2
pve-qemu-kvm: 5.1.0-8
pve-xtermjs: 4.7.0-3
qemu-server: 6.3-5
smartmontools: 7.1-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 2.0.3-pve2
```

Intel CPUs.


Yeah, it would be quite weird if a pve-manager downgrade fixed anything in that direction; it has nothing directly to do with VMs.


- pve-qemu-kvm = 5.1.0-8

- libproxmox-backup-qemu0 = 1.0.2-1

Those sound like better candidates. If you ran into this semi-regularly before, it would be great if you could test this and confirm here that the issues stay gone as long as those packages are downgraded, thanks!


We're currently having a hard time reproducing this issue. If anyone experiences it again (or would be willing to test it on a non-prod system), could you run the following commands once the VMs hang and the journal shows the "QMP timeout" entries, and post the output?

Bash:

```bash
apt install gdb pve-qemu-kvm-dbg # do this beforehand

# find a hung VM's PID via 'pgrep', 'ps' or similar, or 'qm list' while they're still running
# Example for finding the PID of VMID 102: pgrep -f 'kvm -id 102'
VM_PID=1234

gdb attach $VM_PID -ex='thread apply all bt' -ex='quit'
```

Edit: s/bt/thread apply all bt/
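Here are two small additions that may make collecting this easier, as a hedged sketch (the function names are hypothetical). First, picking the PID from the VMID column of `qm list` avoids `pgrep -f 'kvm -id 102'` also matching VM 1020. Second, running gdb with `-p` and `-batch` attaches by PID, executes the `-ex` commands and exits without the pager or the "Quit anyway?" prompt. With `gdb attach $VM_PID`, gdb treats `attach` as a program file name, which is harmless but prints "attach: No such file or directory.".

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the PID column for one VMID from `qm list` output on stdin.
# Usage: qm list | vm_pid_from_qm_list 300
vm_pid_from_qm_list() {
  awk -v id="$1" '$1 == id { print $NF }'
}

# Hypothetical helper: dump backtraces of all threads non-interactively into a file.
# Set DRY_RUN=1 to only print the command.
dump_all_threads() {
  local pid="$1" out="${2:-bt-$1.txt}"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "gdb -p $pid -batch -ex 'thread apply all bt' > $out"
    return 0
  fi
  gdb -p "$pid" -batch -ex 'thread apply all bt' > "$out" 2>&1
}
```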


Here we go, a frozen OPNsense VM.

Code:

```
root@pve04:~# VM_PID=216327
root@pve04:~# gdb attach $VM_PID -ex='bt' -ex='quit'
GNU gdb (Debian 8.2.1-2+b3) 8.2.1
Copyright (C) 2018 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.
Type "show copying" and "show warranty" for details.
This GDB was configured as "x86_64-linux-gnu".
Type "show configuration" for configuration details.
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
Find the GDB manual and other documentation resources online at:
<http://www.gnu.org/software/gdb/documentation/>.
For help, type "help".
Type "apropos word" to search for commands related to "word"...
attach: No such file or directory.
Attaching to process 216327
[New LWP 216328]
[New LWP 216330]
[New LWP 216331]
[New LWP 216332]
[New LWP 216337]
[New LWP 216338]
[New LWP 216339]
[New LWP 216340]
[New LWP 216341]
[New LWP 216342]
[New LWP 216343]
[New LWP 216344]
[New LWP 216345]
[New LWP 216346]
[New LWP 216347]
[New LWP 216348]
[New LWP 216349]
[New LWP 216350]
[New LWP 216402]
[New LWP 216403]
[New LWP 216405]
[Thread debugging using libthread_db enabled]
Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".
__lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103
103 ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S: No such file or directory.
#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103
#1 0x00007fedc38b6714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x561e12a96c78) at ../nptl/pthread_mutex_lock.c:80
#2 0x0000561e103e8e39 in qemu_mutex_lock_impl (mutex=0x561e12a96c78, file=0x561e1054a149 "../monitor/qmp.c", line=80) at ../util/qemu-thread-posix.c:79
#3 0x0000561e1036e686 in monitor_qmp_cleanup_queue_and_resume (mon=0x561e12a96b60) at ../monitor/qmp.c:80
#4 monitor_qmp_event (opaque=0x561e12a96b60, event=<optimized out>) at ../monitor/qmp.c:421
#5 0x0000561e1036c505 in tcp_chr_disconnect_locked (chr=0x561e1254f4b0) at ../chardev/char-socket.c:507
#6 0x0000561e1036c550 in tcp_chr_disconnect (chr=0x561e1254f4b0) at ../chardev/char-socket.c:517
#7 0x0000561e1036c59e in tcp_chr_hup (channel=<optimized out>, cond=<optimized out>, opaque=<optimized out>) at ../chardev/char-socket.c:557
#8 0x00007fedc524cdd8 in g_main_context_dispatch () from /usr/lib/x86_64-linux-gnu/libglib-2.0.so.0
#9 0x0000561e103ed848 in glib_pollfds_poll () at ../util/main-loop.c:221
#10 os_host_main_loop_wait (timeout=<optimized out>) at ../util/main-loop.c:244
#11 main_loop_wait (nonblocking=nonblocking@entry=0) at ../util/main-loop.c:520
#12 0x0000561e101ca0a1 in qemu_main_loop () at ../softmmu/vl.c:1678
#13 0x0000561e0ff3478e in main (argc=<optimized out>, argv=<optimized out>, envp=<optimized out>) at ../softmmu/main.c:50
A debugging session is active.
Inferior 1 [process 216327] will be detached.
Quit anyway? (y or n) y
Detaching from program: /usr/bin/qemu-system-x86_64, process 216327
[Inferior 1 (process 216327) detached]
```


Another one, CentOS7 with qemu-guest-agent.

Code:

```
root@pve04:~# VM_PID=7154
root@pve04:~# gdb attach $VM_PID -ex='bt' -ex='quit'
GNU gdb (Debian 8.2.1-2+b3) 8.2.1
Copyright (C) 2018 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.
Type "show copying" and "show warranty" for details.
This GDB was configured as "x86_64-linux-gnu".
Type "show configuration" for configuration details.
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
Find the GDB manual and other documentation resources online at:
<http://www.gnu.org/software/gdb/documentation/>.
For help, type "help".
Type "apropos word" to search for commands related to "word"...
attach: No such file or directory.
Attaching to process 7154
[New LWP 7155]
[New LWP 7160]
[New LWP 7161]
[New LWP 7162]
[New LWP 7167]
[New LWP 7168]
[New LWP 7169]
[New LWP 7170]
[New LWP 7171]
[New LWP 7172]
[New LWP 7173]
[New LWP 7179]
[New LWP 7180]
[New LWP 7181]
[New LWP 7191]
[New LWP 7192]
[New LWP 7196]
[New LWP 7197]
[New LWP 7458]
[New LWP 7459]
[New LWP 7460]
[New LWP 7461]
[New LWP 7466]
[Thread debugging using libthread_db enabled]
Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".
__lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103
103 ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S: No such file or directory.
#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103
#1 0x00007f6fcc3b0714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x556a467e13a8) at ../nptl/pthread_mutex_lock.c:80
#2 0x0000556a4439ae39 in qemu_mutex_lock_impl (mutex=0x556a467e13a8, file=0x556a444fc149 "../monitor/qmp.c", line=80) at ../util/qemu-thread-posix.c:79
#3 0x0000556a44320686 in monitor_qmp_cleanup_queue_and_resume (mon=0x556a467e1290) at ../monitor/qmp.c:80
#4 monitor_qmp_event (opaque=0x556a467e1290, event=<optimized out>) at ../monitor/qmp.c:421
#5 0x0000556a4431e505 in tcp_chr_disconnect_locked (chr=0x556a4652a4b0) at ../chardev/char-socket.c:507
#6 0x0000556a4431e550 in tcp_chr_disconnect (chr=0x556a4652a4b0) at ../chardev/char-socket.c:517
#7 0x0000556a4431e59e in tcp_chr_hup (channel=<optimized out>, cond=<optimized out>, opaque=<optimized out>) at ../chardev/char-socket.c:557
#8 0x00007f6fcdd46dd8 in g_main_context_dispatch () from /usr/lib/x86_64-linux-gnu/libglib-2.0.so.0
#9 0x0000556a4439f848 in glib_pollfds_poll () at ../util/main-loop.c:221
#10 os_host_main_loop_wait (timeout=<optimized out>) at ../util/main-loop.c:244
#11 main_loop_wait (nonblocking=nonblocking@entry=0) at ../util/main-loop.c:520
#12 0x0000556a4417c0a1 in qemu_main_loop () at ../softmmu/vl.c:1678
#13 0x0000556a43ee678e in main (argc=<optimized out>, argv=<optimized out>, envp=<optimized out>) at ../softmmu/main.c:50
A debugging session is active.
Inferior 1 [process 7154] will be detached.
Quit anyway? (y or n)
```

Thanks, can you please also use this slightly extended version for dumping the backtrace of all threads:

Bash:

```bash
gdb attach $VM_PID -ex='thread apply all bt' -ex='quit'
```

This is still much better than nothing, so thanks!

If it happens again, we'd be really grateful for the `thread apply all bt` output.

I have the same situation: VMs begin hanging while I try to restore any VM onto a ZFS pool.


Here are some logs.

Code:

```
root@px3 ~ # pveversion -v
proxmox-ve: 6.3-1 (running kernel: 5.4.103-1-pve)
pve-manager: 6.3-6 (running version: 6.3-6/2184247e)
pve-kernel-5.4: 6.3-7
pve-kernel-helper: 6.3-7
pve-kernel-5.4.103-1-pve: 5.4.103-1
ceph-fuse: 12.2.11+dfsg1-2.1+b1
corosync: 3.1.0-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: residual config
ifupdown2: 3.0.0-1+pve3
libjs-extjs: 6.0.1-10
libknet1: 1.20-pve1
libproxmox-acme-perl: 1.0.7
libproxmox-backup-qemu0: 1.0.3-1
libpve-access-control: 6.1-3
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.3-5
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.1-1
libpve-storage-perl: 6.3-7
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
openvswitch-switch: 2.12.3-1
proxmox-backup-client: 1.0.10-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.4-6
pve-cluster: 6.2-1
pve-container: 3.3-4
pve-docs: 6.3-1
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-3
pve-firmware: 3.2-2
pve-ha-manager: 3.1-1
pve-i18n: 2.2-2
pve-qemu-kvm: 5.2.0-3
pve-xtermjs: 4.7.0-3
qemu-server: 6.3-8
smartmontools: 7.2-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 2.0.3-pve2
```

About ZFS:

Code:

```
root@px3 ~ # zpool status
  pool: local-zfs
 state: ONLINE
  scan: scrub repaired 0B in 00:00:01 with 0 errors on Wed Mar 17 16:38:56 2021
config:

        NAME                                            STATE     READ WRITE CKSUM
        local-zfs                                       ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            ata-ST10000NM0156-2AA111_ZA27Z0GK           ONLINE       0     0     0
            ata-ST10000NM0156-2AA111_ZA26AQV9           ONLINE       0     0     0
        logs
          nvme-KXG50ZNV512G_TOSHIBA_58MS101VTYST-part1  ONLINE       0     0     0
        cache
          nvme-KXG50ZNV512G_TOSHIBA_58MS101VTYST-part2  ONLINE       0     0     0

errors: No known data errors

root@px3 ~ # zpool iostat -v
                                                  capacity     operations     bandwidth
pool                                            alloc   free   read  write   read  write
----------------------------------------------  -----  -----  -----  -----  -----  -----
local-zfs                                       86.6G  9.01T      1    219  41.4K  8.07M
  mirror                                        86.6G  9.01T      1    219  41.4K  8.06M
    ata-ST10000NM0156-2AA111_ZA27Z0GK               -      -      0     73  20.3K  4.03M
    ata-ST10000NM0156-2AA111_ZA26AQV9               -      -      0    146  21.1K  4.03M
logs                                                -      -      -      -      -      -
  nvme-KXG50ZNV512G_TOSHIBA_58MS101VTYST-part1   232K  69.5G      0      0     44  6.64K
cache                                               -      -      -      -      -      -
  nvme-KXG50ZNV512G_TOSHIBA_58MS101VTYST-part2  40.0G   367G      5     14  39.2K  1.37M
----------------------------------------------  -----  -----  -----  -----  -----  -----
```

Further logs in the next messages.


I just ran a test.


I downgraded the packages pve-qemu-kvm=5.1.0-8 and libproxmox-backup-qemu0=1.0.2-1 and tried to restore the VM again.

And I think I managed to collect debug data for you.

VM 320 is the machine being restored at this moment.

Code:

```
root@px3 ~ # qm list
VMID NAME STATUS MEM(MB) BOOTDISK(GB) PID
300 egorod-gw running 256 1.00 11032
320 VM 320 stopped 128 0.00 0
321 egomn-web running 4096 160.00 11215
390 billing-vma running 1024 32.00 11477
root@px3 ~ # pgrep -f 'kvm -id 300'
11032
root@px3 ~ # VM_PID=11032
root@px3 ~ # gdb attach $VM_PID -ex='thread apply all bt' -ex='quit'
GNU gdb (Debian 8.2.1-2+b3) 8.2.1
Copyright (C) 2018 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.
Type "show copying" and "show warranty" for details.
This GDB was configured as "x86_64-linux-gnu".
Type "show configuration" for configuration details.
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
Find the GDB manual and other documentation resources online at:
<http://www.gnu.org/software/gdb/documentation/>.
For help, type "help".
Type "apropos word" to search for commands related to "word"...
attach: No such file or directory.
Attaching to process 11032
[New LWP 11033]
[New LWP 11082]
[New LWP 11083]
[New LWP 11084]
[New LWP 11085]
[New LWP 11086]
[New LWP 11087]
[New LWP 11088]
[New LWP 11089]
[New LWP 11092]
[Thread debugging using libthread_db enabled]
Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".
0x00007fc7ca80a916 in __GI_ppoll (fds=0x55c2f1511150, nfds=2, timeout=<optimized out>, sigmask=0x0) at ../sysdeps/unix/sysv/linux/ppoll.c:39
39 ../sysdeps/unix/sysv/linux/ppoll.c: No such file or directory.

Thread 11 (Thread 0x7fc7867ff700 (LWP 11092)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f0d92608) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2f0d92618, cond=0x55c2f0d925e0) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f0d925e0, mutex=0x55c2f0d92618) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2ee1fddd9 in ?? ()
#5 0x000055c2ee1fe708 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 10 (Thread 0x7fc79d5fa700 (LWP 11089)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f058b158) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f058b130) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f058b130, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 9 (Thread 0x7fc79ddfb700 (LWP 11088)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f0564578) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f0564550) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f0564550, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
--Type <RET> for more, q to quit, c to continue without paging--c
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 8 (Thread 0x7fc79e5fc700 (LWP 11087)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f053d99c) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f053d970) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f053d970, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 7 (Thread 0x7fc79edfd700 (LWP 11086)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f0516dfc) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f0516dd0) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f0516dd0, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 6 (Thread 0x7fc79f5fe700 (LWP 11085)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f04efa7c) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f04efa50) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f04efa50, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 5 (Thread 0x7fc79fdff700 (LWP 11084)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f04c8bec) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f04c8bc0) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f04c8bc0, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 4 (Thread 0x7fc7bcb29700 (LWP 11083)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f04a2008) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f04a1fe0) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f04a1fe0, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 3 (Thread 0x7fc7bd32a700 (LWP 11082)):
#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x55c2f04512a8) at ../sysdeps/unix/sysv/linux/futex-internal.h:88
#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x55c2ee82f9e0, cond=0x55c2f0451280) at pthread_cond_wait.c:502
#2 __pthread_cond_wait (cond=0x55c2f0451280, mutex=0x55c2ee82f9e0) at pthread_cond_wait.c:655
#3 0x000055c2ee31234b in ?? ()
#4 0x000055c2edf92227 in ?? ()
#5 0x000055c2edf939a8 in ?? ()
#6 0x000055c2ee311c1a in ?? ()
#7 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#8 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 2 (Thread 0x7fc7bdb2b700 (LWP 11033)):
#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103
#1 0x00007fc7ca8e7714 in __GI___pthread_mutex_lock (mutex=0x55c2ee82f9e0) at ../nptl/pthread_mutex_lock.c:80
#2 0x000055c2ee311e13 in ?? ()
#3 0x000055c2edf9389e in ?? ()
#4 0x000055c2ee31a35e in ?? ()
#5 0x000055c2ee311c1a in ?? ()
#6 0x00007fc7ca8e4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486
#7 0x00007fc7ca8154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 1 (Thread 0x7fc7bdc68880 (LWP 11032)):
#0 0x00007fc7ca80a916 in __GI_ppoll (fds=0x55c2f1511150, nfds=2, timeout=<optimized out>, sigmask=0x0) at ../sysdeps/unix/sysv/linux/ppoll.c:39
#1 0x000055c2ee329009 in ?? ()
#2 0x000055c2ee30fb31 in ?? ()
#3 0x000055c2ee30f0f7 in ?? ()
#4 0x000055c2ee278384 in ?? ()
#5 0x000055c2ee0bd32a in ?? ()
#6 0x000055c2ee0c19f0 in ?? ()
#7 0x000055c2ee0c5f1d in ?? ()
#8 0x000055c2ee080e96 in ?? ()
#9 0x000055c2ee07f8ab in ?? ()
#10 0x000055c2ee080e4c in ?? ()
#11 0x000055c2ee07ac7b in ?? ()
#12 0x000055c2ee080e4c in ?? ()
#13 0x000055c2ee07f8ab in ?? ()
#14 0x000055c2ee080e4c in ?? ()
#15 0x000055c2ee081500 in ?? ()
#16 0x000055c2ee0817a3 in ?? ()
#17 0x000055c2ee082a4a in ?? ()
#18 0x000055c2edf6b71b in ?? ()
#19 0x000055c2edfa1b3d in ?? ()
#20 0x000055c2edfa2258 in ?? ()
#21 0x000055c2edea0d7e in ?? ()
#22 0x00007fc7ca74009b in __libc_start_main (main=0x55c2edea0d70, argc=60, argv=0x7ffd90a96518, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7ffd90a96508) at ../csu/libc-start.c:308
#23 0x000055c2edea164a in ?? ()
A debugging session is active.
Inferior 1 [process 11032] will be detached.
```

Quit anyway? (y or n) y

Detaching from program: /usr/bin/qemu-system-x86_64, process 11032

[Inferior 1 (process 11032) detached]
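The `Quit anyway? (y or n)` prompt and the `--Type <RET> for more` pager lines in these dumps come from running gdb interactively. Below is a minimal sketch for collecting the same all-thread backtrace non-interactively. It assumes the QEMU PID can be read from `/var/run/qemu-server/<vmid>.pid`, which is the `-pidfile` path qemu-server passes to kvm in the VM 300 start command in this thread. `vm_pid`, `dump_bt` and the `PIDDIR` override are hypothetical helper names, not Proxmox tools.

```shell
#!/bin/sh
# Sketch: dump all-thread backtraces of a running PVE VM's QEMU process
# without gdb's interactive prompts.
# Assumption: the QEMU PID is in /var/run/qemu-server/<vmid>.pid (the
# -pidfile path from the kvm command line). PIDDIR is a hypothetical
# override so the lookup can be pointed at another directory.
PIDDIR="${PIDDIR:-/var/run/qemu-server}"

# Print the QEMU PID for a VMID, or fail if the pidfile is missing.
vm_pid() {
    pidfile="$PIDDIR/$1.pid"
    [ -r "$pidfile" ] || { echo "no pidfile for VM $1" >&2; return 1; }
    cat "$pidfile"
}

# -p attaches to a PID. Plain "gdb attach PID" makes gdb treat "attach" as
# the program file ("attach: No such file or directory."). -batch exits
# after the -ex commands and disables paging and confirmation prompts.
dump_bt() {
    pid=$(vm_pid "$1") || return 1
    gdb -p "$pid" -batch -ex 'thread apply all bt'
}
```

Usage (as root): `dump_bt 300 > /tmp/vm300-bt.txt 2>&1`. Batch mode also sets an unlimited terminal width, so each frame stays on one line.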

BTW, VM 300 currently cannot start while a restore is in progress, yet it is shown as running.

Code:

root@px3 ~ # qm stop 300

root@px3 ~ # qm start 300

start failed: command '/usr/bin/kvm -id 300 -name egorod-gw -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/300.qmp,server,nowait' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/300.pid -daemonize -smp '8,sockets=1,cores=8,maxcpus=8' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vnc unix:/var/run/qemu-server/300.vnc,password -cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep -m 1024 -device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' -device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' -device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' -device 'usb-tablet,id=tablet,bus=uhci.0,port=1' -device 'VGA,id=vga,bus=pci.0,addr=0x2' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:377e86fd838' -drive 'file=/dev/zvol/local-zfs/vm-300-disk-0,if=none,id=drive-ide0,cache=writeback,format=raw,aio=threads,detect-zeroes=on' -device 'ide-hd,bus=ide.0,unit=0,drive=drive-ide0,id=ide0,bootindex=100' -netdev 'type=tap,id=net0,ifname=tap300i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=00:50:56:00:86:28,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=300' -netdev 'type=tap,id=net2,ifname=tap300i2,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=7E:29:56:1A:4B:CA,netdev=net2,bus=pci.0,addr=0x14,id=net2,bootindex=301' -netdev 'type=tap,id=net3,ifname=tap300i3,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=EE:F6:DE:40:46:7E,netdev=net3,bus=pci.0,addr=0x15,id=net3,bootindex=302' -machine 'type=pc+pve0'' failed: got timeout

root@px3 ~ # qm list

VMID NAME STATUS MEM(MB) BOOTDISK(GB) PID

300 egorod-gw running 1024 1.00 29462

320 VM 320 stopped 128 0.00 0

321 egomn-web running 4096 160.00 11215

390 billing-vma running 1024 32.00 11477

root@px3 ~ # VM_PID=29462

root@px3 ~ # gdb attach $VM_PID -ex='thread apply all bt' -ex='quit'

GNU gdb (Debian 8.2.1-2+b3) 8.2.1

Copyright (C) 2018 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Type "show copying" and "show warranty" for details.

This GDB was configured as "x86_64-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

<http://www.gnu.org/software/gdb/bugs/>.

Find the GDB manual and other documentation resources online at:

<http://www.gnu.org/software/gdb/documentation/>.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

attach: No such file or directory.

Attaching to process 29462

[New LWP 29463]

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

0x00007f7957067d0e in __libc_open64 (file=0x558d72ff8750 "/dev/zvol/local-zfs/vm-300-disk-0", oflag=526336) at ../sysdeps/unix/sysv/linux/open64.c:48

48 ../sysdeps/unix/sysv/linux/open64.c: No such file or directory.

Thread 2 (Thread 0x7f794a2a4700 (LWP 29463)):

#0 syscall () at ../sysdeps/unix/sysv/linux/x86_64/syscall.S:38

#1 0x0000558d70c509cb in ?? ()

#2 0x0000558d70c5833a in ?? ()

#3 0x0000558d70c4fc1a in ?? ()

#4 0x00007f795705dfa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#5 0x00007f7956f8e4cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 1 (Thread 0x7f794a3e1880 (LWP 29462)):

#0 0x00007f7957067d0e in __libc_open64 (file=0x558d72ff8750 "/dev/zvol/local-zfs/vm-300-disk-0", oflag=526336) at ../sysdeps/unix/sysv/linux/open64.c:48

#1 0x0000558d70c4ac34 in ?? ()

#2 0x0000558d70ba9b18 in ?? ()

#3 0x0000558d70b53fee in ?? ()

#4 0x0000558d70b5b0df in ?? ()

#5 0x0000558d70b5c37a in ?? ()

#6 0x0000558d70b5bbcb in ?? ()

#7 0x0000558d70b5c653 in ?? ()

#8 0x0000558d70ba6596 in ?? ()

#9 0x0000558d709488fb in ?? ()

#10 0x0000558d709498f6 in ?? ()

#11 0x0000558d708dea21 in ?? ()

#12 0x0000558d70c56dca in ?? ()

#13 0x0000558d708e405a in ?? ()

#14 0x0000558d707ded79 in ?? ()

#15 0x00007f7956eb909b in __libc_start_main (main=0x558d707ded70, argc=60, argv=0x7ffed8b049d8, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7ffed8b049c8)

at ../csu/libc-start.c:308

#16 0x0000558d707df64a in ?? ()

A debugging session is active.

Inferior 1 [process 29462] will be detached.

Attaching to process 1265699

[New LWP 1265700]

[New LWP 1265788]

[New LWP 1265789]

[New LWP 1265790]

[New LWP 1265791]

[New LWP 1265795]

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

__lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

103 ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S: No such file or directory.

Thread 7 (Thread 0x7fd21cdff700 (LWP 1265795)):

#0 futex_wait_cancelable (private=0, expected=0, futex_word=0x557fcc9b6838)

at ../sysdeps/unix/sysv/linux/futex-internal.h:88

#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x557fcc9b6848, cond=0x557fcc9b6810) at pthread_cond_wait.c:502

#2 __pthread_cond_wait (cond=cond@entry=0x557fcc9b6810, mutex=mutex@entry=0x557fcc9b6848) at pthread_cond_wait.c:655

#3 0x0000557fcab2839f in qemu_cond_wait_impl (cond=0x557fcc9b6810, mutex=0x557fcc9b6848,

file=0x557fcab51486 "../ui/vnc-jobs.c", line=215) at ../util/qemu-thread-posix.c:174

#4 0x0000557fca6a371d in vnc_worker_thread_loop (queue=queue@entry=0x557fcc9b6810) at ../ui/vnc-jobs.c:215

#5 0x0000557fca6a3fb8 in vnc_worker_thread (arg=arg@entry=0x557fcc9b6810) at ../ui/vnc-jobs.c:325

#6 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#7 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#8 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 6 (Thread 0x7fd21f5fe700 (LWP 1265791)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fd338ce7714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x557fcb029d80 <qemu_global_mutex>)

at ../nptl/pthread_mutex_lock.c:80

#2 0x0000557fcab27e39 in qemu_mutex_lock_impl (mutex=0x557fcb029d80 <qemu_global_mutex>,

file=0x557fcac28cca "../accel/kvm/kvm-all.c", line=2596) at ../util/qemu-thread-posix.c:79

#3 0x0000557fca96a66f in qemu_mutex_lock_iothread_impl (file=file@entry=0x557fcac28cca "../accel/kvm/kvm-all.c",

line=line@entry=2596) at ../softmmu/cpus.c:485

#4 0x0000557fca8ff5b2 in kvm_cpu_exec (cpu=cpu@entry=0x557fcb662060) at ../accel/kvm/kvm-all.c:2596

#5 0x0000557fca918725 in kvm_vcpu_thread_fn (arg=arg@entry=0x557fcb662060) at ../accel/kvm/kvm-cpus.c:49

#6 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#7 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#8 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 5 (Thread 0x7fd21fdff700 (LWP 1265790)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fd338ce7714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x557fcb029d80 <qemu_global_mutex>)

at ../nptl/pthread_mutex_lock.c:80

#2 0x0000557fcab27e39 in qemu_mutex_lock_impl (mutex=0x557fcb029d80 <qemu_global_mutex>,

file=0x557fcac43528 "../softmmu/physmem.c", line=2729) at ../util/qemu-thread-posix.c:79

#3 0x0000557fca96a66f in qemu_mutex_lock_iothread_impl (file=file@entry=0x557fcac43528 "../softmmu/physmem.c",

line=line@entry=2729) at ../softmmu/cpus.c:485

#4 0x0000557fca95338e in prepare_mmio_access (mr=<optimized out>) at ../softmmu/physmem.c:2729

#5 0x0000557fca955dab in flatview_read_continue (fv=fv@entry=0x7fd218025100, addr=addr@entry=57478, attrs=...,

ptr=ptr@entry=0x7fd33aa9b000, len=len@entry=2, addr1=<optimized out>, l=<optimized out>, mr=0x557fcc35c950)

at ../softmmu/physmem.c:2820

#6 0x0000557fca956013 in flatview_read (fv=0x7fd218025100, addr=addr@entry=57478, attrs=attrs@entry=...,

buf=buf@entry=0x7fd33aa9b000, len=len@entry=2) at ../softmmu/physmem.c:2862

#7 0x0000557fca956150 in address_space_read_full (as=0x557fcb029aa0 <address_space_io>, addr=57478, attrs=...,

buf=0x7fd33aa9b000, len=2) at ../softmmu/physmem.c:2875

#8 0x0000557fca9562b5 in address_space_rw (as=<optimized out>, addr=addr@entry=57478, attrs=..., attrs@entry=...,

buf=<optimized out>, len=len@entry=2, is_write=is_write@entry=false) at ../softmmu/physmem.c:2903

#9 0x0000557fca8ff764 in kvm_handle_io (count=1, size=2, direction=<optimized out>, data=<optimized out>,

attrs=..., port=57478) at ../accel/kvm/kvm-all.c:2285

#10 kvm_cpu_exec (cpu=cpu@entry=0x557fcb63b820) at ../accel/kvm/kvm-all.c:2531

#11 0x0000557fca918725 in kvm_vcpu_thread_fn (arg=arg@entry=0x557fcb63b820) at ../accel/kvm/kvm-cpus.c:49

#12 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#13 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#14 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 4 (Thread 0x7fd32cbc7700 (LWP 1265789)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fd338ce7714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x557fcb029d80 <qemu_global_mutex>) at ../nptl/pthread_mutex_lock.c:80

#2 0x0000557fcab27e39 in qemu_mutex_lock_impl (mutex=0x557fcb029d80 <qemu_global_mutex>, file=0x557fcac43528 "../softmmu/physmem.c", line=2729) at ../util/qemu-thread-posix.c:79

#3 0x0000557fca96a66f in qemu_mutex_lock_iothread_impl (file=file@entry=0x557fcac43528 "../softmmu/physmem.c", line=line@entry=2729) at ../softmmu/cpus.c:485

#4 0x0000557fca95338e in prepare_mmio_access (mr=<optimized out>) at ../softmmu/physmem.c:2729

#5 0x0000557fca953479 in flatview_write_continue (fv=fv@entry=0x7fd218022940, addr=addr@entry=4272230712, attrs=..., ptr=ptr@entry=0x7fd33aa9d028, len=len@entry=4, addr1=<optimized out>, l=<optimized out>, mr=0x557fcc81f610) at ../softmmu/physmem.c:2754

#6 0x0000557fca953626 in flatview_write (fv=0x7fd218022940, addr=addr@entry=4272230712, attrs=attrs@entry=..., buf=buf@entry=0x7fd33aa9d028, len=len@entry=4) at ../softmmu/physmem.c:2799

#7 0x0000557fca956220 in address_space_write (as=0x557fcb029a40 <address_space_memory>, addr=4272230712, attrs=..., buf=buf@entry=0x7fd33aa9d028, len=4) at ../softmmu/physmem.c:2891

#8 0x0000557fca9562aa in address_space_rw (as=<optimized out>, addr=<optimized out>, attrs=..., attrs@entry=..., buf=buf@entry=0x7fd33aa9d028, len=<optimized out>, is_write=<optimized out>) at ../softmmu/physmem.c:2901

#9 0x0000557fca8ff68a in kvm_cpu_exec (cpu=cpu@entry=0x557fcb614560) at ../accel/kvm/kvm-all.c:2541

#10 0x0000557fca918725 in kvm_vcpu_thread_fn (arg=arg@entry=0x557fcb614560) at ../accel/kvm/kvm-cpus.c:49

#11 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#12 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#13 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 3 (Thread 0x7fd32d3c8700 (LWP 1265788)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fd338ce7714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x557fcb029d80 <qemu_global_mutex>) at ../nptl/pthread_mutex_lock.c:80

#2 0x0000557fcab27e39 in qemu_mutex_lock_impl (mutex=0x557fcb029d80 <qemu_global_mutex>, file=0x557fcac28cca "../accel/kvm/kvm-all.c", line=2596) at ../util/qemu-thread-posix.c:79

#3 0x0000557fca96a66f in qemu_mutex_lock_iothread_impl (file=file@entry=0x557fcac28cca "../accel/kvm/kvm-all.c", line=line@entry=2596) at ../softmmu/cpus.c:485

#4 0x0000557fca8ff5b2 in kvm_cpu_exec (cpu=cpu@entry=0x557fcb5c39c0) at ../accel/kvm/kvm-all.c:2596

#5 0x0000557fca918725 in kvm_vcpu_thread_fn (arg=arg@entry=0x557fcb5c39c0) at ../accel/kvm/kvm-cpus.c:49

#6 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#7 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#8 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 2 (Thread 0x7fd32dcca700 (LWP 1265700)):

#0 syscall () at ../sysdeps/unix/sysv/linux/x86_64/syscall.S:38

#1 0x0000557fcab28abb in qemu_futex_wait (val=<optimized out>, f=<optimized out>) at ../util/qemu-thread-posix.c:456

#2 qemu_event_wait (ev=ev@entry=0x557fcb045fe8 <rcu_call_ready_event>) at ../util/qemu-thread-posix.c:460

#3 0x0000557fcab1704a in call_rcu_thread (opaque=opaque@entry=0x0) at ../util/rcu.c:258

#4 0x0000557fcab27c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fd338ce4fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fd338c154cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 1 (Thread 0x7fd32de28340 (LWP 1265699)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fd338ce7714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x557fcb4e08e8) at ../nptl/pthread_mutex_lock.c:80

#2 0x0000557fcab27e39 in qemu_mutex_lock_impl (mutex=0x557fcb4e08e8, file=0x557fcac89149 "../monitor/qmp.c", line=80) at ../util/qemu-thread-posix.c:79

#3 0x0000557fcaaad686 in monitor_qmp_cleanup_queue_and_resume (mon=0x557fcb4e07d0) at ../monitor/qmp.c:80

#4 monitor_qmp_event (opaque=0x557fcb4e07d0, event=<optimized out>) at ../monitor/qmp.c:421

#5 0x0000557fcaaab505 in tcp_chr_disconnect_locked (chr=0x557fcb251fa0) at ../chardev/char-socket.c:507

#6 0x0000557fcaaab550 in tcp_chr_disconnect (chr=0x557fcb251fa0) at ../chardev/char-socket.c:517

#7 0x0000557fcaaab59e in tcp_chr_hup (channel=<optimized out>, cond=<optimized out>, opaque=<optimized out>) at ../chardev/char-socket.c:557

#8 0x00007fd33a5e0dd8 in g_main_context_dispatch () from /lib/x86_64-linux-gnu/libglib-2.0.so.0

#9 0x0000557fcab2c848 in glib_pollfds_poll () at ../util/main-loop.c:221

#10 os_host_main_loop_wait (timeout=<optimized out>) at ../util/main-loop.c:244

#11 main_loop_wait (nonblocking=nonblocking@entry=0) at ../util/main-loop.c:520

#12 0x0000557fca9090a1 in qemu_main_loop () at ../softmmu/vl.c:1678

#13 0x0000557fca67378e in main (argc=<optimized out>, argv=<optimized out>, envp=<optimized out>) at ../softmmu/main.c:50

proxmox-ve: 6.3-1 (running kernel: 5.4.103-1-pve)

pve-manager: 6.3-6 (running version: 6.3-6/2184247e)

pve-kernel-5.4: 6.3-7

pve-kernel-helper: 6.3-7

pve-kernel-5.4.103-1-pve: 5.4.103-1

pve-kernel-5.4.98-1-pve: 5.4.98-1

pve-kernel-5.4.34-1-pve: 5.4.34-2

ceph: 14.2.16-pve1

ceph-fuse: 14.2.16-pve1

corosync: 3.1.0-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: residual config

ifupdown2: 3.0.0-1+pve3

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-5

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-7

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.0.9-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-6

pve-cluster: 6.2-1

pve-container: 3.3-4

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-2

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.2.0-3

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-7

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1
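In the trace for process 1265699, vCPU threads 3 to 6 are all waiting for `qemu_global_mutex`. Meanwhile, the main loop (Thread 1) is waiting for a different mutex inside `monitor_qmp_cleanup_queue_and_resume()` while handling a socket hang-up (`tcp_chr_hup` → `monitor_qmp_event`). For long dumps, a rough awk filter can list each thread's `qemu_mutex_lock_impl()` wait and its call site. This is a hypothetical reading aid (`summarize_locks` is not a Proxmox or QEMU tool), not a deadlock detector. It assumes each frame is on one line, so wrapped frames like some in the paste would be misparsed.

```shell
#!/bin/sh
# Sketch: list threads in a gdb "thread apply all bt" dump that are blocked
# in qemu_mutex_lock_impl(), with the mutex and the locking call site.
# Assumes unwrapped output (one frame per line) in the format shown above.
summarize_locks() {
    awk '
    /^Thread [0-9]+ / {
        # e.g. Thread 6 (Thread 0x7fd21f5fe700 (LWP 1265791)):
        thread = $2
        lwp = $0; sub(/.*\(LWP /, "", lwp); sub(/\).*/, "", lwp)
        next
    }
    /qemu_mutex_lock_impl \(mutex=/ {
        # mutex address, plus symbol name when gdb shows one
        m = $0; sub(/.*qemu_mutex_lock_impl \(mutex=/, "", m); sub(/,.*/, "", m)
        # source file and line that requested the lock
        f = $0; sub(/.*file=0x[0-9a-f]+ "/, "", f); sub(/".*/, "", f)
        l = $0; sub(/.*line=/, "", l); sub(/\).*/, "", l)
        printf "Thread %s (LWP %s) waits on %s at %s:%s\n", thread, lwp, m, f, l
    }'
}
```

Usage: `summarize_locks < vm-bt.txt`.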

Another one crashed:

Attaching to process 1306954

[New LWP 1306955]

[New LWP 1306979]

[New LWP 1306981]

[New LWP 1306982]

[New LWP 1306983]

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

__lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

103 ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S: No such file or directory.

Thread 6 (Thread 0x7fa5963fd700 (LWP 1306983)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fa5a8335714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x55722a977d80 <qemu_global_mutex>)

at ../nptl/pthread_mutex_lock.c:80

#2 0x000055722a475e39 in qemu_mutex_lock_impl (mutex=0x55722a977d80 <qemu_global_mutex>,

file=0x55722a591528 "../softmmu/physmem.c", line=2729) at ../util/qemu-thread-posix.c:79

#3 0x000055722a2b866f in qemu_mutex_lock_iothread_impl (file=file@entry=0x55722a591528 "../softmmu/physmem.c",

line=line@entry=2729) at ../softmmu/cpus.c:485

#4 0x000055722a2a138e in prepare_mmio_access (mr=<optimized out>) at ../softmmu/physmem.c:2729

#5 0x000055722a2a3dab in flatview_read_continue (fv=fv@entry=0x7fa3881fbad0, addr=addr@entry=375, attrs=...,

ptr=ptr@entry=0x7fa59d422000, len=len@entry=1, addr1=<optimized out>, l=<optimized out>, mr=0x55722cadd9d0)

at ../softmmu/physmem.c:2820

#6 0x000055722a2a4013 in flatview_read (fv=0x7fa3881fbad0, addr=addr@entry=375, attrs=attrs@entry=...,

buf=buf@entry=0x7fa59d422000, len=len@entry=1) at ../softmmu/physmem.c:2862

#7 0x000055722a2a4150 in address_space_read_full (as=0x55722a977aa0 <address_space_io>, addr=375, attrs=...,

buf=0x7fa59d422000, len=1) at ../softmmu/physmem.c:2875

#8 0x000055722a2a42b5 in address_space_rw (as=<optimized out>, addr=addr@entry=375, attrs=..., attrs@entry=...,

buf=<optimized out>, len=len@entry=1, is_write=is_write@entry=false) at ../softmmu/physmem.c:2903

#9 0x000055722a24d764 in kvm_handle_io (count=1, size=1, direction=<optimized out>, data=<optimized out>,

attrs=..., port=375) at ../accel/kvm/kvm-all.c:2285

#10 kvm_cpu_exec (cpu=cpu@entry=0x55722c05a960) at ../accel/kvm/kvm-all.c:2531

#11 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c05a960) at ../accel/kvm/kvm-cpus.c:49

#12 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#13 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#14 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 5 (Thread 0x7fa596bfe700 (LWP 1306982)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722c04c5b0, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722c04c5b0) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c04c5b0) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 4 (Thread 0x7fa5973ff700 (LWP 1306981)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722cc93770, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722cc93770) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722cc93770) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 3 (Thread 0x7fa597fff700 (LWP 1306979)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722c015800, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722c015800) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c015800) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 2 (Thread 0x7fa59d318700 (LWP 1306955)):

#0 syscall () at ../sysdeps/unix/sysv/linux/x86_64/syscall.S:38

#1 0x000055722a476abb in qemu_futex_wait (val=<optimized out>, f=<optimized out>) at ../util/qemu-thread-posix.c:456

#2 qemu_event_wait (ev=ev@entry=0x55722a993fe8 <rcu_call_ready_event>) at ../util/qemu-thread-posix.c:460

#3 0x000055722a46504a in call_rcu_thread (opaque=opaque@entry=0x0) at ../util/rcu.c:258

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 1 (Thread 0x7fa59d476340 (LWP 1306954)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fa5a8335714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x55722bf3a848) at ../nptl/pthread_mutex_lock.c:80

#2 0x000055722a475e39 in qemu_mutex_lock_impl (mutex=0x55722bf3a848, file=0x55722a5d7149 "../monitor/qmp.c", line=80) at ../util/qemu-thread-posix.c:79

#3 0x000055722a3fb686 in monitor_qmp_cleanup_queue_and_resume (mon=0x55722bf3a730) at ../monitor/qmp.c:80

#4 monitor_qmp_event (opaque=0x55722bf3a730, event=<optimized out>) at ../monitor/qmp.c:421

#5 0x000055722a3f9505 in tcp_chr_disconnect_locked (chr=0x55722bc68fa0) at ../chardev/char-socket.c:507

#6 0x000055722a3f9550 in tcp_chr_disconnect (chr=0x55722bc68fa0) at ../chardev/char-socket.c:517

#7 0x000055722a3f959e in tcp_chr_hup (channel=<optimized out>, cond=<optimized out>, opaque=<optimized out>) at ../chardev/char-socket.c:557

#8 0x00007fa5a9c2edd8 in g_main_context_dispatch () from /lib/x86_64-linux-gnu/libglib-2.0.so.0

#9 0x000055722a47a848 in glib_pollfds_poll () at ../util/main-loop.c:221

#10 os_host_main_loop_wait (timeout=<optimized out>) at ../util/main-loop.c:244

#11 main_loop_wait (nonblocking=nonblocking@entry=0) at ../util/main-loop.c:520

#12 0x000055722a2570a1 in qemu_main_loop () at ../softmmu/vl.c:1678

#13 0x0000557229fc178e in main (argc=<optimized out>, argv=<optimized out>, envp=<optimized out>) at ../softmmu/main.c:50

Attaching to process 1306954

[New LWP 1306955]

[New LWP 1306979]

[New LWP 1306981]

[New LWP 1306982]

[New LWP 1306983]

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

__lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

103 ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S: No such file or directory.

Thread 6 (Thread 0x7fa5963fd700 (LWP 1306983)):

#0 __lll_lock_wait () at ../sysdeps/unix/sysv/linux/x86_64/lowlevellock.S:103

#1 0x00007fa5a8335714 in __GI___pthread_mutex_lock (mutex=mutex@entry=0x55722a977d80 <qemu_global_mutex>) at ../nptl/pthread_mutex_lock.c:80

#2 0x000055722a475e39 in qemu_mutex_lock_impl (mutex=0x55722a977d80 <qemu_global_mutex>, file=0x55722a591528 "../softmmu/physmem.c", line=2729) at ../util/qemu-thread-posix.c:79

#3 0x000055722a2b866f in qemu_mutex_lock_iothread_impl (file=file@entry=0x55722a591528 "../softmmu/physmem.c", line=line@entry=2729) at ../softmmu/cpus.c:485

#4 0x000055722a2a138e in prepare_mmio_access (mr=<optimized out>) at ../softmmu/physmem.c:2729

#5 0x000055722a2a3dab in flatview_read_continue (fv=fv@entry=0x7fa3881fbad0, addr=addr@entry=375, attrs=..., ptr=ptr@entry=0x7fa59d422000, len=len@entry=1, addr1=<optimized out>, l=<optimized out>, mr=0x55722cadd9d0) at ../softmmu/physmem.c:2820

#6 0x000055722a2a4013 in flatview_read (fv=0x7fa3881fbad0, addr=addr@entry=375, attrs=attrs@entry=..., buf=buf@entry=0x7fa59d422000, len=len@entry=1) at ../softmmu/physmem.c:2862

#7 0x000055722a2a4150 in address_space_read_full (as=0x55722a977aa0 <address_space_io>, addr=375, attrs=..., buf=0x7fa59d422000, len=1) at ../softmmu/physmem.c:2875

#8 0x000055722a2a42b5 in address_space_rw (as=<optimized out>, addr=addr@entry=375, attrs=..., attrs@entry=..., buf=<optimized out>, len=len@entry=1, is_write=is_write@entry=false) at ../softmmu/physmem.c:2903

#9 0x000055722a24d764 in kvm_handle_io (count=1, size=1, direction=<optimized out>, data=<optimized out>, attrs=..., port=375) at ../accel/kvm/kvm-all.c:2285

#10 kvm_cpu_exec (cpu=cpu@entry=0x55722c05a960) at ../accel/kvm/kvm-all.c:2531

#11 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c05a960) at ../accel/kvm/kvm-cpus.c:49

#12 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#13 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#14 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 5 (Thread 0x7fa596bfe700 (LWP 1306982)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722c04c5b0, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722c04c5b0) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c04c5b0) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 4 (Thread 0x7fa5973ff700 (LWP 1306981)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722cc93770, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722cc93770) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722cc93770) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 3 (Thread 0x7fa597fff700 (LWP 1306979)):

#0 0x00007fa5a825a427 in ioctl () at ../sysdeps/unix/syscall-template.S:78

#1 0x000055722a24d36c in kvm_vcpu_ioctl (cpu=cpu@entry=0x55722c015800, type=type@entry=44672) at ../accel/kvm/kvm-all.c:2654

#2 0x000055722a24d4b2 in kvm_cpu_exec (cpu=cpu@entry=0x55722c015800) at ../accel/kvm/kvm-all.c:2491

#3 0x000055722a266725 in kvm_vcpu_thread_fn (arg=arg@entry=0x55722c015800) at ../accel/kvm/kvm-cpus.c:49

#4 0x000055722a475c2a in qemu_thread_start (args=<optimized out>) at ../util/qemu-thread-posix.c:521

#5 0x00007fa5a8332fa3 in start_thread (arg=<optimized out>) at pthread_create.c:486

#6 0x00007fa5a82634cf in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 2 (Thread 0x7fa59d318700 (LWP 1306955)):

#0 syscall () at ../sysdeps/unix/sysv/linux/x86_64/syscall.S:38

#1 0x000055722a476abb in qemu_futex_wait (val=<optimized out>, f=<optimized out>) at ../util/qemu-thread-posix.c:456