I have 18 nodes in a Proxmox cluster spread over two racks: rack A (two switches, for ring0 and ring1) and rack B (two switches, for ring0 and ring1). Ring0 and ring1 run on two separate VLANs.

I moved node04 from rack A to rack C (also two switches, for ring0 and ring1). After I powered node04 back on, all 18 nodes rebooted.

Has anyone run into this before? Any help would be appreciated.

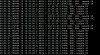

Some logs:

```
Dec 4 14:20:17 node04 corosync[2420]: [SERV ] Service engine loaded: corosync watchdog service [7]
Dec 4 14:20:17 node04 corosync[2420]: [QUORUM] Using quorum provider corosync_votequorum
Dec 4 14:20:17 node04 corosync[2420]: [SERV ] Service engine loaded: corosync vote quorum service v1.0 [5]
Dec 4 14:20:17 node04 corosync[2420]: [QB ] server name: votequorum
Dec 4 14:20:17 node04 corosync[2420]: [SERV ] Service engine loaded: corosync cluster quorum service v0.1 [3]
Dec 4 14:20:17 node04 corosync[2420]: [QB ] server name: quorum
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [TOTEM ] A new membership (7.7e4) was formed. Members joined: 7
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [CPG ] downlist left_list: 0 received
Dec 4 14:20:17 node04 systemd[1]: Started Corosync Cluster Engine.
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 15 (passive) best link: 0 (pri: 0)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 15 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 15 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 15 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [QUORUM] Members[1]: 7
Dec 4 14:20:17 node04 corosync[2420]: [MAIN ] Completed service synchronization, ready to provide service.
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 1 has no active links
```
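For reference on the quorum side: with the `corosync_votequorum` provider loaded above, quorum requires a strict majority of the expected votes (`expected_votes / 2 + 1`, integer division). Assuming every node keeps the default of one vote, with no QDevice and no `expected_votes` override (none of this is visible in the logs), the 18-node cluster needs 10 votes. So the single-member membership node04 formed at boot (`Members[1]: 7`) is not quorate, which is consistent with the later `wait_for_quorum` and `waiting for quorum ...` messages. A minimal Python sketch of that arithmetic:

```python
def votequorum_threshold(expected_votes: int) -> int:
    """Votes needed for quorum: a strict majority (integer division, then + 1)."""
    return expected_votes // 2 + 1


def is_quorate(member_votes: int, expected_votes: int) -> bool:
    """True if the votes present reach the quorum threshold."""
    return member_votes >= votequorum_threshold(expected_votes)


# Assumption: 18 nodes with 1 vote each, no QDevice, no expected_votes override.
EXPECTED = 18

# Node IDs taken from the corosync lines in this post.
boot_partition = [7]                                   # "Members[1]: 7"
full_membership = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10,
                   11, 12, 13, 14, 15, 16, 17, 19]     # "Members[18]: ..."

print(votequorum_threshold(EXPECTED))               # 10
print(is_quorate(len(boot_partition), EXPECTED))    # False
print(is_quorate(len(full_membership), EXPECTED))   # True
```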

```
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 2 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 2 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 5 has no active links
Dec 4 14:20:18 node04 pve-firewall[2438]: starting server
Dec 4 14:20:18 node04 pvestatd[2439]: starting server
Dec 4 14:20:18 node04 systemd[1]: Started PVE Status Daemon.
Dec 4 14:20:18 node04 systemd[1]: Started Proxmox VE firewall.
Dec 4 14:20:18 node04 pvefw-logger[1082]: received terminate request (signal)
Dec 4 14:20:18 node04 pvefw-logger[1082]: stopping pvefw logger
Dec 4 14:20:18 node04 systemd[1]: Stopping Proxmox VE firewall logger...
Dec 4 14:20:18 node04 systemd[1]: pvefw-logger.service: Succeeded.
Dec 4 14:20:18 node04 systemd[1]: Stopped Proxmox VE firewall logger.
Dec 4 14:20:18 node04 systemd[1]: Starting Proxmox VE firewall logger...
Dec 4 14:20:18 node04 pvefw-logger[2482]: starting pvefw logger
Dec 4 14:20:18 node04 systemd[1]: Started Proxmox VE firewall logger.
Dec 4 14:20:18 node04 kernel: [ 17.121760] tg3 0000:01:00.0 eno1: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:18 node04 kernel: [ 17.121767] tg3 0000:01:00.0 eno1: Flow control is on for TX and on for RX
Dec 4 14:20:18 node04 kernel: [ 17.121769] tg3 0000:01:00.0 eno1: EEE is disabled
Dec 4 14:20:18 node04 kernel: [ 17.121795] IPv6: ADDRCONF(NETDEV_CHANGE): eno1: link becomes ready
Dec 4 14:20:18 node04 pvedaemon[2485]: starting server
Dec 4 14:20:18 node04 pvedaemon[2485]: starting 3 worker(s)
Dec 4 14:20:18 node04 pvedaemon[2485]: worker 2486 started
Dec 4 14:20:18 node04 pvedaemon[2485]: worker 2487 started
Dec 4 14:20:18 node04 pvedaemon[2485]: worker 2488 started
Dec 4 14:20:18 node04 systemd[1]: Started PVE API Daemon.
Dec 4 14:20:18 node04 systemd[1]: Starting PVE Cluster Resource Manager Daemon...
Dec 4 14:20:18 node04 systemd[1]: Starting PVE API Proxy Server...
Dec 4 14:20:18 node04 kernel: [ 17.520089] tg3 0000:01:00.1 eno2: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:18 node04 kernel: [ 17.520098] tg3 0000:01:00.1 eno2: Flow control is on for TX and on for RX
Dec 4 14:20:18 node04 kernel: [ 17.520100] tg3 0000:01:00.1 eno2: EEE is disabled
Dec 4 14:20:18 node04 kernel: [ 17.520127] IPv6: ADDRCONF(NETDEV_CHANGE): eno2: link becomes ready
Dec 4 14:20:19 node04 kernel: [ 17.613462] tg3 0000:02:00.0 eno3: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:19 node04 kernel: [ 17.613470] tg3 0000:02:00.0 eno3: Flow control is on for TX and on for RX
Dec 4 14:20:19 node04 kernel: [ 17.613471] tg3 0000:02:00.0 eno3: EEE is disabled
Dec 4 14:20:19 node04 kernel: [ 17.613497] IPv6: ADDRCONF(NETDEV_CHANGE): eno3: link becomes ready
Dec 4 14:20:19 node04 pve-ha-crm[2492]: starting server
```
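The kernel lines show eno1 to eno4 reporting link up at 14:20:18 and 14:20:19, while corosync had already started and logged `has no active links` at 14:20:17. Which of these NICs carry ring0 and ring1 is not shown in the logs, so this is only a timing check. A small Python sketch, assuming the syslog line format shown in this post (timestamps have one-second resolution, so the offsets are approximate), that reports each interface's link-up time relative to the `Started Corosync Cluster Engine` line:

```python
import re

# Sample lines copied from the node04 log in this post.
LOG = """\
Dec 4 14:20:17 node04 systemd[1]: Started Corosync Cluster Engine.
Dec 4 14:20:18 node04 kernel: [ 17.121760] tg3 0000:01:00.0 eno1: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:18 node04 kernel: [ 17.520089] tg3 0000:01:00.1 eno2: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:19 node04 kernel: [ 17.613462] tg3 0000:02:00.0 eno3: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:19 node04 kernel: [ 18.033322] tg3 0000:02:00.1 eno4: Link is up at 1000 Mbps, full duplex
"""

# "Dec 4 14:20:17 ..." -> capture HH, MM, SS
TIME_RE = re.compile(r"^\w{3}\s+\d+\s+(\d{2}):(\d{2}):(\d{2})\s")
# "... eno1: Link is up at ..." -> capture the interface name
LINK_UP_RE = re.compile(r"\s(\S+): Link is up at")


def seconds_of_day(line):
    """Return the syslog HH:MM:SS timestamp as seconds since midnight, or None."""
    m = TIME_RE.match(line)
    if m is None:
        return None
    h, mnt, s = (int(g) for g in m.groups())
    return h * 3600 + mnt * 60 + s


def link_up_offsets(log):
    """Map interface -> seconds between corosync start and that NIC's link-up."""
    corosync_start = None
    offsets = {}
    for line in log.splitlines():
        t = seconds_of_day(line)
        if t is None:
            continue
        if "Started Corosync Cluster Engine" in line:
            corosync_start = t
        m = LINK_UP_RE.search(line)
        if m and corosync_start is not None:
            offsets[m.group(1)] = t - corosync_start
    return offsets


print(link_up_offsets(LOG))  # {'eno1': 1, 'eno2': 1, 'eno3': 2, 'eno4': 2}
```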

```
Dec 4 14:20:19 node04 pve-ha-crm[2492]: status change startup => wait_for_quorum
Dec 4 14:20:19 node04 systemd[1]: Started PVE Cluster Resource Manager Daemon.
Dec 4 14:20:19 node04 systemd[1]: Starting PVE Local HA Resource Manager Daemon...
Dec 4 14:20:19 node04 pveproxy[2494]: starting server
Dec 4 14:20:19 node04 pveproxy[2494]: starting 3 worker(s)
Dec 4 14:20:19 node04 pveproxy[2494]: worker 2495 started
Dec 4 14:20:19 node04 pveproxy[2494]: worker 2496 started
Dec 4 14:20:19 node04 pveproxy[2494]: worker 2497 started
Dec 4 14:20:19 node04 systemd[1]: Started PVE API Proxy Server.
Dec 4 14:20:19 node04 systemd[1]: Starting PVE SPICE Proxy Server...
Dec 4 14:20:19 node04 kernel: [ 18.033322] tg3 0000:02:00.1 eno4: Link is up at 1000 Mbps, full duplex
Dec 4 14:20:19 node04 kernel: [ 18.033330] tg3 0000:02:00.1 eno4: Flow control is on for TX and on for RX
Dec 4 14:20:19 node04 kernel: [ 18.033331] tg3 0000:02:00.1 eno4: EEE is disabled
Dec 4 14:20:19 node04 kernel: [ 18.033358] IPv6: ADDRCONF(NETDEV_CHANGE): eno4: link becomes ready
Dec 4 14:20:19 node04 spiceproxy[2499]: starting server
Dec 4 14:20:19 node04 spiceproxy[2499]: starting 1 worker(s)
Dec 4 14:20:19 node04 spiceproxy[2499]: worker 2500 started
Dec 4 14:20:19 node04 systemd[1]: Started PVE SPICE Proxy Server.
Dec 4 14:20:19 node04 pve-ha-lrm[2501]: starting server
Dec 4 14:20:19 node04 pve-ha-lrm[2501]: status change startup => wait_for_agent_lock
Dec 4 14:20:19 node04 systemd[1]: Started PVE Local HA Resource Manager Daemon.
Dec 4 14:20:19 node04 systemd[1]: Starting PVE guests...
Dec 4 14:20:20 node04 pve-guests[2502]: <root@pam> starting task UPID:d104:000009C7:0000075C:5DE75E34:startall::root@pam:
Dec 4 14:20:20 node04 pvesh[2502]: waiting for quorum ...
Dec 4 14:20:22 node04 pmxcfs[2286]: [status] notice: update cluster info (cluster name cluster1, version = 55)
Dec 4 14:20:22 node04 pmxcfs[2286]: [dcdb] notice: members: 7/2286
Dec 4 14:20:22 node04 pmxcfs[2286]: [dcdb] notice: all data is up to date
Dec 4 14:20:22 node04 pmxcfs[2286]: [status] notice: members: 7/2286
Dec 4 14:20:22 node04 pmxcfs[2286]: [status] notice: all data is up to date
Dec 4 14:20:33 node04 pvestatd[2439]: got timeout
Dec 4 14:20:35 node04 pvestatd[2439]: storage 'backup' is not online
Dec 4 14:20:40 node04 pvestatd[2439]: got timeout
Dec 4 14:20:40 node04 pvestatd[2439]: status update time (12.174 seconds)
Dec 4 14:20:42 node04 pvestatd[2439]: storage 'backup' is not online
Dec 4 14:20:47 node04 pvestatd[2439]: got timeout
Dec 4 14:20:50 node04 kernel: [ 48.658610] mlx4_en: enp4s0: Link Down
Dec 4 14:20:52 node04 pvestatd[2439]: got timeout
```

```
Dec 4 14:21:00 node04 corosync[2420]: [TOTEM ] A new membership (1.7f0) was formed. Members joined: 1 2 3 4 5 6 8 9 10 11 12 13 14 15 16 17 19
Dec 4 14:21:00 node04 corosync[2420]: [CPG ] downlist left_list: 0 received
Dec 4 14:21:00 node04 corosync[2420]: [CPG ] downlist left_list: 0 received
Dec 4 14:21:00 node04 pmxcfs[2286]: [dcdb] notice: members: 1/2340, 2/2302, 3/2332, 4/2654, 5/2352, 6/2355, 7/2286, 8/2141, 9/2418, 10/2246, 11/2190, 12/2343, 13/2345, 14/2351, 15/2366, 16/2265, 17/4790, 19/2282
Dec 4 14:21:00 node04 pmxcfs[2286]: [dcdb] notice: starting data syncronisation
Dec 4 14:21:00 node04 pmxcfs[2286]: [status] notice: members: 1/2340, 2/2302, 3/2332, 4/2654, 5/2352, 6/2355, 7/2286, 8/2141, 9/2418, 10/2246, 11/2190, 12/2343, 13/2345, 14/2351, 15/2366, 16/2265, 17/4790, 19/2282
Dec 4 14:21:00 node04 pmxcfs[2286]: [status] notice: starting data syncronisation
Dec 4 14:21:00 node04 corosync[2420]: [QUORUM] This node is within the primary component and will provide service.
Dec 4 14:21:00 node04 corosync[2420]: [QUORUM] Members[18]: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 19
Dec 4 14:21:00 node04 corosync[2420]: [MAIN ] Completed service synchronization, ready to provide service.
Dec 4 14:21:00 node04 pmxcfs[2286]: [status] notice: node has quorum
Dec 4 14:21:00 node04 corosync[2420]: [KNET ] pmtud: PMTUD link change for host: 12 link: 0 from 469 to 1397
Dec 4 14:21:00 node04 corosync[2420]: [KNET ] pmtud: PMTUD link change for host: 12 link: 1 from 469 to 1397
Dec 4 14:21:00 node04 corosync[2420]: [KNET ] pmtud: PMTUD link change for host: 10 link: 0 from 469 to 1397
Dec 4 14:21:00 node04 corosync[2420]: [KNET ] pmtud: PMTUD link change for host: 10 link: 1 from 469 to 1397
Dec 4 14:21:00 node04 corosync[2420]: [KNET ] pmtud: PMTUD link change for host: 6 link: 0 from 469 to 1397
```
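To summarize the corosync side of the full log, here is a Python sketch, assuming lines formatted exactly as pasted in this post, that counts how often each KNET host ID was reported with `has no active links` and lists every `[QUORUM] Members[...]` line with its time, member count, and node IDs. The sample holds a few lines copied from above; feeding it the complete node04 syslog gives the full picture.

```python
import re
from collections import Counter

# Sample lines copied from the node04 log in this post.
SAMPLE = """\
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 13 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [KNET ] host: host: 15 has no active links
Dec 4 14:20:17 node04 corosync[2420]: [QUORUM] Members[1]: 7
Dec 4 14:21:00 node04 corosync[2420]: [QUORUM] Members[18]: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 19
"""

NO_LINK_RE = re.compile(r"host: host: (\d+) has no active links")
# Captures the HH:MM:SS time, the member count, and the space-separated node IDs.
MEMBERS_RE = re.compile(
    r"^\w{3}\s+\d+\s+(\S+) .*\[QUORUM\] Members\[(\d+)\]:((?: \d+)*)"
)


def summarize(log):
    """Return (Counter of host IDs with no active links, list of memberships)."""
    no_links = Counter()
    memberships = []
    for line in log.splitlines():
        m = NO_LINK_RE.search(line)
        if m:
            no_links[int(m.group(1))] += 1
            continue
        m = MEMBERS_RE.match(line)
        if m:
            time, count, ids = m.groups()
            memberships.append((time, int(count), [int(i) for i in ids.split()]))
    return no_links, memberships


no_links, memberships = summarize(SAMPLE)
print(no_links)
for time, count, ids in memberships:
    print(time, count, ids)
```

On the sample, host 13 is counted twice and host 15 once, and the membership goes from `[7]` at 14:20:17 to 18 node IDs (1 to 17, plus 19) at 14:21:00.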