I have Proxmox 6.3-3 running a test environment. There are 17 LXCs and 5 VMs, of which I would run at most 3-4 simultaneously. The host machine has been running with no LXCs or VMs active for the past 5 days or so (i.e. it has just been idle whilst I was working on something else).

When I got back to it, I logged in via the web interface, intending to start a number of machines to test something.

I first noticed that there were a number of updates, so I installed them. Given that nothing was running and it had been a few months since the machine was last rebooted, I decided to reboot it.

Once it had rebooted, I tried to start an LXC, which failed. I then tried a VM, and it also failed.

I then tried each of the LXCs and VMs in turn, and they all failed to start.

I shut down the machine, and after it came back up I tried again, but got the same results.

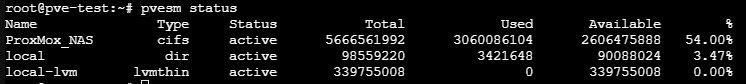

I then checked whether the drives were available, and they were.

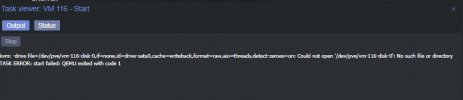

When trying to start an LXC, the following error message is provided:

If you look at the status tab, it shows:

I am not sure where to go from here to find the solution.

As an aside, within the last 30 days I have started each of the LXCs and VMs in turn without any problems and run the update/upgrade commands (or the equivalent) on each.

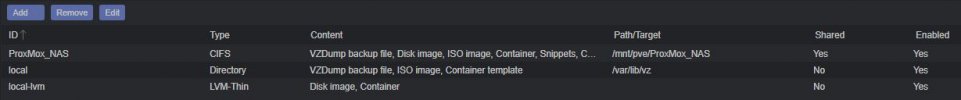

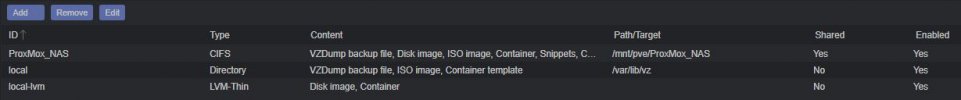

The only other change I made in this intervening period was to move the location of the ProxMox_NAS (pve-test) from one shared folder group to another on the NAS, for load balancing. The IP address and the target mount point name both remained the same.

For the new share to be recognised, I needed to remove the original entry and re-add it with the new location on the NAS (even though all the names and the IP address were the same). I thought a reboot would have re-established the logical links. Apart from the Proxmox updates I applied via the console, this has been the only change since I updated/upgraded the LXCs and VMs.

It just seems strange that all the LXCs and VMs would stop working at the same time. I would be grateful for any guidance on things to try, where to look, etc.

Regards

Geoff