Whenever we migrate Windows Server VMs, they get stuck on an error screen.

After a reboot they work normally again.

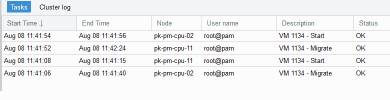

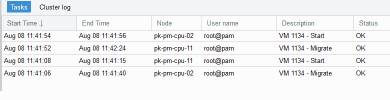

Today I migrated some VMs to another node and back again so I could update the PBS library used by the VMs.

All 4 VMs needed a restart afterwards.

They were a domain controller, two Exchange servers and an RDP gateway.

After the migration, CPU load was at 100%.

The QEMU guest agent is installed inside the VMs, and the guest agent option is enabled in Proxmox.

The PVE version is 7.2.7.

Migrating Debian VMs always works.

Any ideas?

Best regards
