So about half a year ago, I built my home server and installed Proxmox on it.

I went with two SATA SSDs as a ZFS mirror for the system, and selected that during installation.

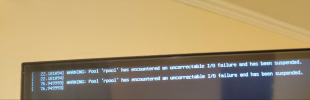

Now, half a year later, I decided to add an NVMe SSD to the system, and was greeted by the following message on boot:

A quick search suggests that the likely cause is the use of /dev/sd? names when the pool was created.

After removing the NVMe SSD and rebooting, I checked the rpool status, and the result confirmed that the pool was indeed using the /dev/sd? names.
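For anyone who wants to check the same thing on their own box, here is a rough sketch of what I mean. `zpool status rpool` shows which device names the pool members are using, and the small helper below (`by_id_for` is just a name I made up, not a Proxmox or ZFS tool) lists the by-id symlinks that point at a given disk. It assumes `readlink -f` is available, as it is on Debian-based systems, and it only reads, it does not change anything.

```shell
#!/bin/sh
# Hypothetical helper: print every symlink in a by-id style directory
# that resolves to the given device.
# Usage: by_id_for /dev/sda [/dev/disk/by-id]
by_id_for() {
    target=$(readlink -f "$1") || return 1
    dir=${2:-/dev/disk/by-id}
    for link in "$dir"/*; do
        # Skip the unexpanded glob and dangling links.
        [ -e "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$target" ]; then
            printf '%s\n' "$link"
        fi
    done
}

# Read-only checks to run on the host itself (ZFS must be installed):
#   zpool status rpool      # shows the vdev names the pool currently uses
#   by_id_for /dev/sda      # /dev/sda is an example device name
```

The second argument is only there so the helper can be pointed at another directory, for example /dev/disk/by-path.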

At this point I don't remember the exact install process. Maybe there was a checkbox I didn't check, but I'm not sure such behavior should be the default.

Now, the real question is: how do I work around all that?

Is there a way to move the rpool ZFS mirror over to /dev/disk/by-id/ names?