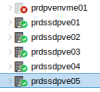

I have a 5-node cluster running Proxmox 6.0-7. When I add a 6th node, the entire cluster goes down: the GUI becomes unresponsive on all nodes, and I can't log in to the newly added node from the UI at all. I shelled into each of the nodes and ran pvecm status, with the result below. I've restarted corosync on each node and waited a few moments, but the cluster doesn't sync up, and I lose connectivity to most if not all VMs already on the cluster until I shut down the newly added node.

Code:

    Quorum information
    ------------------
    Date:             Mon Sep 30 08:57:11 2019
    Quorum provider:  corosync_votequorum
    Nodes:            1
    Node ID:          0x00000001
    Ring ID:          1/11680
    Quorate:          No

    Votequorum information
    ----------------------
    Expected votes:   6
    Highest expected: 6
    Total votes:      1
    Quorum:           4 Activity blocked
    Flags:

    Membership information
    ----------------------
        Nodeid      Votes Name
    0x00000001          1 10.0.3.145 (local)
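
For anyone reading the numbers above: as far as I understand it, the "Quorum: 4" figure is just a simple majority of the 6 expected votes, and with only 1 total vote (this node sees only itself) the node is not quorate, hence "Activity blocked". A minimal sketch of that arithmetic, assuming one vote per node and the default majority rule; the function names are made up for illustration and are not part of any Proxmox or corosync API:

```python
def majority_quorum(expected_votes: int) -> int:
    """Votes needed for a simple majority (assumes default votequorum behaviour)."""
    return expected_votes // 2 + 1


def is_quorate(total_votes: int, expected_votes: int) -> bool:
    """True if the votes this node can currently see reach the majority."""
    return total_votes >= majority_quorum(expected_votes)


# Values taken from the pvecm status output above.
expected = 6  # "Expected votes: 6"
total = 1     # "Total votes: 1"

print(majority_quorum(expected))    # 4, matches "Quorum: 4"
print(is_quorate(total, expected))  # False, matches "Quorate: No"
```

So each node would need to see at least 4 of the 6 members to become quorate again; here every node only sees itself.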