```
root@proxmox:~# pvs -vv
global/use_lvmpolld not found in config: defaulting to 1
devices/sysfs_scan not found in config: defaulting to 1
devices/scan_lvs not found in config: defaulting to 0
devices/multipath_component_detection not found in config: defaulting to 1
devices/md_component_detection not found in config: defaulting to 1
devices/fw_raid_component_detection not found in config: defaulting to 0
devices/ignore_suspended_devices not found in config: defaulting to 0
devices/ignore_lvm_mirrors not found in config: defaulting to 1
devices/scan_lvs not found in config: defaulting to 0
devices/allow_mixed_block_sizes not found in config: defaulting to 0
devices/hints not found in config: defaulting to "all"
activation/activation_mode not found in config: defaulting to "degraded"
metadata/record_lvs_history not found in config: defaulting to 0
devices/search_for_devnames not found in config: defaulting to "auto"
activation/monitoring not found in config: defaulting to 1
global/locking_type not found in config: defaulting to 1
global/wait_for_locks not found in config: defaulting to 1
global/prioritise_write_locks not found in config: defaulting to 1
global/locking_dir not found in config: defaulting to "/run/lock/lvm"
devices/md_component_detection not found in config: defaulting to 1
devices/md_component_checks not found in config: defaulting to "auto"
devices/multipath_wwids_file not found in config: defaulting to "/etc/multipath/wwids"
global/use_lvmlockd not found in config: defaulting to 0
report/output_format not found in config: defaulting to "basic"
log/report_command_log not found in config: defaulting to 0
report/aligned not found in config: defaulting to 1
report/buffered not found in config: defaulting to 1
report/headings not found in config: defaulting to 1
report/separator not found in config: defaulting to " "
report/prefixes not found in config: defaulting to 0
report/quoted not found in config: defaulting to 1
report/columns_as_rows not found in config: defaulting to 0
report/pvs_sort not found in config: defaulting to "pv_name"
report/pvs_cols_verbose not found in config: defaulting to "pv_name,vg_name,pv_fmt,pv_attr,pv_size,pv_free,dev_size,pv_uuid"
report/compact_output_cols not found in config: defaulting to ""
Locking /run/lock/lvm/P_global RB
devices/use_devicesfile not found in config: defaulting to 0
/dev/loop0: size is 0 sectors
/dev/sda: size is 468862128 sectors
/dev/loop1: size is 0 sectors
/dev/sda1: size is 2014 sectors
/dev/loop2: size is 0 sectors
/dev/sda2: size is 2097152 sectors
/dev/loop3: size is 0 sectors
/dev/sda3: size is 466762895 sectors
/dev/loop4: size is 0 sectors
/dev/loop5: size is 0 sectors
/dev/loop6: size is 0 sectors
/dev/loop7: size is 0 sectors
/dev/sdb: size is 7814037167 sectors
/dev/sdb1: size is 262144 sectors
/dev/sdb2: size is 7813771264 sectors
/dev/sdc: size is 57482936320 sectors
Setting devices/global_filter to global_filter = [ "r|/dev/zd.*|", "r|/dev/rbd.*|" ]
devices/filter not found in config: defaulting to filter = [ "a|.*|" ]
devices/devicesfile not found in config: defaulting to "system.devices"
/dev/loop0: using cached size 0 sectors
/dev/sda: using cached size 468862128 sectors
/dev/loop1: using cached size 0 sectors
/dev/sda1: using cached size 2014 sectors
/dev/loop2: using cached size 0 sectors
/dev/sda2: using cached size 2097152 sectors
/dev/loop3: using cached size 0 sectors
/dev/sda3: using cached size 466762895 sectors
/dev/loop4: using cached size 0 sectors
/dev/loop5: using cached size 0 sectors
/dev/loop6: using cached size 0 sectors
/dev/loop7: using cached size 0 sectors
/dev/sdb: using cached size 7814037167 sectors
/dev/sdb1: using cached size 262144 sectors
/dev/sdb2: using cached size 7813771264 sectors
/dev/sdc: using cached size 57482936320 sectors
/dev/sda3: using cached size 466762895 sectors
/dev/sda3: using cached size 466762895 sectors
/dev/sdc: using cached size 57482936320 sectors
/dev/sdc: using cached size 57482936320 sectors
/dev/sdc: using cached size 57482936320 sectors
```

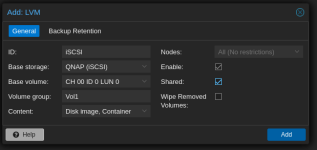

```
Locking /run/lock/lvm/V_Vol1 RB
metadata/lvs_history_retention_time not found in config: defaulting to 0
/dev/sdc: using cached size 57482936320 sectors
Processing PV /dev/sdc in VG Vol1.
/dev/sdc: using cached size 57482936320 sectors
Unlocking /run/lock/lvm/V_Vol1
Locking /run/lock/lvm/V_pve RB
Stack pve/data:0[0] on LV pve/data_tdata:0.
Adding pve/data:0 as an user of pve/data_tdata.
Adding pve/data:0 as an user of pve/data_tmeta.
Adding pve/vm-106-disk-0:0 as an user of pve/data.
metadata/lvs_history_retention_time not found in config: defaulting to 0
/dev/sda3: size is 466762895 sectors
Processing PV /dev/sda3 in VG pve.
/dev/sda3: using cached size 466762895 sectors
Unlocking /run/lock/lvm/V_pve
Reading orphan VG #orphans_lvm2.
report/compact_output not found in config: defaulting to 0
PV        VG   Fmt  Attr PSize    PFree   DevSize  PV UUID
/dev/sda3 pve  lvm2 a--  <222.57g  16.00g <222.57g zC3XWN-K1bW-8uVj-HV29-6kbf-yFe0-WSN5bW
/dev/sdc  Vol1 lvm2 a--   <26.77t <15.64t  <26.77t 6tEYr9-iAXl-ulks-Icbx-gAFJ-d8sY-v24XwE
Unlocking /run/lock/lvm/P_global
global/notify_dbus not found in config: defaulting to 1
```
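The device-scan lines report sizes in sectors, while the report table at the end shows the same PVs in binary units (`g` = GiB, `t` = TiB). The POSIX shell sketch below cross-checks the two. It assumes that LVM counts in 512-byte sectors regardless of the disk's physical sector size, which this output does not state itself. The helper name `sectors_to_human` is hypothetical.

```shell
#!/bin/sh
# Convert an LVM sector count to a human-readable binary size.
# Assumption: one LVM "sector" = 512 bytes.
# sectors_to_human is a hypothetical helper, not an LVM tool.
sectors_to_human() {
  awk -v s="$1" 'BEGIN {
    bytes = s * 512
    if (bytes >= 1024 ^ 4)
      printf "%.2f TiB\n", bytes / (1024 ^ 4)   # tebibytes
    else
      printf "%.2f GiB\n", bytes / (1024 ^ 3)   # gibibytes
  }'
}

sectors_to_human 466762895     # /dev/sda3 sector count from the scan
sectors_to_human 57482936320   # /dev/sdc sector count from the scan
```

The two calls should print `222.57 GiB` and `26.77 TiB`. These results match the `<222.57g` and `<26.77t` PSize/DevSize values in the table. In both cases the exact figure (about 222.5699 and 26.7676) is slightly below the rounded one. That is consistent with LVM using the `<` prefix to mark a displayed size that was rounded up.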