Adding 2 new drives to expand a raidz2 pool

- Thread starter dominusdj

Post the output of 'zpool get all' and 'zfs get all' (I suggest you use e.g. a pastebin website, so you don't take up large chunks of text space here).

Here are the outputs:

zpool get all

zfs get all

Thanks a lot!

I don't see anything out of the ordinary on the zpool side, but I recommend you set atime=off on all datasets to save on writes (with atime=on, reading a file also updates its access time, which is itself a write).
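To check which datasets still have atime enabled, one option is to parse the tab-separated output of 'zfs get -H -r -o name,value,source atime DataZFS'. A minimal Python sketch, assuming that output format; the function names are hypothetical, and volumes (which have no atime) are assumed to report '-' and are skipped:

```python
import subprocess


def datasets_with_atime_on(zfs_get_output: str) -> list[str]:
    """Return the datasets whose atime value is 'on'.

    Expects tab-separated NAME, VALUE, SOURCE lines as printed by
    'zfs get -H -o name,value,source atime'. Lines whose value is '-'
    (property not applicable, assumed for zvols) are ignored.
    """
    names = []
    for line in zfs_get_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, value, _source = line.split("\t")
        if value == "on":
            names.append(name)
    return names


def read_atime_from_system(pool: str = "DataZFS") -> str:
    """Run zfs on the host (requires ZFS tools); '-r' recurses from the pool root."""
    result = subprocess.run(
        ["zfs", "get", "-H", "-r", "-o", "name,value,source", "atime", pool],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

On the host this would be used as 'datasets_with_atime_on(read_atime_from_system())'. Since atime is inherited, setting it once on DataZFS covers every child dataset that does not override it locally.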

DataZFS available 427G

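^ That is a more accurate representation of usable space than the free space shown by 'zpool': on raidz, 'zpool' counts raw capacity including parity, while 'zfs' reports what is actually usable.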
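A rough way to see why the two figures differ: on raidz2 the raw space reported by 'zpool' includes two parity disks' worth of capacity, so usable space is roughly the raw figure scaled by (disks - 2) / disks. A minimal sketch; the disk count and size below are hypothetical, and it ignores raidz padding, metadata, and the pool's reserved slop space, all of which make the real 'zfs' figure smaller:

```python
def approx_usable_raidz(raw: float, disks: int, parity: int = 2) -> float:
    """Approximate usable space from raw raidz space (same unit in and out).

    Ignores allocation padding, metadata, and slop space, so the real
    'zfs available' value will be somewhat lower than this estimate.
    """
    if disks <= parity:
        raise ValueError("a raidz vdev needs more disks than parity devices")
    return raw * (disks - parity) / disks


# Hypothetical example: 6 disks in raidz2, 3.0 TiB raw free reported by 'zpool'.
print(f"{approx_usable_raidz(3.0, 6):.2f} TiB usable (approx.)")
```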

DataZFS recordsize 128K default

You can modify recordsize per dataset; a dataset holding e.g. large media files would benefit from recordsize=1M.
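Part of the benefit is simply fewer records per file, and therefore fewer block pointers and checksums to store and track. A small arithmetic sketch with a hypothetical 10 GiB file; it only counts records and ignores compression:

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def records_for_file(file_size: int, recordsize: int) -> int:
    """Number of recordsize-sized records needed to hold a file (ceiling division).

    Assumes the file is larger than recordsize; compression is ignored.
    """
    return (file_size + recordsize - 1) // recordsize


file_size = 10 * GIB  # hypothetical large media file
print(records_for_file(file_size, 128 * KIB))  # default recordsize=128K
print(records_for_file(file_size, 1 * MIB))    # recordsize=1M
```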

I thought you might have had 'copies=2' set somewhere, but this is not the case.

The other usual suspect, dedup, is off everywhere.

This stands out a bit:

[[

DataZFS/vm-103-disk-0  used             6.01T                 -
DataZFS/vm-103-disk-0  available        4.48T                 -
DataZFS/vm-103-disk-0  referenced       1.94T                 -
DataZFS/vm-103-disk-0  compressratio    1.01x                 -
DataZFS/vm-103-disk-0  reservation      none                  default
DataZFS/vm-103-disk-0  volsize          4.00T                 local
DataZFS/vm-103-disk-0  volblocksize     16K                   default
DataZFS/vm-103-disk-0  checksum         on                    default
DataZFS/vm-103-disk-0  compression      on                    inherited from DataZFS
DataZFS/vm-103-disk-0  readonly         off                   default
DataZFS/vm-103-disk-0  createtxg        32700                 -
DataZFS/vm-103-disk-0  copies           1                     default
DataZFS/vm-103-disk-0  refreservation   4.07T                 local
DataZFS/vm-103-disk-0  guid             18439402590032835261  -
DataZFS/vm-103-disk-0  primarycache     all                   default
DataZFS/vm-103-disk-0  secondarycache   all                   default
DataZFS/vm-103-disk-0  usedbysnapshots  1.03G                 -
DataZFS/vm-103-disk-0  usedbydataset    1.94T                 -
]]
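The numbers in that output add up if the volume's refreservation is being charged on top of the data it references. For a volume, 'used' is the sum of usedbydataset, usedbysnapshots, usedbychildren and usedbyrefreservation; the output above does not show the last two, so this sketch infers usedbyrefreservation, assuming usedbychildren is 0 (a zvol has no child datasets). Sizes are read as binary units (T = TiB), and the displayed values are rounded, so the result is approximate:

```python
# Exponents of 1024 for the size suffixes printed by 'zfs get'.
UNITS = {"K": 1, "M": 2, "G": 3, "T": 4, "P": 5}


def to_tib(value: str) -> float:
    """Convert a 'zfs get' size such as '6.01T' or '1.03G' to TiB."""
    number, unit = float(value[:-1]), value[-1]
    return number * 1024 ** (UNITS[unit] - UNITS["T"])


used = to_tib("6.01T")
usedbydataset = to_tib("1.94T")
usedbysnapshots = to_tib("1.03G")
usedbychildren = 0.0  # assumption: a zvol has no child datasets

# used = usedbydataset + usedbysnapshots + usedbychildren + usedbyrefreservation
implied_refres_charge = used - usedbydataset - usedbysnapshots - usedbychildren
print(f"implied usedbyrefreservation ~ {implied_refres_charge:.2f} TiB")
print(f"refreservation property       = {to_tib('4.07T'):.2f} TiB")
```

The inferred charge comes out at roughly the full 4.07T refreservation, i.e. the reservation is counted in addition to the 1.94T of data. The nonzero usedbysnapshots shows that at least one snapshot of this volume existed when the output was taken; data shared with a snapshot no longer counts toward the reservation, which is consistent with it being charged almost in full.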

You may want to move that disk $somewhere_else to other storage (possibly XFS or lvm-thin) and see if it fixes the space discrepancy.

OK, I set atime=off on DataZFS.

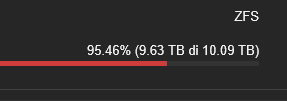

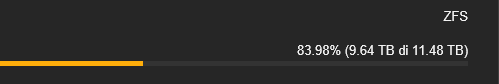

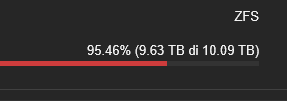

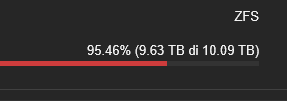

If you compare the pool before and after adding the two new drives to the raidz2, you can see that something must have expanded automatically, so there is definitely something wrong.

Before

After

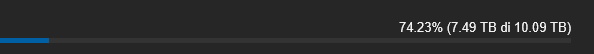

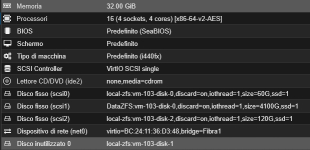

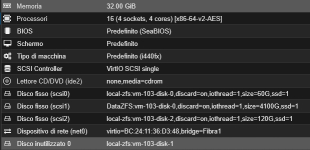

VM 103 is TrueNAS, and these are its settings.

It could be because it's ZFS on ZFS, but I don't think that's the real reason.

It's probably this VM that's causing problems, especially since I can't explain this storage usage.

I never told it to take up all these TB.

DataZFS/vm-103-disk-0 used 6.01T

DataZFS/vm-103-disk-0 available 4.48T

I expanded the pool so that I could take snapshots, and I'm in the same situation as before: all the space is occupied and there are no snapshots.
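The zfsprops documentation states that when refreservation is set, a snapshot is only allowed if there is enough free pool space outside of this reservation to accommodate the dataset's current "referenced" bytes. A minimal sketch of that check; the free-space figures are hypothetical, and 1.94 TiB is the referenced value of DataZFS/vm-103-disk-0 from the output earlier in the thread:

```python
def snapshot_allowed(free_outside_reservations: float, referenced: float) -> bool:
    """Refreservation snapshot rule: unreserved free space must cover 'referenced'.

    Both values must use the same unit (TiB here).
    """
    return free_outside_reservations >= referenced


def extra_space_needed(free_outside_reservations: float, referenced: float) -> float:
    """How much more unreserved space the pool would need before a snapshot fits."""
    return max(0.0, referenced - free_outside_reservations)


referenced = 1.94  # TiB, 'referenced' of DataZFS/vm-103-disk-0
for free in (1.5, 2.5):  # hypothetical unreserved free space in TiB
    ok = snapshot_allowed(free, referenced)
    missing = round(extra_space_needed(free, referenced), 2)
    print(f"free={free} TiB -> allowed={ok}, missing={missing} TiB")
```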
