Forgot to mention: ceph-volume now offers this ability as well.

https://docs.ceph.com/en/latest/ceph-volume/lvm/migrate/

Based on these docs from Ceph, I used the following:

- First, set the noout flag for the OSD

ceph osd set-group noout osd

- Then stop the OSD

systemctl stop ceph-osd@0

- Create the LVM volume on the new device

ceph-volume lvm create --data /dev/sdc

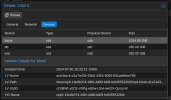

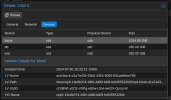

- Check the LVM volume that was created

ceph-volume lvm list

- Attach the new volume to the OSD as its DB (new-db)

ceph-volume lvm new-db --osd-id 0 --osd-fsid d1a7e434-53b0-4454-9060-851ae8ebe785 --target ceph-f8683677-0651-4d19-8e19-83aff7c1cf07/osd-block-189361f7-7b82-42c0-bbac-833dd5b2a5454

Works fine.
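The steps above can be collected into a small script. This is only a rough sketch under a few assumptions: the `run` and `attach_volume` helpers and the `DRY_RUN` variable are names made up here, the noout flag is scoped to the single OSD as `osd.N`, and the target LV is expected to exist already (take its vg/lv name from `ceph-volume lvm list`). With `DRY_RUN=1`, which is the default, it only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# Sketch: attach an existing LV to an OSD as its DB or WAL volume.
# DRY_RUN=1 (the default) prints the commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

# run: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# attach_volume KIND OSD_ID OSD_FSID TARGET_LV
#   KIND is "db" or "wal"; TARGET_LV is the vg/lv name of the new volume.
attach_volume() {
    kind="$1"; osd_id="$2"; osd_fsid="$3"; target="$4"
    case "$kind" in
        db|wal) ;;
        *) echo "kind must be db or wal" >&2; return 1 ;;
    esac
    # Assumption: the flag is set per OSD (osd.N) rather than cluster-wide.
    run ceph osd set-group noout "osd.$osd_id" || return 1
    run systemctl stop "ceph-osd@$osd_id" || return 1
    run ceph-volume lvm "new-$kind" --osd-id "$osd_id" \
        --osd-fsid "$osd_fsid" --target "$target" || return 1
    run systemctl start "ceph-osd@$osd_id" || return 1
    run ceph osd unset-group noout "osd.$osd_id"
}
```

For example, `attach_volume db 0 d1a7e434-53b0-4454-9060-851ae8ebe785 <vg>/<lv>` prints the five commands for the DB case; set `DRY_RUN=0` only once the output looks right.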

Now do the same for the new WAL (new-wal):

ceph-volume lvm create --data /dev/sdd

ceph-volume lvm list

ceph-volume lvm new-wal --osd-id 0 --osd-fsid d1a7e434-53b0-4454-9060-851ae8ebe785 --target ceph-e8798be2-c799-4c31-b067-

After that I got:

Everything seems to be OK, but after starting the OSD and unsetting noout, I see this HEALTH_WARN:

HEALTH_WARN: 1 OSD(s) experiencing BlueFS spillover

osd.0 spilled over 768 KiB metadata from 'db' device (22 MiB used of 280 GiB) to slow device
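To spot this warning from a script, the health output can be filtered for the spillover line. A minimal sketch, assuming the detail line keeps the format shown above; `spillover_osds` is a name made up here, and it reads text on stdin so it can be fed from `ceph health detail`.

```shell
# spillover_osds: read `ceph health detail`-style text on stdin and print
# the name of each OSD that reports BlueFS spillover, one per line.
spillover_osds() {
    sed -n 's/^[[:space:]]*\(osd\.[0-9][0-9]*\) spilled over .*/\1/p'
}
```

Usage would be something like `ceph health detail | spillover_osds`; an empty result means no OSD line matched the spillover format.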

Since this is just a lab and I am running Proxmox on top of Proxmox with nested virtualization, I assume this has something to do with the devices, which are pretty much fake or pseudo devices.

In a real scenario with real devices this would not happen, I suppose.

That's it.