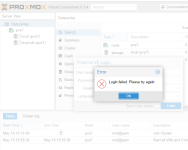

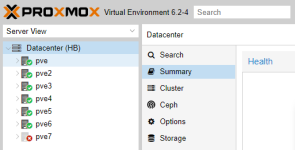

Hello, I have been trying to add a new node to my cluster, but after I added it, the node does not work via the web interface; I can only access it using SSH.

So I reinstalled Proxmox and tried again, but the problem is the same. Of course, I deleted the node from my cluster beforehand with "pvecm delnode pve7".

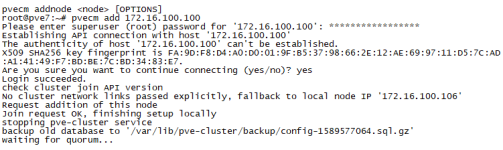

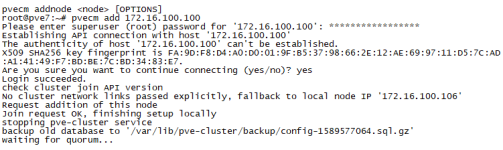

When the new installation finished, I connected to my new node pve7 using SSH, and this time used the command line:

pvecm add 172.16.100.100

And I got the same result.

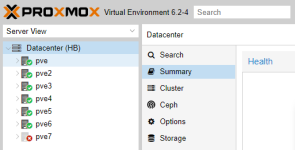

In my cluster I can see the node, but it shows as offline.
