I spent this weekend on a long project: adding two new nodes to my cluster (fresh installs of PVE 9) and upgrading my existing node from PVE 8 and PBS 3. The upgrade and clustering went mostly fine. One thing that did go wrong was that the NFS share on my Synology NAS, where I dump my backups, got unmounted. Not realizing the share was unmounted, and thinking I had lost backups in the upgrade, I just ran my backup job. I got errors that the disk was full, and that is when I realized the share was no longer mounted. For some reason, instead of going out via the vmbr0 bridge with the static IP that is allowed in the NFS permissions, the node was trying to communicate using the newly created cluster network IP, which was not whitelisted. But that's a problem for another day.

So my issue is that the backups went to the wrong place: onto the local disk, under the empty mount point. I unmounted the share, ran rm -r on the /mnt/pbs/Synology directory, and removed all the files that were mistakenly written there. I then rebooted the node and verified that all the mounts came back automatically (they did). All my actual backups were still in place on the NAS.

However, my "local" is still full.

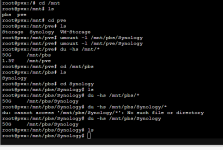

I unmounted the share again and ran "du -hs /mnt/pbs/*". It shows 50G of usage in the "Synology" folder, but when I run "ls" on that folder it lists nothing.
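For reference, here is a rough sketch of that check, extended to include dot-entries, which a plain "ls" and the "*" glob both skip. Whether hidden entries actually account for the 50G is only an assumption on my part and not something I have confirmed. The function name hidden_usage is made up for illustration, and the script assumes GNU find and sort, as shipped with Debian-based systems.

```shell
#!/bin/sh
# Hypothetical helper: show the disk usage of every entry directly under a
# directory, including names that start with a dot, sorted smallest to largest.
hidden_usage() {
    dir="$1"
    # -mindepth 1 / -maxdepth 1 limit find to the direct children of "$dir".
    # Unlike a bare "ls" or the shell glob "*", find does not skip dot-entries.
    find "$dir" -mindepth 1 -maxdepth 1 -exec du -sh {} + | sort -h
}

# Usage (path taken from this post; run it with the NFS share unmounted so
# the local directory underneath the mount point is what gets inspected):
# hidden_usage /mnt/pbs/Synology
```

If this prints nothing for a directory that "du" still reports as large, the space is being held by something other than hidden files and the assumption above is wrong.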

What am I missing for this consumed space, and how do I reclaim it?

Thanks to anyone who can help.
