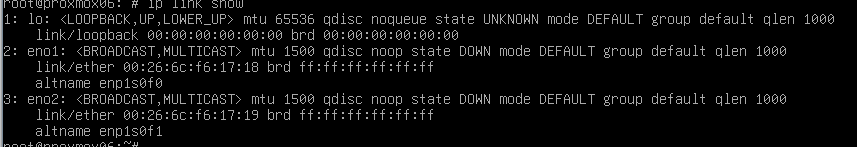

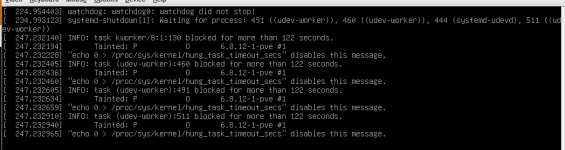

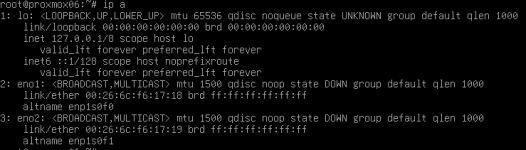

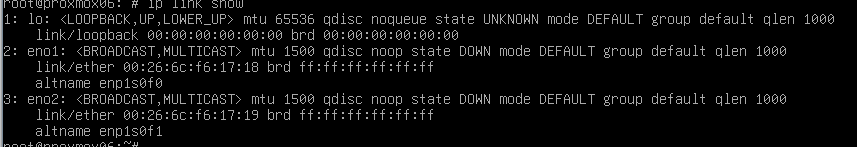

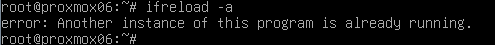

I installed this cluster about a year and a half ago. It hasn't been patched (don't yell at me, not my call), but it has been rock solid. Today, that all changed. Everything went offline, with no link on the NICs (a bonded pair per server). All other hardware is fine: the storage server, firewalls, etc. are all on the same switch and aren't experiencing any issues. We've tried knocking the network config down to a single interface (removing the bond) and breaking the LAGs on the switch. Bringing the NICs up manually does make them show as UP, but they're not really up, and vmbr0 never comes online.

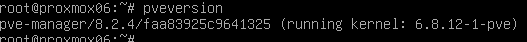

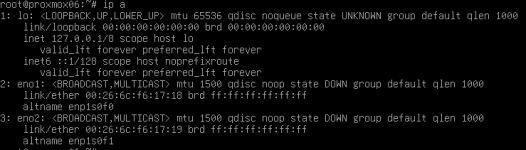

A sample from one of them (excuse the screenshots; this is from a really old iDRAC):

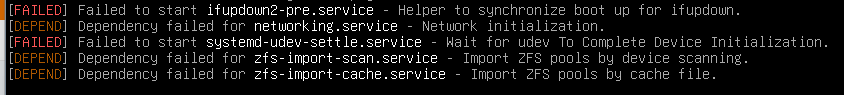

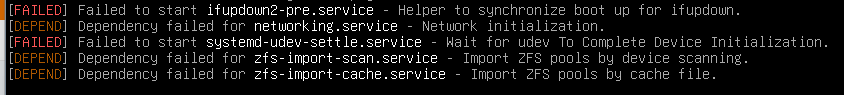

On boot: