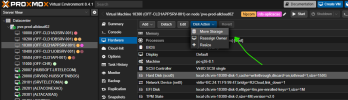

You can insert another SSD/NVMe drive, create a pool on it with compression disabled, and move the VM to that pool. Alternatively, move only the VM's virtual hard disk to the new (uncompressed) pool.

I didn't try with compression disabled, because I rebuilt the volume as LVM-Thin and had the same I/O issues. The main NVMe drive also uses the default file system rather than ZFS.