I have a consistent repro of a kernel panic that seems to be caused by some interaction between cloud-init and Proxmox VE 8.3. It's likely not something your average user will hit, as it requires expanding a disk on first install (whereas most click-ops installs would use the ISO installer, I assume). My original example used Ansible, and I found the same issue reported against a Terraform provider: https://github.com/bpg/terraform-provider-proxmox/issues/1639. I can also reproduce the issue entirely natively in Proxmox:

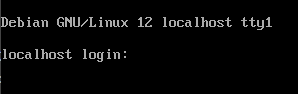


- Download the latest debian-12-generic-amd64.qcow2 from https://cdimage.debian.org/images/cloud/bookworm/ (example is using the image uploaded on 2024-12-02 17:54)

- Create a VM without a disk and enable cloud-init (see the resulting .conf below for the settings used).

- Import the previously downloaded qcow2:

    qm disk import 100 /var/lib/vz/template/vm/debian-12-generic-amd64.qcow2 local-zfs

- Associate/mount the disk using default settings in the VM hardware config.

- Expand the disk in the web UI (I expanded mine by 64G, though my Ansible example expands by 62G).

- Start the VM (below is the final .conf file)

- A reboot/reset makes it past the kernel panic, and it *appears* as though the cloud-init is applied properly.

- The issue can be worked around by adding `serial0: socket` to the VM config. Not sure at all why this would be.
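The web UI steps above can also be approximated with the `qm` CLI. This is a rough sketch, not the exact procedure used for this report: the option values are read off the final .conf, the cloud-init password is left out, and the volume name `vm-100-disk-2` is an assumption that only holds if the EFI disk and TPM state are created before the import. `DRY_RUN` and the function names are introduced for this sketch; by default it only prints the commands.

```shell
#!/bin/sh
# Sketch: reproduce the steps with the qm CLI instead of the web UI.
# Assumptions: DRY_RUN=1 (default) only prints commands; the imported
# volume is named vm-<VMID>-disk-2, which may differ on other hosts.

VMID="${VMID:-100}"
STORAGE="${STORAGE:-local-zfs}"
IMAGE="${IMAGE:-/var/lib/vz/template/vm/debian-12-generic-amd64.qcow2}"
GROW="${GROW:-+64G}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

repro() {
    # VM without a data disk, settings taken from the final .conf
    run qm create "$VMID" --name test --memory 4096 --cores 2 --sockets 2 \
        --cpu host --bios ovmf --machine q35 --ostype l26 --agent 1 \
        --onboot 1 --scsihw virtio-scsi-single \
        --net0 virtio,bridge=vmbr0,tag=10
    run qm set "$VMID" --efidisk0 "$STORAGE:1,efitype=4m"
    run qm set "$VMID" --tpmstate0 "$STORAGE:1,version=v2.0"
    # cloud-init drive and basic user/network settings (password omitted)
    run qm set "$VMID" --ide0 "$STORAGE:cloudinit" --ciuser testuser --ipconfig0 ip=dhcp
    # import the cloud image, attach it, grow it, then boot
    run qm disk import "$VMID" "$IMAGE" "$STORAGE"
    run qm set "$VMID" --scsi0 "$STORAGE:vm-$VMID-disk-2,iothread=1"
    run qm disk resize "$VMID" scsi0 "$GROW"
    run qm start "$VMID"
}

# The workaround from the last step: add a serial socket to the VM.
workaround() {
    run qm set "$VMID" --serial0 socket
}

repro
```

Setting `DRY_RUN=0` on a scratch host would execute the commands instead of printing them. Calling `workaround` before `qm start` applies the `serial0: socket` setting mentioned above.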

Code:

    root@pve01:~# cat /etc/pve/qemu-server/100.conf
    agent: 1
    bios: ovmf
    boot:
    cipassword: $5$3VyDlYb6$9e.xg94NF1/HWdchmwqKOtcoh3gc5h9UUDu196bmVV5
    ciuser: testuser
    cores: 2
    cpu: host
    efidisk0: local-zfs:vm-100-disk-0,efitype=4m,size=1M
    ide0: local-zfs:vm-100-cloudinit,media=cdrom
    ipconfig0: ip=dhcp
    machine: q35
    memory: 4096
    meta: creation-qemu=9.0.2,ctime=1736116049
    name: test
    net0: virtio=BC:24:11:06:EF:F5,bridge=vmbr0,tag=10
    numa: 0
    onboot: 1
    ostype: l26
    scsi0: local-zfs:vm-100-disk-2,iothread=1,size=66G
    scsihw: virtio-scsi-single
    smbios1: uuid=b4913ce3-c7f0-4eaf-9fab-1ab7f7a5738b
    sockets: 2
    tpmstate0: local-zfs:vm-100-disk-1,size=4M,version=v2.0
    vmgenid: 6091fe0e-7915-4bea-833d-161da806c628

Result: the VM kernel-panics on first boot.

pveversion -v:

Code:

    root@pve01:~# pveversion
    pve-manager/8.3.2/3e76eec21c4a14a7 (running kernel: 6.8.12-5-pve)
    root@pve01:~# pveversion -v
    proxmox-ve: 8.3.0 (running kernel: 6.8.12-5-pve)
    pve-manager: 8.3.2 (running version: 8.3.2/3e76eec21c4a14a7)
    proxmox-kernel-helper: 8.1.0
    proxmox-kernel-6.8: 6.8.12-5
    proxmox-kernel-6.8.12-5-pve-signed: 6.8.12-5
    ceph-fuse: 17.2.7-pve3
    corosync: 3.1.7-pve3
    criu: 3.17.1-2
    glusterfs-client: 10.3-5
    ifupdown2: 3.2.0-1+pmx11
    ksm-control-daemon: 1.5-1
    libjs-extjs: 7.0.0-5
    libknet1: 1.28-pve1
    libproxmox-acme-perl: 1.5.1
    libproxmox-backup-qemu0: 1.4.1
    libproxmox-rs-perl: 0.3.4
    libpve-access-control: 8.2.0
    libpve-apiclient-perl: 3.3.2
    libpve-cluster-api-perl: 8.0.10
    libpve-cluster-perl: 8.0.10
    libpve-common-perl: 8.2.9
    libpve-guest-common-perl: 5.1.6
    libpve-http-server-perl: 5.1.2
    libpve-network-perl: 0.10.0
    libpve-rs-perl: 0.9.1
    libpve-storage-perl: 8.3.3
    libspice-server1: 0.15.1-1
    lvm2: 2.03.16-2
    lxc-pve: 6.0.0-1
    lxcfs: 6.0.0-pve2
    novnc-pve: 1.5.0-1
    proxmox-backup-client: 3.3.2-1
    proxmox-backup-file-restore: 3.3.2-2
    proxmox-firewall: 0.6.0
    proxmox-kernel-helper: 8.1.0
    proxmox-mail-forward: 0.3.1
    proxmox-mini-journalreader: 1.4.0
    proxmox-offline-mirror-helper: 0.6.7
    proxmox-widget-toolkit: 4.3.3
    pve-cluster: 8.0.10
    pve-container: 5.2.3
    pve-docs: 8.3.1
    pve-edk2-firmware: 4.2023.08-4
    pve-esxi-import-tools: 0.7.2
    pve-firewall: 5.1.0
    pve-firmware: 3.14-2
    pve-ha-manager: 4.0.6
    pve-i18n: 3.3.2
    pve-qemu-kvm: 9.0.2-4
    pve-xtermjs: 5.3.0-3
    qemu-server: 8.3.3
    smartmontools: 7.3-pve1
    spiceterm: 3.3.0
    swtpm: 0.8.0+pve1
    vncterm: 1.8.0
    zfsutils-linux: 2.2.6-pve1

Hardware: Dell R730XD

- CPU: Xeon E5-2683v4 (2X)

- RAM: 256GB DDR4

- Disks: 6x Toshiba 3.84TB SAS SSD in ZFS RAID 10
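For comparing a host that shows the panic against one that does not, the long `pveversion -v` listing can be narrowed to a handful of packages. The sketch below is only illustrative: the package selection in `PKGS` and the helper name `filter_versions` are assumptions made for this example, not a statement about which component is at fault.

```shell
#!/bin/sh
# Sketch: reduce `pveversion -v` output to a few packages of interest.
# The package list is an illustrative assumption, not a diagnosis.
PKGS='proxmox-kernel-6.8|pve-qemu-kvm|qemu-server|pve-edk2-firmware|zfsutils-linux'

# Reads "name: version" lines on stdin and keeps only the listed packages.
filter_versions() {
    grep -E "^(${PKGS}):"
}

# Only runs on a host where pveversion is actually installed.
if command -v pveversion >/dev/null 2>&1; then
    pveversion -v | filter_versions
fi
```

The filtered lines from two hosts can then be compared with `diff` to spot version differences quickly.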
