Hi all,

Fortunately, I have Zabbix keeping trace of containers parameter from inside, and I see a very strange thing with LXC swap: for some containres it runs well, for some it grows from container start moment and till 100% and then only go back to zero with container restart. I suspect this 100% swap to be a reason for containres occasionally go down by itself, silenty and without simple reason. At least, I did not found the reason. However, the most problem container is one with the most fast swap usage grow rate.

All my containers are identical Oracle Linux 8.8, may vary by update level, some more updated, some less, but there no clear correlation to update level.

It seems like swap grow rate depends of what kind of app inside. It runs well with Percona DB, Gitea and FreeRadius. Ir rise with about the same speed for some container group with different apps: BIND, php-fpm+nginx, zabbix server and it's php-based frontend, exim. What's really wonder, all their charts goes parallel, means the same swap rise speed. And I have on 'champion' LXC which grows times faster then others. It runs Kannel, SMS gateway software and do some IP-level forwards.

It seesm like it correlate to host (not container!) memory load: all 'leaking' LXCs seems to be located on seeervers with less phy memory. But these servers has about the same % of memory load that bigger ones. And finally host servers has swap on separate SSD partitions, so there no effect from underlay filesystem.

Last but not list: when I tr to find out who, which process swaps - it shows that there no processes with these amounts of swap at all! From inside LXC I see a very little, dozen of Mb swap bound to processes while 1G of swap shown as 100% used. The same picture on the host level: swap of the host itself is very low, and most of it bound to KVM processes, which are apart from LXC.

Searching for forums, I meet mention that it is a problem, related to cgroup1 use, but it should not be cgroup1 in my system: I was use Centos7 and has to reinstall all my apps/containers from scratch before migrate from 6x to 7x, just becuase of cgroup issue.

I am tracing back this problem for at least two years, up to the depth of my zabbix history data. It was not seen to me because there was no triggers for swap too much, and I only found it now occasionally.

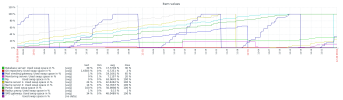

Pls, have a look to the 3 monthhistorical chart, it is very cuorious.

If someone has an idea about reasons of this? Any ideas about steps to find the reason? Or just set swap off for all the containers, watch the total memory and rely on the host swap management?

System details: 4 absolutely the same servers, but 2x 64GB RAM and 2x128GB RAM, VE 7.4-16, ZFS mirror root, all containers on ZFS mirrored volume, OL8 minimal image was taken from Oracle site.

Fortunately, I have Zabbix keeping trace of containers parameter from inside, and I see a very strange thing with LXC swap: for some containres it runs well, for some it grows from container start moment and till 100% and then only go back to zero with container restart. I suspect this 100% swap to be a reason for containres occasionally go down by itself, silenty and without simple reason. At least, I did not found the reason. However, the most problem container is one with the most fast swap usage grow rate.

All my containers are identical Oracle Linux 8.8, may vary by update level, some more updated, some less, but there no clear correlation to update level.

It seems like swap grow rate depends of what kind of app inside. It runs well with Percona DB, Gitea and FreeRadius. Ir rise with about the same speed for some container group with different apps: BIND, php-fpm+nginx, zabbix server and it's php-based frontend, exim. What's really wonder, all their charts goes parallel, means the same swap rise speed. And I have on 'champion' LXC which grows times faster then others. It runs Kannel, SMS gateway software and do some IP-level forwards.

It seesm like it correlate to host (not container!) memory load: all 'leaking' LXCs seems to be located on seeervers with less phy memory. But these servers has about the same % of memory load that bigger ones. And finally host servers has swap on separate SSD partitions, so there no effect from underlay filesystem.

Last but not list: when I tr to find out who, which process swaps - it shows that there no processes with these amounts of swap at all! From inside LXC I see a very little, dozen of Mb swap bound to processes while 1G of swap shown as 100% used. The same picture on the host level: swap of the host itself is very low, and most of it bound to KVM processes, which are apart from LXC.

Searching for forums, I meet mention that it is a problem, related to cgroup1 use, but it should not be cgroup1 in my system: I was use Centos7 and has to reinstall all my apps/containers from scratch before migrate from 6x to 7x, just becuase of cgroup issue.

I am tracing back this problem for at least two years, up to the depth of my zabbix history data. It was not seen to me because there was no triggers for swap too much, and I only found it now occasionally.

Pls, have a look to the 3 monthhistorical chart, it is very cuorious.

If someone has an idea about reasons of this? Any ideas about steps to find the reason? Or just set swap off for all the containers, watch the total memory and rely on the host swap management?

System details: 4 absolutely the same servers, but 2x 64GB RAM and 2x128GB RAM, VE 7.4-16, ZFS mirror root, all containers on ZFS mirrored volume, OL8 minimal image was taken from Oracle site.

Attachments

Last edited: