Hi all.

I'm at OVH: fresh install of Proxmox VE 6.4, then upgraded to 7.1.

I had to change /etc/network/interfaces to get it running.

I can now create LXC containers.

I disabled the firewall everywhere (at datacenter and container level), but networking does not work inside my containers.

On the Proxmox host itself, networking works fine.

The template I'm using is ubuntu-20.04-standard_20.04-1_amd64.tar.gz, but the same happens with CentOS 7.

I have other Proxmox 6 servers at OVH and they work as expected. The failover IP is assigned to this server and I generated a virtual MAC for it. I can't see any difference between the container configs on Proxmox 6 and Proxmox 7.
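For a failover IP on OVH, the container's `hwaddr` has to be the virtual MAC generated for that IP; traffic from any other MAC is generally not accepted upstream. Below is a minimal sketch for comparing the two, assuming you copy the MAC from the OVH manager and from `pct config` by hand. The helper names (`normalize_mac`, `macs_match`, `is_locally_administered_unicast`) and the example MACs are hypothetical. It also checks that the address is a locally administered unicast MAC (first octet with bit 0x02 set and bit 0x01 clear), which a `02:00:00:...` address like the one in this config is.

```python
def normalize_mac(mac: str) -> str:
    """Lower-case a MAC address and strip ':', '-' and '.' separators."""
    cleaned = mac.lower().replace(":", "").replace("-", "").replace(".", "")
    if len(cleaned) != 12 or any(c not in "0123456789abcdef" for c in cleaned):
        raise ValueError(f"not a MAC address: {mac!r}")
    return cleaned


def macs_match(a: str, b: str) -> bool:
    """True if both strings describe the same MAC, ignoring case and separators."""
    return normalize_mac(a) == normalize_mac(b)


def is_locally_administered_unicast(mac: str) -> bool:
    """Bit 0x02 of the first octet set = locally administered; bit 0x01 clear = unicast."""
    first_octet = int(normalize_mac(mac)[:2], 16)
    return bool(first_octet & 0x02) and not (first_octet & 0x01)


# Hypothetical values: one as shown in the OVH manager, one from net0's hwaddr= field.
panel_mac = "02:00:00:AB:CD:EF"
config_mac = "02:00:00:ab:cd:ef"
print(macs_match(panel_mac, config_mac))            # True
print(is_locally_administered_unicast(config_mac))  # True
```

If `macs_match` returns False for the real values, the container is sending from a MAC the provider does not associate with the failover IP.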


```
root@shared1:/# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 02:00:00:XX:XX:XX brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 51.XXX.XXX.XXX/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::ff:feb4:9e6f/64 scope link
       valid_lft forever preferred_lft forever
```

```
root@ns31:/mnt/# pveversion -v
proxmox-ve: 7.1-1 (running kernel: 5.13.19-4-pve)
pve-manager: 7.1-10 (running version: 7.1-10/6ddebafe)
pve-kernel-helper: 7.1-10
pve-kernel-5.13: 7.1-7
pve-kernel-5.4: 6.4-12
pve-kernel-5.13.19-4-pve: 5.13.19-8
pve-kernel-5.4.162-1-pve: 5.4.162-2
ceph-fuse: 14.2.21-1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: residual config
ifupdown2: 3.1.0-1+pmx3
libjs-extjs: 7.0.0-1
libknet1: 1.22-pve2
libproxmox-acme-perl: 1.4.1
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.1-6
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.1-2
libpve-guest-common-perl: 4.0-3
libpve-http-server-perl: 4.1-1
libpve-storage-perl: 7.0-15
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.11-1
lxcfs: 4.0.11-pve1
novnc-pve: 1.3.0-1
proxmox-backup-client: 2.1.5-1
proxmox-backup-file-restore: 2.1.5-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.4-5
pve-cluster: 7.1-3
pve-container: 4.1-3
pve-docs: 7.1-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.3-5
pve-ha-manager: 3.3-3
pve-i18n: 2.6-2
pve-qemu-kvm: 6.1.1-1
pve-xtermjs: 4.16.0-1
pve-zsync: 2.2.1
qemu-server: 7.1-4
smartmontools: 7.2-pve2
spiceterm: 3.2-2
swtpm: 0.7.0~rc1+2
vncterm: 1.7-1
zfsutils-linux: 2.1.2-pve1
```

Code:

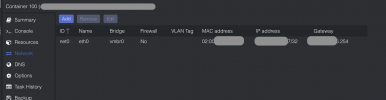

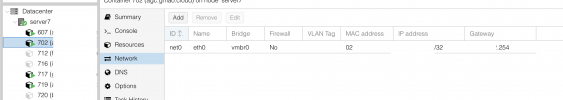

root@ns31:/mnt# pct config 100

arch: amd64

cores: 12

features: nesting=1

hostname: domain.ltd

memory: 65536

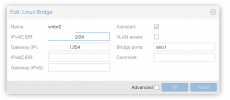

net0: name=eth0,bridge=vmbr0,gw=135.XXX.XXX.254,hwaddr=02:00:00:XX:XX:XX,ip=51.XXX.XXX.XXX/32,type=veth

ostype: ubuntu

rootfs: data0:100/vm-100-disk-0.raw,size=500G

swap: 1024

unprivileged: 1I have others servers with Proxmox 6 into OVH and works as expect. I have my failover into server, and generated virtual mac. I cannot see any difference into proxmox 6 <> 7 containers config
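In the `net0` line, the container gets a /32 address while its gateway (`135.XXX.XXX.254`) sits in a different network. A /32 prefix contains only the address itself, so Linux has no connected route to that gateway, and `ip route add default via <gw>` is rejected unless a host route to the gateway (or the `onlink` flag) exists first. The sketch below only illustrates that check with Python's standard `ipaddress` module; the addresses are hypothetical RFC 5737 documentation addresses standing in for the masked ones, and `gateway_is_on_link` is a made-up helper name. Whether the container's network setup actually installs the host route is something to confirm by running `ip route` inside the container.

```python
import ipaddress


def gateway_is_on_link(cidr: str, gateway: str) -> bool:
    """Return True if `gateway` lies inside the interface's own prefix."""
    network = ipaddress.ip_interface(cidr).network
    return ipaddress.ip_address(gateway) in network


# Hypothetical stand-ins for the masked 51.XXX.XXX.XXX/32 and 135.XXX.XXX.254.
container_ip = "203.0.113.10/32"
gateway = "198.51.100.254"

if gateway_is_on_link(container_ip, gateway):
    print("gateway is inside the subnet: a plain default route works")
else:
    # A /32 never contains the gateway, so a host route via eth0 is needed first:
    #   ip route add 198.51.100.254 dev eth0
    #   ip route add default via 198.51.100.254
    print("gateway is outside the subnet: needs an on-link host route first")
```

With the values above the check takes the second branch, which matches the situation in this container config.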
