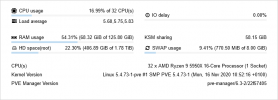

Hey, so I have a Ryzen 7 5800X and I set up KVM virtual machines for 2 clients to host their game servers.

I followed all the guides here, I have all the drivers installed, and the guest OS is Windows Server 2019 Desktop.

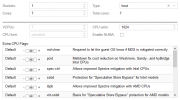

Their current KVM settings are:

Disk cache set to writeback, memory ballooning enabled, QEMU guest agent enabled, etc.

I want each of my clients to have their own 8 threads / 4 cores. That's why I set the CPU limit to 8.
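For context, here is a quick sketch of the thread budget this setup implies. It assumes the 5800X's 8 cores / 16 threads with SMT enabled and that each client's VM is given 8 vCPUs; `vcpu_budget` is just a hypothetical helper for the arithmetic, not part of any hypervisor tool.

```python
# Hypothetical helper: compare the vCPUs handed to guests with the host's hardware threads.
# Assumption: Ryzen 7 5800X with SMT enabled (8 physical cores, 16 hardware threads).
HOST_CORES = 8
HOST_THREADS = 16


def vcpu_budget(vcpus_per_vm: int, vm_count: int, host_threads: int = HOST_THREADS) -> dict:
    """Return how many host threads the VMs claim and how many are left for the host."""
    allocated = vcpus_per_vm * vm_count
    return {
        "allocated_vcpus": allocated,
        "host_threads": host_threads,
        # Threads not claimed by any guest (the host OS, I/O threads, etc. also need CPU time).
        "threads_left_for_host": host_threads - allocated,
        # 1.0 means every hardware thread is spoken for; above 1.0 means overcommitted.
        "overcommit_ratio": allocated / host_threads,
    }


budget = vcpu_budget(vcpus_per_vm=8, vm_count=2)
print(budget)
# {'allocated_vcpus': 16, 'host_threads': 16, 'threads_left_for_host': 0, 'overcommit_ratio': 1.0}
```

With two 8-vCPU guests, every hardware thread on the host is allocated, and each guest's "4 cores" are really 8 SMT threads shared with the host's own work.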

However, when my clients use these VMs, they get about the same performance as a dedicated machine with a 6700K, which isn't normal.

Both single-core and multi-core performance are poor overall.

So, can anyone give me a few ideas?