Hi there,

we got a strange perfomance draw in Disk speed.

We have a freshly installed Proxmox 8.2 (upgraded to 8.3).

- HP DL380 Gen10

- 256 GB Total RAM

- 2x6144 Xeon Gold

- 2x240 GB SSD Raid 1 for Proxmox Host

- 6x1.6 TB NVME Hardware Raid10 SupremeRaid (GraidTech)

- mounted as ext4 directory

We created one single Instance with opensuse 15.6. and the read rates from the disk reduce by approx 50%.

We did simple tests with

Test on the proxmox Host:

Same Test in VM:

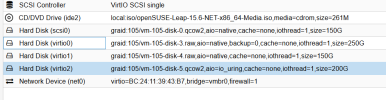

VM-Config:

We already tried with differet aio/cache settings. The read perfomance changes between 5.500 and 6.200 - but not more.

Any ideas / is there anyway to raise the VM-Disk perfomance?

A similar thread here on basic NVMe perfomance: https://forum.proxmox.com/threads/nvme-pcie-5-benchmarking.158056/

We are seeing a strange performance drop in disk speed.

We have a freshly installed Proxmox 8.2 (upgraded to 8.3).

- HP DL380 Gen10

- 256 GB Total RAM

- 2x Xeon Gold 6144

- 2x 240 GB SSD RAID 1 for the Proxmox host

- 6x 1.6 TB NVMe hardware RAID 10, SupremeRAID (GraidTech)

- mounted as ext4 directory

We created a single VM running openSUSE Leap 15.6, and the read rates from the disk drop by approx. 50% compared to the host.

We did simple tests with fio.

Test on the Proxmox host:

Code:

fio --filename=/dev/gdg0n1 --direct=1 --rw=randread --bs=64k --ioengine=libaio --iodepth=64 --runtime=20 --numjobs=4 --time_based --group_reporting --name=throughput-test-job --eta-newline=1 --readonly

throughput-test-job: (g=0): rw=randread, bs=(R) 64.0KiB-64.0KiB, (W) 64.0KiB-64.0KiB, (T) 64.0KiB-64.0KiB, ioengine=libaio, iodepth=64

...

fio-3.33

Starting 4 processes

Jobs: 4 (f=4): [r(4)][15.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:17s]

Jobs: 4 (f=4): [r(4)][25.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:15s]

Jobs: 4 (f=4): [r(4)][35.0%][r=10.7GiB/s][r=176k IOPS][eta 00m:13s]

Jobs: 4 (f=4): [r(4)][45.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:11s]

Jobs: 4 (f=4): [r(4)][55.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:09s]

Jobs: 4 (f=4): [r(4)][65.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:07s]

Jobs: 4 (f=4): [r(4)][75.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:05s]

Jobs: 4 (f=4): [r(4)][85.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:03s]

Jobs: 4 (f=4): [r(4)][95.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:01s]

Jobs: 4 (f=4): [r(4)][100.0%][r=10.8GiB/s][r=177k IOPS][eta 00m:00s]

throughput-test-job: (groupid=0, jobs=4): err= 0: pid=139578: Fri Nov 29 17:32:01 2024

read: IOPS=176k, BW=10.8GiB/s (11.6GB/s)(215GiB/20002msec)

slat (usec): min=3, max=559, avg= 4.75, stdev= 1.05

clat (usec): min=392, max=5586, avg=1445.61, stdev=372.36

lat (usec): min=400, max=5590, avg=1450.36, stdev=372.36

clat percentiles (usec):

| 1.00th=[ 611], 5.00th=[ 750], 10.00th=[ 922], 20.00th=[ 1205],

| 30.00th=[ 1319], 40.00th=[ 1385], 50.00th=[ 1450], 60.00th=[ 1500],

| 70.00th=[ 1582], 80.00th=[ 1696], 90.00th=[ 1975], 95.00th=[ 2147],

| 99.00th=[ 2278], 99.50th=[ 2311], 99.90th=[ 2409], 99.95th=[ 2606],

| 99.99th=[ 4752]

bw ( MiB/s): min=10927, max=11106, per=100.00%, avg=11030.02, stdev= 9.51, samples=156

iops : min=174832, max=177710, avg=176480.15, stdev=152.13, samples=156

lat (usec) : 500=0.02%, 750=5.04%, 1000=6.58%

lat (msec) : 2=78.96%, 4=9.37%, 10=0.03%

cpu : usr=9.96%, sys=25.38%, ctx=2308859, majf=0, minf=28397

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwts: total=3528344,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=10.8GiB/s (11.6GB/s), 10.8GiB/s-10.8GiB/s (11.6GB/s-11.6GB/s), io=215GiB (231GB), run=20002-20002msec

Disk stats (read/write):

gdg0n1: ios=3503912/6, merge=0/1, ticks=5053315/3, in_queue=5053317, util=100.00%

Same test in the VM:

Code:

fio --filename=/dev/sdb --direct=1 --rw=randread --bs=64k --ioengine=libaio --iodepth=64 --runtime=15 --numjobs=4 --time_based --group_reporting --name=throughput-test-job --eta-newline=1

throughput-test-job: (g=0): rw=randread, bs=(R) 64.0KiB-64.0KiB, (W) 64.0KiB-64.0KiB, (T) 64.0KiB-64.0KiB, ioengine=libaio, iodepth=64

...

fio-3.23

Starting 4 processes

Jobs: 4 (f=4): [r(4)][20.0%][r=6252MiB/s][r=100k IOPS][eta 00m:12s]

Jobs: 4 (f=4): [r(4)][33.3%][r=6319MiB/s][r=101k IOPS][eta 00m:10s]

Jobs: 4 (f=4): [r(4)][46.7%][r=6242MiB/s][r=99.9k IOPS][eta 00m:08s]

Jobs: 4 (f=4): [r(4)][60.0%][r=6242MiB/s][r=99.9k IOPS][eta 00m:06s]

Jobs: 4 (f=4): [r(4)][73.3%][r=6247MiB/s][r=99.9k IOPS][eta 00m:04s]

Jobs: 4 (f=4): [r(4)][86.7%][r=6254MiB/s][r=100k IOPS][eta 00m:02s]

Jobs: 4 (f=4): [r(4)][100.0%][r=6274MiB/s][r=100k IOPS][eta 00m:00s]

throughput-test-job: (groupid=0, jobs=4): err= 0: pid=14021: Fri Nov 29 17:26:10 2024

read: IOPS=100k, BW=6251MiB/s (6555MB/s)(91.6GiB/15003msec)

slat (usec): min=2, max=1151, avg= 6.44, stdev=12.10

clat (usec): min=54, max=71820, avg=2551.56, stdev=1523.41

lat (usec): min=61, max=71843, avg=2558.11, stdev=1523.43

clat percentiles (usec):

| 1.00th=[ 494], 5.00th=[ 873], 10.00th=[ 1123], 20.00th=[ 1450],

| 30.00th=[ 1713], 40.00th=[ 1975], 50.00th=[ 2245], 60.00th=[ 2540],

| 70.00th=[ 2868], 80.00th=[ 3326], 90.00th=[ 4359], 95.00th=[ 5473],

| 99.00th=[ 7701], 99.50th=[ 8586], 99.90th=[10683], 99.95th=[11994],

| 99.99th=[24249]

bw ( MiB/s): min= 5392, max= 6964, per=100.00%, avg=6259.38, stdev=62.92, samples=116

iops : min=86278, max=111430, avg=100149.90, stdev=1006.67, samples=116

lat (usec) : 100=0.01%, 250=0.10%, 500=0.93%, 750=2.23%, 1000=3.93%

lat (msec) : 2=34.05%, 4=46.02%, 10=12.56%, 20=0.15%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=6.82%, sys=20.32%, ctx=766993, majf=0, minf=2124

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwts: total=1500518,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=6251MiB/s (6555MB/s), 6251MiB/s-6251MiB/s (6555MB/s-6555MB/s), io=91.6GiB (98.3GB), run=15003-15003msec

Disk stats (read/write):

sdb: ios=1489747/0, merge=7/0, ticks=3625738/0, in_queue=3625738, util=99.50%

VM config:

Code:

agent: 1

balloon: 0

bios: seabios

boot: order=scsi0;ide2;net0

cores: 16

cpu: host

ide2: local:iso/openSUSE-Leap-15.6-NET-x86_64-Media.iso,media=cdrom,size=261M

machine: q35,viommu=intel

memory: 128000

meta: creation-qemu=9.0.2,ctime=1732894143

name: svb-server17

net0: virtio=BC:24:11:39:43:B7,bridge=vmbr0,firewall=1

numa: 1

ostype: l26

scsi0: graid:105/vm-105-disk-0.qcow2,aio=native,cache=none,iothread=1,size=150G

scsi1: graid:105/vm-105-disk-1.qcow2,aio=io_uring,cache=directsync,iothread=1,size=250G

scsi2: graid:105/vm-105-disk-2.qcow2,aio=native,backup=0,cache=directsync,size=150G

scsihw: virtio-scsi-single

smbios1: uuid=53da87cc-950f-4692-b4c9-2871c

sockets: 2

vmgenid: ee5c1e62-ffb4-40fe-8f6c-494dbbd1e3

We already tried different aio/cache settings. The read performance varies between about 5,500 and 6,200 MiB/s, but never goes higher.
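For reference, here is a small Python sketch that tabulates the aio/cache/iothread options per disk from the config above. The disk lines are copied verbatim from the config; the helper name `parse_disk_line` is only illustrative. Note that the post does not state which of these disks shows up as /dev/sdb in the guest, so the mapping to the fio run is left open.

```python
# Disk lines copied verbatim from the VM config in this post.
DISK_LINES = """\
scsi0: graid:105/vm-105-disk-0.qcow2,aio=native,cache=none,iothread=1,size=150G
scsi1: graid:105/vm-105-disk-1.qcow2,aio=io_uring,cache=directsync,iothread=1,size=250G
scsi2: graid:105/vm-105-disk-2.qcow2,aio=native,backup=0,cache=directsync,size=150G
"""


def parse_disk_line(line):
    """Split 'scsiN: volume,key=value,...' into (bus, volume, options)."""
    bus, _, spec = line.partition(": ")
    volume, *pairs = spec.split(",")
    options = dict(pair.split("=", 1) for pair in pairs)
    return bus, volume, options


disks = [parse_disk_line(line) for line in DISK_LINES.splitlines() if line.strip()]

for bus, volume, opts in disks:
    # Options missing from the line are reported as "not set".
    print(
        f"{bus}: aio={opts.get('aio', 'not set')} "
        f"cache={opts.get('cache', 'not set')} "
        f"iothread={opts.get('iothread', 'not set')} "
        f"size={opts.get('size', 'not set')}"
    )
```

This makes it easy to see that the three disks were configured differently for testing, and that scsi2 is the only one without iothread=1.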

Any ideas? Is there any way to raise the VM disk performance?

A similar thread here on basic NVMe performance: https://forum.proxmox.com/threads/nvme-pcie-5-benchmarking.158056/
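To put numbers on the gap, here is a short Python sketch using only the averages from the two fio summaries above. It computes the relative drop in bandwidth (about 43%, a little less than the rough 50% quoted at the top) and then checks the IOPS against Little's law: with 4 jobs at iodepth 64 there are 256 requests in flight, so IOPS should be roughly 256 divided by the mean latency. The function names are only illustrative, and treating the queue as always full is an assumption (fio reports the depth at >=64 for 100% of the run, so it seems reasonable).

```python
# Averages copied from the host and VM fio summaries in this post.
HOST = {"bw_mib_s": 11030.02, "iops": 176480.15, "lat_us": 1450.36}
VM = {"bw_mib_s": 6259.38, "iops": 100149.90, "lat_us": 2558.11}

# Both runs used --numjobs=4 and --iodepth=64.
OUTSTANDING_IOS = 4 * 64


def drop_percent(host_value, vm_value):
    """Relative loss of the VM figure compared to the host figure, in percent."""
    return (1.0 - vm_value / host_value) * 100.0


def iops_from_latency(outstanding, lat_us):
    """Little's law: throughput = requests in flight / mean latency."""
    return outstanding / (lat_us * 1e-6)


bw_drop = drop_percent(HOST["bw_mib_s"], VM["bw_mib_s"])
host_predicted = iops_from_latency(OUTSTANDING_IOS, HOST["lat_us"])
vm_predicted = iops_from_latency(OUTSTANDING_IOS, VM["lat_us"])

print(f"bandwidth drop in VM: {bw_drop:.1f}%")
print(f"host IOPS predicted from latency: {host_predicted:,.0f} (measured {HOST['iops']:,.0f})")
print(f"VM IOPS predicted from latency:   {vm_predicted:,.0f} (measured {VM['iops']:,.0f})")
```

Both predictions land within about 0.1% of the measured IOPS. So at this fixed queue depth the lower VM throughput corresponds directly to the higher mean per-request latency (about 2.56 ms in the VM versus 1.45 ms on the host).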