Hi all,

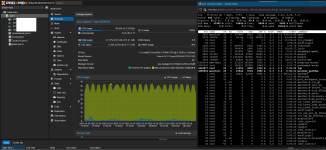

I recently upgraded Proxmox from 8 to 9 (with no errors), but since then some kind of background process seems to be taking up anywhere from 38-44% CPU on top of my other processes.

I'm not sure what this even is, as `top` doesn't show anything significant. It's also not a graphical bug: my wall-outlet power meter shows a significant increase in power draw (60-70 W idle, 80-90 W under normal load, 160 W currently).
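Since the process list in `top` shows nothing obvious, one way to see where the CPU time is going is to compare two snapshots of the aggregate `cpu` line in `/proc/stat` and break the difference down by category (user, system, iowait, irq and so on). Below is a rough sketch of that idea, assuming a Linux host with Python 3; the function names (`parse_cpu_line`, `breakdown`, `main`) are made up for this post and are not part of any Proxmox tooling.

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat (see proc(5)).
FIELDS = ("user", "nice", "system", "idle", "iowait",
          "irq", "softirq", "steal", "guest", "guest_nice")


def parse_cpu_line(line):
    """Turn the aggregate 'cpu ...' line into a {field: ticks} dict."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    return dict(zip(FIELDS, values))


def breakdown(before, after):
    """Percentage of elapsed CPU time per category between two snapshots."""
    # guest/guest_nice are already counted inside user/nice, so leave
    # them out of the total to avoid double counting.
    counted = [f for f in FIELDS if f not in ("guest", "guest_nice")]
    deltas = {f: after.get(f, 0) - before.get(f, 0) for f in counted}
    total = sum(deltas.values())
    if total <= 0:
        return {f: 0.0 for f in counted}
    return {f: 100.0 * d / total for f, d in deltas.items()}


def read_snapshot(path="/proc/stat"):
    """Read the first line of /proc/stat, which is the all-CPU summary."""
    with open(path) as fh:
        return parse_cpu_line(fh.readline())


def main(interval=5):
    """Sample twice, `interval` seconds apart, and print the breakdown."""
    first = read_snapshot()
    time.sleep(interval)
    second = read_snapshot()
    result = breakdown(first, second)
    for name, pct in sorted(result.items(), key=lambda kv: -kv[1]):
        print(f"{name:>8}: {pct:5.1f}%")
```

Calling `main()` on the host prints one percentage per category. If most of the non-idle time lands in `system`, `irq`, `softirq` or `iowait` rather than `user`, that would suggest kernel-side or I/O work rather than an ordinary process, which might explain why nothing stands out in `top`.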

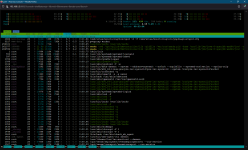

`pve8to9 --full` gives the following output:

    root@pve1:~# pve8to9 --full
    = CHECKING VERSION INFORMATION FOR PVE PACKAGES =
    Checking for package updates..
    PASS: all packages up-to-date
    Checking proxmox-ve package version..
    PASS: already upgraded to Proxmox VE 9
    Checking running kernel version..
    PASS: running new kernel '6.14.11-4-pve' after upgrade.
    = CHECKING CLUSTER HEALTH/SETTINGS =
    SKIP: standalone node.
    = CHECKING HYPER-CONVERGED CEPH STATUS =
    SKIP: no hyper-converged ceph setup detected!
    = CHECKING CONFIGURED STORAGES =
    PASS: storage 'local' enabled and active.
    PASS: storage 'local-zfs' enabled and active.
    PASS: storage 'tank' enabled and active.
    INFO: Checking storage content type configuration..
    PASS: no storage content problems found
    PASS: no storage re-uses a directory for multiple content types.
    INFO: Check for usage of native GlusterFS storage plugin...
    PASS: No GlusterFS storage found.
    INFO: Checking whether all external RBD storages have the 'keyring' option configured
    SKIP: No RBD storage configured.
    = VIRTUAL GUEST CHECKS =
    SKIP: Skipping check for running guests - already upgraded.
    INFO: Checking if LXCFS is running with FUSE3 library, if already upgraded..
    PASS: systems seems to be upgraded and LXCFS is running with FUSE 3 library
    INFO: Checking for VirtIO devices that would change their MTU...
    PASS: All guest config descriptions fit in the new limit of 8 KiB
    INFO: Checking container configs for deprecated lxc.cgroup entries
    WARN: The following CTs have 'lxc.cgroup' keys configured, which will be ignored in the new default unified cgroupv2:
        CT 102, CT 107
        Often it can be enough to change to the new 'lxc.cgroup2' prefix after the upgrade to Proxmox VE 7.x
    INFO: Checking VM configurations for outdated machine versions
    PASS: All VM machine versions are recent enough
    = MISCELLANEOUS CHECKS =
    INFO: Checking common daemon services..
    PASS: systemd unit 'pveproxy.service' is in state 'active'
    PASS: systemd unit 'pvedaemon.service' is in state 'active'
    PASS: systemd unit 'pvescheduler.service' is in state 'active'
    PASS: systemd unit 'pvestatd.service' is in state 'active'
    INFO: Checking for supported & active NTP service..
    PASS: Detected active time synchronisation unit 'chrony.service'
    INFO: Checking if the local node's hostname 'pve1' is resolvable..
    INFO: Checking if resolved IP is configured on local node..
    PASS: Resolved node IP '192.168.1.3' configured and active on single interface.
    INFO: Check node certificate's RSA key size
    PASS: Certificate 'pve-root-ca.pem' passed Debian Busters (and newer) security level for TLS connections (4096 >= 2048)
    PASS: Certificate 'pve-ssl.pem' passed Debian Busters (and newer) security level for TLS connections (2048 >= 2048)
    PASS: Certificate 'pveproxy-ssl.pem' passed Debian Busters (and newer) security level for TLS connections (4096 >= 2048)
    INFO: Checking backup retention settings..
    PASS: no backup retention problems found.
    INFO: checking CIFS credential location..
    PASS: no CIFS credentials at outdated location found.
    INFO: Checking permission system changes..
    INFO: Checking custom role IDs
    PASS: no custom roles defined
    INFO: Checking node and guest description/note length..
    PASS: All node config descriptions fit in the new limit of 64 KiB
    INFO: Checking if the suite for the Debian security repository is correct..
    PASS: found no suite mismatch
    INFO: Checking for existence of NVIDIA vGPU Manager..
    PASS: No NVIDIA vGPU Service found.
    INFO: Checking bootloader configuration...
    PASS: bootloader packages installed correctly
    INFO: Check for dkms modules...
    SKIP: could not get dkms status
    INFO: Check for legacy 'filter' or 'group' sections in /etc/pve/notifications.cfg...
    INFO: Check for legacy 'notification-policy' or 'notification-target' options in /etc/pve/jobs.cfg...
    PASS: No legacy 'notification-policy' or 'notification-target' options found!
    INFO: Check for LVM autoactivation settings on LVM and LVM-thin storages...
    PASS: No problematic volumes found.
    INFO: Checking lvm config for thin_check_options...
    PASS: Check for correct thin_check_options passed
    INFO: Check post RRD metrics data format migration situation...
    PASS: No old RRD metric files found, normally this means all have been migrated.
    INFO: Checking for IPAM DB files that have not yet been migrated.
    PASS: No legacy IPAM DB found.
    PASS: No legacy MAC DB found.
    INFO: Checking if the legacy sysctl file '/etc/sysctl.conf' needs to be migrated to new '/etc/sysctl.d/' path.
    PASS: Legacy file '/etc/sysctl.conf' is not present.
    INFO: Checking if matching CPU microcode package is installed.
    WARN: The matching CPU microcode package 'intel-microcode' could not be found! Consider installing it to receive the latest security and bug fixes for your CPU.
        apt install intel-microcode
    = SUMMARY =
    TOTAL: 42
    PASSED: 35
    SKIPPED: 5
    WARNINGS: 2
    FAILURES: 0

I'm not sure where to go from here. It's not a time sensitive issue, but an additional 80W over a long period of time is a significant bump in electricity costs to me.

Thanks in advance.