Absolutely, but I think the context is sometimes forgotten. Often the people trying to help live in a different world and speak a different language than the newbies looking for help, who have different requirements and risk profiles. I make this mistake all the time myself when I try to help people in other domains where I am more of the expert.

> That all really depends on your workload and setup. My home server is running 20 VMs, and while idling they write 900 GB per day, most of it just logs/metrics created by the VMs themselves. Sum that up and the TBW rating of a 1 TB consumer SSD will be exceeded within a year.
>
> If you are not using any DBs doing a lot of small sync writes, and if you skip RAID/ZFS and just use a single SSD with LVM, it might survive for many years.
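
To put rough numbers on the 900 GB/day figure quoted above, here is a minimal sketch of the endurance arithmetic. The TBW rating is a hypothetical placeholder for a 1 TB consumer drive (ratings vary a lot by model, so check your drive's datasheet), and the write-amplification factor is an assumed multiplier for the extra writes that the filesystem, RAID/ZFS and the SSD itself add on top of what the guests write.

```python
def yearly_writes_tb(gb_per_day: float) -> float:
    """Total data written per year in TB (decimal units: 1 TB = 1000 GB)."""
    return gb_per_day * 365 / 1000


def days_until_tbw(gb_per_day: float, tbw_tb: float,
                   write_amplification: float = 1.0) -> float:
    """Days until the rated TBW is used up at a constant daily write volume.

    write_amplification is an ASSUMED multiplier for extra writes
    (filesystem, RAID/ZFS, SSD-internal) on top of what the VMs issue.
    """
    effective_gb_per_day = gb_per_day * write_amplification
    return tbw_tb * 1000 / effective_gb_per_day


if __name__ == "__main__":
    daily_gb = 900.0    # idle write volume from the quoted post
    rated_tbw = 360.0   # HYPOTHETICAL TBW rating for a 1 TB consumer SSD
    print(f"{yearly_writes_tb(daily_gb):.1f} TB written per year")
    for waf in (1.0, 2.0, 3.0):
        days = days_until_tbw(daily_gb, rated_tbw, waf)
        print(f"write amplification {waf:.0f}x: TBW reached after {days:.0f} days")
```

With these assumed numbers, 900 GB/day is about 328.5 TB per year, so the raw guest writes alone use up a 360 TBW rating in 400 days, and any write amplification above roughly 1.1x pushes that inside a single year. Plug in your own drive's rating and measured daily writes to see where you land.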

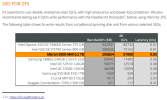

That right there is what I'm talking about. Most people who just want to set this up for a small-scale home server have no clue whether they will be running stuff that only generates "small bursts of sequential async reads/writes". They just want a couple of VMs, say a Windows 10 machine and maybe a Home Assistant instance or whatnot, plus maybe some LXCs for a few services. They don't know what sequential async reads/writes are or what generates them.

> Again, it's all about the workload. Consumer SSDs are great if you need small bursts of sequential async reads/writes, but performance will drop massively as soon as the cache fills up under long sustained loads. In such a case an enterprise SSD will be much faster because its performance won't drop as hard. And most consumer SSDs won't be able to use their cache at all for sync writes, so there the performance is always horrible.
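
The cache effect described in the quote can be illustrated with a deliberately simplified model: the drive writes at a fast rate until its write cache is full, then falls back to a much slower sustained rate. All sizes and speeds below are hypothetical placeholders rather than measurements of any real drive, and the model ignores the cache being drained in the background during the write.

```python
def sustained_write_seconds(total_gb: float, cache_gb: float,
                            cached_gb_s: float, uncached_gb_s: float) -> float:
    """Time to write total_gb when only the first cache_gb go at the cached rate."""
    fast_part = min(total_gb, cache_gb)   # portion absorbed by the cache
    slow_part = total_gb - fast_part      # remainder written at the post-cache rate
    return fast_part / cached_gb_s + slow_part / uncached_gb_s


def average_throughput_gb_s(total_gb: float, cache_gb: float,
                            cached_gb_s: float, uncached_gb_s: float) -> float:
    """Average throughput over the whole write under the same simple model."""
    return total_gb / sustained_write_seconds(total_gb, cache_gb,
                                              cached_gb_s, uncached_gb_s)


if __name__ == "__main__":
    # HYPOTHETICAL consumer SSD: 20 GB cache, 2.0 GB/s cached, 0.5 GB/s after
    cache, fast, slow = 20.0, 2.0, 0.5
    for size in (5.0, 20.0, 100.0):
        secs = sustained_write_seconds(size, cache, fast, slow)
        avg = average_throughput_gb_s(size, cache, fast, slow)
        print(f"{size:>5.0f} GB: {secs:6.1f} s, average {avg:.2f} GB/s")
```

In this toy model, a 5 GB burst finishes at the full cached speed, while a sustained 100 GB write takes 170 s and averages under 0.6 GB/s. The sync-write behaviour mentioned in the quote is a separate effect that this model does not try to capture.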

And please don't misunderstand... I think everyone appreciates the time people like yourself put into replying and helping with issues here. It's just that the context is really important to recognize on both ends.
