Can you please post the complete path of the 2TB file? Your screenshots show whitespace. Personally, I don't think it's a good idea to try to be smarter than the kernel (see the links I posted earlier). Normally I would expect the kernel/qemu to free the cache if more RAM is needed. Since you have more than enough free RAM, I would assume that the kernel keeps the file in cache until the remaining free RAM is exhausted. And (as explained in the links) this behaviour is not only expected but actually a good thing: the kernel can and will use a large amount of RAM for caching, so file access doesn't need to read from disk storage, which is slower than RAM.
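To check whether the RAM in question really is reclaimable cache, it can help to compare the kernel's own numbers. Below is a minimal sketch, assuming a Linux host with a readable `/proc/meminfo`; the function names `parse_meminfo` and `summarize` are made up for illustration. If `MemAvailable` stays high while `MemFree` is low, the memory is most likely cache that the kernel can hand back on demand. Note that `Cached` also counts shared memory/tmpfs, which is not simply dropped, so treat the cache figure as a rough indicator only.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values.

    Most fields are reported in kB; a few (e.g. HugePages_Total) are
    plain counts. Only kB fields are used by summarize() below.
    """
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines without a "Key: value" shape
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def summarize(info):
    """Return free, cache and available memory in GiB."""
    kib_per_gib = 1024 * 1024
    # Buffers + Cached is a rough estimate of the page cache.
    cache_kib = info.get("Buffers", 0) + info.get("Cached", 0)
    return {
        "free_gib": info.get("MemFree", 0) / kib_per_gib,
        "cache_gib": cache_kib / kib_per_gib,
        # MemAvailable is the kernel's estimate of memory usable
        # for new workloads without swapping.
        "available_gib": info.get("MemAvailable", 0) / kib_per_gib,
    }


if __name__ == "__main__":
    with open("/proc/meminfo") as f:
        summary = summarize(parse_meminfo(f.read()))
    for name, value in summary.items():
        print(f"{name}: {value:.1f}")
```

Running this before and after deleting a VM would show whether the extra usage lands in the cache figure or reduces `MemAvailable`; the latter would suggest it is not ordinary cache.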

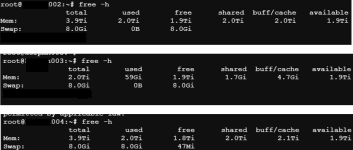

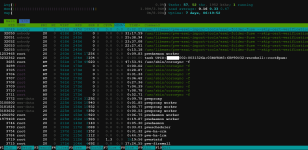

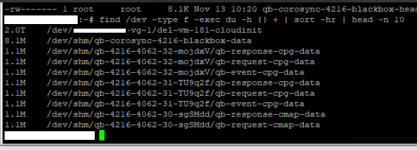

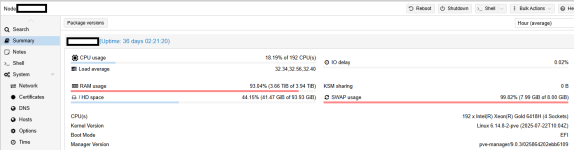

The whitespace is just the name of the storage it resides on. I'd rather not disclose this information on a public forum. It could be, for example, "/dev/netapp-vg-1/del-vm-181-cloudinit". Well, I got to a point where I didn't have sufficient RAM to run all VM workloads. I had roughly 85GB of RAM available and still had to migrate a 150GB VM. It's not something I like to test; these hosts run around 150 VMs each.

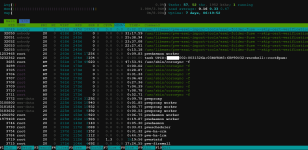

I had this behavior on 2 different hosts. On both hosts, I deleted a VM, and then it kicked in. I will pay close attention the next time I delete a VM, but it's very likely this will occur again.

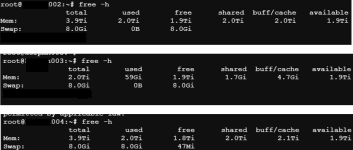

The first time, I moved all VMs to 2 hosts out of 3. As my 3rd host also had this RAM usage by the del-vm*, I didn't have enough RAM to run all the VMs. Luckily, I could power down some of them temporarily and reboot the host. After the reboot, the RAM usage was fine and the file was deleted.

On the other host, I manually deleted the file that was holding the 2TB of RAM. This took a bit of time, but afterwards the RAM usage was cleared.

So the results were the same, except that on the 2nd attempt I didn't have to move all the VMs to another host, which takes a bit of time when you have 150 VMs.