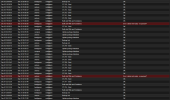

1298:Dec 01 22:11:52 canopus systemd[1]: Started corosync.service - Corosync Cluster Engine.

1316:Dec 01 22:11:57 canopus pmxcfs[1035]: [status] notice: update cluster info (cluster name local-group, version = 3)

1317:Dec 01 22:11:57 canopus pmxcfs[1035]: [dcdb] notice: members: 3/1035

1318:Dec 01 22:11:57 canopus pmxcfs[1035]: [dcdb] notice: all data is up to date

1319:Dec 01 22:11:57 canopus pmxcfs[1035]: [status] notice: members: 3/1035

1320:Dec 01 22:11:57 canopus pmxcfs[1035]: [status] notice: all data is up to date

1321:Dec 01 22:12:23 canopus pvecm[1188]: got timeout when trying to ensure cluster certificates and base file hierarchy is set up - no quorum (yet) or hung pmxcfs?

1338:Dec 01 22:12:24 canopus systemd[1]: Starting pve-guests.service - PVE guests...

1339:Dec 01 22:12:24 canopus pve-guests[1389]: <root@pam> starting task UPID:canopus:00000572:00000F4F:674D0978:startall::root@pam:

1341:Dec 01 22:12:33 canopus kernel: e1000e 0000:00:1f.6 enp0s31f6: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None

1342:Dec 01 22:12:33 canopus kernel: vmbr0: port 1(enp0s31f6) entered blocking state

1343:Dec 01 22:12:33 canopus kernel: vmbr0: port 1(enp0s31f6) entered forwarding state

1344:Dec 01 22:12:33 canopus kernel: vmbr0v40: port 1(enp0s31f6.40) entered blocking state

1345:Dec 01 22:12:33 canopus kernel: vmbr0v40: port 1(enp0s31f6.40) entered forwarding state

1346:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] link: Resetting MTU for link 0 because host 2 joined

1347:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)

1348:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] pmtud: PMTUD link change for host: 2 link: 0 from 469 to 1397

1349:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] pmtud: Global data MTU changed to: 1397

1350:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] rx: host: 1 link: 0 is up

1351:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] link: Resetting MTU for link 0 because host 1 joined

1352:Dec 01 22:12:38 canopus corosync[1133]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

1353:Dec 01 22:12:38 canopus corosync[1133]: [QUORUM] Sync members[3]: 1 2 3

1354:Dec 01 22:12:38 canopus corosync[1133]: [QUORUM] Sync joined[2]: 1 2

1355:Dec 01 22:12:38 canopus corosync[1133]: [TOTEM ] A new membership (1.148) was formed. Members joined: 1 2

1356:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: members: 1/1553, 2/1562, 3/1035

1357:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: starting data syncronisation

1358:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: members: 1/1553, 2/1562, 3/1035

1359:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: starting data syncronisation

1360:Dec 01 22:12:38 canopus corosync[1133]: [QUORUM] This node is within the primary component and will provide service.

1361:Dec 01 22:12:38 canopus corosync[1133]: [QUORUM] Members[3]: 1 2 3

1362:Dec 01 22:12:38 canopus corosync[1133]: [MAIN ] Completed service synchronization, ready to provide service.

1363:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: node has quorum

1364:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: received sync request (epoch 1/1553/00000003)

1365:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: received sync request (epoch 1/1553/00000003)

1366:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: received all states

1367:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: leader is 1/1553

1368:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: synced members: 1/1553, 2/1562

1369:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: waiting for updates from leader

1370:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: received all states

1371:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: all data is up to date

1372:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: dfsm_deliver_queue: queue length 3

1373:Dec 01 22:12:38 canopus pmxcfs[1035]: [status] notice: received log

1374:Dec 01 22:12:38 canopus pmxcfs[1035]: [main] notice: ignore insert of duplicate cluster log

1375:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: update complete - trying to commit (got 7 inode updates)

1376:Dec 01 22:12:38 canopus pmxcfs[1035]: [dcdb] notice: all data is up to date

1377:Dec 01 22:12:39 canopus kernel: e1000e 0000:00:1f.6 enp0s31f6: NIC Link is Down

1378:Dec 01 22:12:39 canopus kernel: vmbr0: port 1(enp0s31f6) entered disabled state

1379:Dec 01 22:12:39 canopus kernel: vmbr0v40: port 1(enp0s31f6.40) entered disabled state

1381:Dec 01 22:12:39 canopus pve-guests[1389]: <root@pam> end task UPID:canopus:00000572:00000F4F:674D0978:startall::root@pam: OK

1382:Dec 01 22:12:39 canopus systemd[1]: Finished pve-guests.service - PVE guests.

1384:Dec 01 22:12:40 canopus corosync[1133]: [KNET ] link: host: 2 link: 0 is down

1385:Dec 01 22:12:40 canopus corosync[1133]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)

1386:Dec 01 22:12:40 canopus corosync[1133]: [KNET ] host: host: 2 has no active links

1387:Dec 01 22:12:41 canopus corosync[1133]: [KNET ] link: host: 1 link: 0 is down

1388:Dec 01 22:12:41 canopus corosync[1133]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

1389:Dec 01 22:12:41 canopus corosync[1133]: [KNET ] host: host: 1 has no active links

1390:Dec 01 22:12:41 canopus corosync[1133]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

1391:Dec 01 22:12:41 canopus corosync[1133]: [KNET ] host: host: 1 has no active links

1392:Dec 01 22:12:41 canopus corosync[1133]: [TOTEM ] Token has not been received in 2737 ms

1393:Dec 01 22:12:42 canopus corosync[1133]: [TOTEM ] A processor failed, forming new configuration: token timed out (3650ms), waiting 4380ms for consensus.

1394:Dec 01 22:12:42 canopus kernel: e1000e 0000:00:1f.6 enp0s31f6: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None
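
To make the pattern in this excerpt easier to see, here is a minimal Python sketch that pulls the kernel "NIC Link is Up/Down" lines out of a journal dump and reports how long the interface stayed up before dropping again. The function names `link_events` and `up_durations` are made up for illustration, the optional leading `NNNN:` prefix is assumed to be `grep -n` output, and the `year` default is an assumption because the journal timestamps carry no year.

```python
import re
from datetime import datetime

# Matches kernel lines such as
# "1377:Dec 01 22:12:39 canopus kernel: e1000e 0000:00:1f.6 enp0s31f6: NIC Link is Down"
# The leading "1377:" prefix is optional.
LINK_RE = re.compile(
    r"^(?:\d+:)?(?P<ts>\w{3} \d{2} \d{2}:\d{2}:\d{2}) \S+ kernel: "
    r".*? (?P<iface>\S+): NIC Link is (?P<state>Up|Down)"
)


def link_events(lines, year=2024):
    """Return (timestamp, interface, state) for every NIC link message.

    `year` is an assumption: the log lines themselves do not include one.
    """
    events = []
    for line in lines:
        m = LINK_RE.match(line.strip())
        if m:
            ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
            events.append((ts, m["iface"], m["state"]))
    return events


def up_durations(events):
    """Return (interface, seconds) for each Up period that ended in a Down."""
    last_up = {}
    durations = []
    for ts, iface, state in events:
        if state == "Up":
            last_up[iface] = ts
        elif iface in last_up:
            durations.append((iface, (ts - last_up.pop(iface)).total_seconds()))
    return durations
```

Fed the lines above, this reports that `enp0s31f6` came up at 22:12:33 and went down again 6 seconds later at 22:12:39, just before corosync marks the links to hosts 1 and 2 as down.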