Hi,

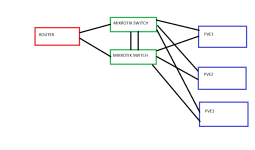

I've got two NICs on each of my Proxmox servers, bonded together with 802.3ad (LACP), with the bond attached to my vmbr bridge and connected to a high-speed switch. I have a spare third NIC on each server that I would like to connect to a lower-speed switch as a failover, just in case the high-speed switch fails or is rebooted. Is this possible? I can't run 802.3ad across switches.
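To be clear about the behaviour I'm after, here's a rough Python model of it. This isn't Proxmox or kernel code. The names `bond0` (the existing LACP bond) and `eno3` (the spare NIC) are just placeholders, and `Link`, `select_active` and `simulate` are made-up helpers for illustration:

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Link:
    name: str  # placeholder interface name (hypothetical: "bond0" or "eno3")
    up: bool   # True while the link and the switch behind it are usable


def select_active(primary: Link, backup: Link) -> Optional[str]:
    """Use the primary (fast LACP bond) whenever it is up, else the backup NIC."""
    if primary.up:
        return primary.name
    if backup.up:
        return backup.name
    return None  # both paths down: no connectivity


def simulate(states: List[Tuple[bool, bool]]) -> List[Optional[str]]:
    """Replay (primary_up, backup_up) snapshots and report the active link for each."""
    return [
        select_active(Link("bond0", p), Link("eno3", b))
        for p, b in states
    ]


if __name__ == "__main__":
    # Fast switch healthy -> rebooting -> back again
    timeline = [(True, True), (False, True), (True, True)]
    print(simulate(timeline))  # ['bond0', 'eno3', 'bond0']
```

Falling back to the spare NIC and then returning to the preferred link once it recovers is how Linux bonding's active-backup mode behaves when a `primary` is set (with the default `primary_reselect=always`). Whether that can sit on top of the existing 802.3ad bond is the open question.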

Thanks

Chris