Hey Proxmox Forum,

I backed up one of my VMs on an old node and moved the backup file to a new server.

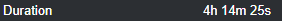

I began the restore this morning around 8:50 AM, and now, at 1:38 PM, it shows "Progress 100%", but only for read.

Before that, things were taking egregiously long, up to 5 minutes per GB.

What could be causing this?

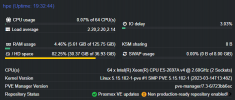

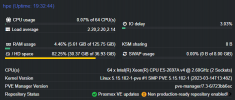

I am restoring this VM onto a 1 TB SSD RAID 5 array. The HDD storage is only where the backup file is stored for now, which is why it is full.

The VM disk size is only set to 80 GB, and it should only be using 40-50 GB of that.