I tried to remove all OSDs from a cluster and recreate them, but two of them are still stuck in the Ceph configuration database.

I have done all the standard commands to remove them, but the reference stays.

Code:

# ceph osd crush remove osd.1

removed item id 1 name 'osd.1' from crush map

# ceph osd crush remove osd.0

removed item id 0 name 'osd.0' from crush map

# ceph auth del osd.1

# ceph auth del osd.0

# ceph osd rm 1

removed osd.1

# ceph osd rm 0

removed osd.0

References to these OSDs no longer exist in ceph.conf either, and their directories no longer exist.
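For anyone wanting to repeat the sequence above for several OSD ids, here is a minimal dry-run sketch. It only prints the commands rather than running them, and `osd_removal_cmds` is a hypothetical helper name I made up, not a ceph subcommand.

```shell
#!/bin/sh
# Dry run: print the removal commands for one OSD id instead of executing them.
# osd_removal_cmds is a hypothetical helper name, not part of the ceph CLI.
osd_removal_cmds() {
    id="$1"
    printf 'ceph osd crush remove osd.%s\n' "$id"
    printf 'ceph auth del osd.%s\n' "$id"
    printf 'ceph osd rm %s\n' "$id"
}

# Print the sequence for the two OSDs from this post.
for id in 0 1; do
    osd_removal_cmds "$id"
done
```

Review the printed lines first; to actually run them, the output could be piped to `sh` on a node with admin access.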

I see there are commands to dump the database:

Code:

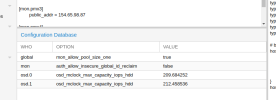

# ceph config dump

WHO MASK LEVEL OPTION VALUE RO

global advanced mon_allow_pool_size_one true

mon advanced auth_allow_insecure_global_id_reclaim false

osd.0 basic osd_mclock_max_capacity_iops_hdd 209.684252

osd.1 basic osd_mclock_max_capacity_iops_hdd 212.458536

But are there none that will delete entries from the database?

How do I remove these two OSDs?
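To make the leftover entries easier to spot, here is a rough sketch that filters the dump text for per-OSD rows and prints one candidate removal line for each. It works on the sample output pasted above, not on a live cluster. `stale_osd_cmds` is a hypothetical helper name, and `ceph config rm <who> <option>` is my assumption of the matching subcommand (check `ceph config -h` before relying on it); I have not run it here.

```shell
#!/bin/sh
# Sample input copied from the `ceph config dump` output in this post.
dump='WHO MASK LEVEL OPTION VALUE RO
global advanced mon_allow_pool_size_one true
mon advanced auth_allow_insecure_global_id_reclaim false
osd.0 basic osd_mclock_max_capacity_iops_hdd 209.684252
osd.1 basic osd_mclock_max_capacity_iops_hdd 212.458536'

# stale_osd_cmds (hypothetical helper): read dump text on stdin and print one
# candidate "ceph config rm WHO OPTION" line per row whose WHO is osd.<number>.
# Assumes the MASK column is empty, so the option name is the third field.
stale_osd_cmds() {
    awk '$1 ~ /^osd\.[0-9]+$/ { print "ceph config rm", $1, $3 }'
}

# Nothing is executed against the cluster; the lines are only printed.
printf '%s\n' "$dump" | stale_osd_cmds
```

On a real cluster the input would come from `ceph config dump` instead of the pasted sample, and rows with a non-empty MASK would need different field handling.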