Dear All,

We are using Proxmox 8 EE. When checking the Ceph status we see 2 OSDs down. Is this normal, or does it indicate a serious problem with the hard disks?

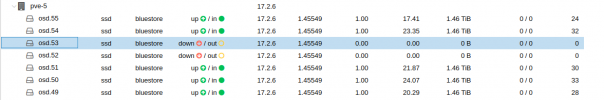

Should we assume there is a problem with the disks? A screenshot and the command-line status are included.

Guidance requested


    root@pve-5:~# pveceph status
      cluster:
        id:     6346d7b8-713e-4a84-be38-7fd483f49da0
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum pve-1,pve-2,pve-3 (age 11d)
        mgr: pve-1(active, since 11d)
        osd: 56 osds: 54 up (since 11m), 54 in (since 72m)

      data:
        pools:   2 pools, 513 pgs
        objects: 1.49M objects, 5.5 TiB
        usage:   16 TiB used, 62 TiB / 79 TiB avail
        pgs:     513 active+clean

      io:
        client:   0 B/s rd, 4.9 MiB/s wr, 0 op/s rd, 149 op/s wr
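To make the numbers in the `osd:` line explicit, here is a minimal Python sketch that reads the line and works out how many OSDs are down and out. The function name `summarize_osd_line` is made up for illustration, and the regular expression assumes the line has exactly the shape shown in the status output above. It only counts; it says nothing about why the OSDs are down.

```python
import re


def summarize_osd_line(line: str) -> dict:
    """Parse an 'osd:' summary line as printed in the status output.

    Hypothetical helper: assumes the form
    'osd: N osds: U up (...), I in (...)'.
    """
    match = re.search(r"(\d+) osds: (\d+) up.*?(\d+) in", line)
    if match is None:
        raise ValueError(f"unrecognized osd line: {line!r}")
    total, up, in_count = map(int, match.groups())
    return {
        "total": total,
        "up": up,
        "in": in_count,
        "down": total - up,       # OSDs whose daemon is not running/reachable
        "out": total - in_count,  # OSDs no longer receiving data
    }


line = "osd: 56 osds: 54 up (since 11m), 54 in (since 72m)"
summary = summarize_osd_line(line)
print(summary)
```

With the line from our cluster this gives 2 down and 2 out, which matches what the screenshot shows.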

Thanks

Joseph John