@Dunuin, first of all, thank you for the extensive answer.

Just run two benchmarks yourself on a consumer and an enterprise SSD with the same type of NAND, the same interface, the same manufacturer and the same size.

This is virtually impossible to do, as you must be aware. I had difficulty comparing the Kingstons above: to match the consumer NVMe drive I would need to take something U.3 from them (I think there is none), or there was an NVMe drive they meant as a server boot drive, but Gen3 to begin with, and that's about it. Even then, the controller would not be the same, nor the amount of DRAM, etc.

One benchmark does 4K random async writes, and the other does 4K random sync writes. Every consumer SSD will be terribly slow doing these sync writes while being fast doing async writes. The enterprise SSD will be fast at both and 100 times faster than the consumer SSD when doing sync writes. That's all about the PLP: no consumer SSD can cache sync writes in volatile DRAM, while the DRAM cache of the enterprise SSD isn't volatile because it is backed by the PLP, so the DRAM write cache can be quickly dumped to the non-volatile SLC cache while running on backup power.
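For anyone who wants to try the two benchmarks described above, here is a minimal Python sketch, not a replacement for fio. Assumptions: a Linux/Unix system (it uses `os.pwrite`), and a hypothetical test file path that you must place on the SSD under test. It issues 4K writes at random offsets, once with an fsync after every write (roughly what fio's `fsync=1` option does) and once with a single fsync at the end. It goes through the OS page cache instead of using direct I/O, so the async number mostly measures RAM; fio with direct I/O remains the better tool for real numbers.

```python
import os
import random
import tempfile
import time

BLOCK = 4096                   # 4K writes, as in the benchmark described above
FILE_SIZE = 64 * 1024 * 1024   # 64 MiB test file (arbitrary choice)


def random_write_iops(path: str, count: int, sync: bool) -> float:
    """Issue `count` 4K writes at random 4K-aligned offsets; return writes per second.

    sync=True:  fsync after every write, so each write must be durable before
                the next one starts (queue depth 1).
    sync=False: writes stay in the page cache; one fsync at the very end.
    """
    buf = os.urandom(BLOCK)
    blocks = FILE_SIZE // BLOCK
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, FILE_SIZE)
        start = time.perf_counter()
        for _ in range(count):
            os.pwrite(fd, buf, random.randrange(blocks) * BLOCK)
            if sync:
                os.fsync(fd)
        if not sync:
            os.fsync(fd)  # flush once so both runs end with data on the device
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return count / elapsed


if __name__ == "__main__":
    # Hypothetical location: point this at a file on the SSD you want to test.
    path = os.path.join(tempfile.gettempdir(), "plp_test.bin")
    print(f"async: {random_write_iops(path, 500, sync=False):.0f} IOPS")
    print(f"sync:  {random_write_iops(path, 500, sync=True):.0f} IOPS")
    os.remove(path)
```

If the claim above holds, a drive without PLP should show a large gap between the two lines, while a PLP drive should show a much smaller one.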

Although I do not have a good way to test this, I will take it as something I might agree* on: it is a reasonable explanation within that hypothetical environment, and it sounds logical in terms of how sync writes could work on an SSD with capacitors, that is, until I fill up the DRAM, for instance.

I do not want to argue about synthetic tests (doing mostly 4K sync writes is one such test, as is filling up the DRAM), however, because the entire premise of this thread, and arguably of this entire forum, is that we are talking homelabs. An average homelab does not resemble a DC server rack much in terms of use case. So everything I have posted so far took that into consideration, which I believe I made clear.

And you probably know that sync writes will shred NAND while async writes are not that bad. With PLP, those heavy sync writes will be handled internally more like async writes by the enterprise SSD, therefore causing less wear.

My use of the term "shred", admittedly inaccurate, was in the sense of "consuming the drive"; it was all about biting off the TBW. In this sense, sync or async does not matter. As I do not want to detract from the original topic, I will stay away from ZFS or any other specific filesystem, its parameters, and how sync/async would impact the drive's lifespan. It will, I am aware of that, but it's not as if a non-PLP drive will be "shredded" in a homelab much more than a PLP one.
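Since the argument here is about how fast the TBW gets used up, a quick back-of-the-envelope calculation may help. This is a sketch under stated assumptions: the TBW rating, daily write volume and the `amplification` multiplier are all hypothetical inputs, where `amplification` stands for any extra write amplification you believe a given write pattern (e.g. heavy sync writes on a non-PLP drive) adds beyond what the rating already assumes.

```python
def years_until_tbw(tbw_tb: float, host_gb_per_day: float, amplification: float = 1.0) -> float:
    """Years until a drive's rated TBW is used up.

    tbw_tb:          endurance rating from the datasheet, in TB written (1 TB = 1000 GB).
    host_gb_per_day: what the host writes per day, in GB.
    amplification:   hypothetical extra amplification multiplier (1.0 = none).
    """
    gb_per_year = host_gb_per_day * 365 * amplification
    return tbw_tb * 1000 / gb_per_year


# Hypothetical homelab numbers: a 600 TBW drive receiving 50 GB/day.
print(f"{years_until_tbw(600, 50):.1f}")        # 32.9
print(f"{years_until_tbw(600, 50, 3.0):.1f}")   # 11.0
```

Even a tripled write volume leaves years of rated endurance at these assumed numbers; the outcome obviously depends on your actual daily writes.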

An SSD without PLP is like running a HW RAID card without BBU+cache. So if you say enterprise SSDs aren't worth the money, would you also argue that a HW RAID without BBU+cache is a better choice for a homelab server?

For a homelab server in 2024, the RAID-anything debate has been stale for a long time, let alone HW cards. I know it's not your point here, but the example is off, because even if we lived in the 2000s and someone was debating HW RAID for a homelab, they would be told to just use mdadm with an HBA anyway.

The reason I mention this is that maybe in a few years' time the whole "enterprise vs consumer" SSD debate will be equally silly.

But the point is that sync writes have to be safe 100% of the time. You are not allowed to cache them in volatile memory. So the only thing you can do with a consumer SSD is skip the DRAM cache, write them directly to the SLC cache and reorder the blocks there. And the SLC cache is slow and will wear with every write. It would be way faster and cause less wear if you had PLP, so you could cache and reorder those writes in the DRAM cache, which doesn't wear with each write, and then do a single optimized write from DRAM to the NAND.
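The coalescing argument above can be illustrated with a deliberately simplified toy model, not a description of any real SSD firmware. Assumptions: one 4K write maps to one NAND page program, a non-PLP drive must program every sync write before ACKing it, and a PLP drive holds a hypothetical `cache_slots` worth of dirty pages in DRAM, where rewrites of the same logical block simply overwrite each other before the flush.

```python
from typing import Sequence


def nand_programs(lbas: Sequence[int], plp: bool, cache_slots: int = 64) -> int:
    """Count NAND page programs for a stream of 4K sync writes (toy model).

    plp=False: every sync write is programmed to NAND before the ACK.
    plp=True:  writes are ACKed from DRAM; only distinct dirty LBAs are
               programmed, whenever the cache fills and once at the end.
    """
    if not plp:
        return len(lbas)
    programs = 0
    dirty = set()
    for lba in lbas:
        dirty.add(lba)  # a rewrite of the same LBA costs nothing extra
        if len(dirty) >= cache_slots:
            programs += len(dirty)  # flush: one program per distinct LBA
            dirty.clear()
    return programs + len(dirty)  # final flush


hot = [i % 8 for i in range(1000)]   # DB-like: the same 8 blocks rewritten
spread = list(range(1000))           # every write hits a new block
print(nand_programs(hot, plp=False))     # 1000
print(nand_programs(hot, plp=True))      # 8
print(nand_programs(spread, plp=True))   # 1000
```

The model also shows the flip side: when the workload does not keep rewriting the same blocks, the DRAM cache has nothing to coalesce and the advantage disappears.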

I am allowed to do anything, really. It's a homelab, and I have a backup. Say we are talking about an iSCSI LUN: whatever non-PLP thing is behind it, the syncs will be async. With ZFS, which is terrible for anything sync, nothing prevents one from putting the ZIL on a separate SLOG device (most of us do not need it); someone might have been using Optane for that for a while (Optane is now dead for a reason), stayed with consumer SSDs for the pool and gone crazy on sync writes too. Had this discussion happened some time ago, someone would have chipped in to encourage us to go buy used ZeusRAMs for our homelab.

I think these sort-of-elitist debates have to stop. This is a homelab discussion, and this is arguably a homelab-majority forum.

Don't trust any manufacturer's datasheets. They don't do sync writes. Run your own fio benchmarks.

My homelab is not doing a fio-style barrage of sync writes. Is yours? I just want to be clear that I agree with what you say on a technical level, but it's not relevant in the real homelab world. There are not that many writes going on in a homelab to begin with, or even on a corporate webserver.

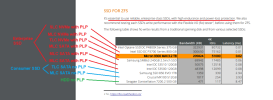

The official Proxmox ZFS benchmark paper, for example, did sync writes, and it's not hard to identify the SSDs without PLP just by looking at the IOPS performance:

https://www.proxmox.com/en/downloads/item/proxmox-ve-zfs-benchmark-2020

This is now a stale document: no one uses those consumer drives anymore, the EVOs are not PROs, etc. I don't want to sound like I disregard it because it's Proxmox-branded, but it clearly had an agenda at the time. It is very specific to ZFS and makes a point that is valid in a real datacentre, not in a homelab, and not with 2024 (or even Gen3+) drives.

It's not that a sync write benchmark of enterprise SSDs is faster because the NAND is faster. NAND performance is the same between comparable consumer/enterprise models. It's just that you are benchmarking the SSD's internal DRAM with the enterprise SSD and the NAND with the consumer SSD, as the consumer SSD simply can't use its DRAM for this.

This is a skewed interpretation; of course consumer SSDs can and do use DRAM, unless you force them not to. That is fine if you want to prove a point about the technical difference, but it is not relevant for a homelab use case.

Consumer SSDs are fine as long as you only do bursts of async sequential writes. Then they are even faster than Enterprise SSDs.

It seems to me that we agree that for homelab use they might actually be even "faster" after all.

As soon as you have sync or continuous writes, they really suck. So they are great for workloads like gaming but not good for running something like a DB.

Which DB with an actually heavy write workload is the most common in homelab use?

See, for example, here why DRAM is needed to optimize writes and lower write amplification, and why PLP is needed to protect the contents of the DRAM cache:

https://image-us.samsung.com/Samsun...rce_WHP-SSD-POWERLOSSPROTECTION-r1-JUL16J.pdf

*Alright, I am at a loss with this source, even considering your own reasoning above, which I had just agreed sounded reasonable. Because a "technical marketing specialist" at Samsung in 2016 writes, first of all, that:

"Usually SSDs send an acknowledgement to the OS that the data has been written once it has been committed to DRAM. In other words, the OS considers that the data is now safe, even though it hasn’t been written to NAND yet."

So he actually wants to push sales of his PLP SSDs as being better for data integrity, with nothing about performance.

He talks about the FTL, but again, only in the context that, should you buy a non-PLP drive, a "power loss may corrupt all data". I do not know if this was true in maybe the early 2010s, but I have never had this happen, and I have some drives with quite high unsafe shutdown counters.

He then goes on to say that consumer (client) SSDs are actually fine because they flush the FTL regularly and have a journal. He makes a terrible case for his cause, because he says that the power loss and a small amount of data loss do not actually matter that much since it's nothing new (compared to HDDs) and there is filesystem journalling for that in the consumer space, as if there were no journalling in a DC.

Journaling of consumer SSDs will only protect the Flash Translation Layer from corruption. The cached data will still be lost, so the sync write has to skip the RAM, write directly to the NAND and only return an ACK once the data has been written to the NAND. The enterprise SSD with PLP is so fast at sync writes because it can actually use the DRAM and send an ACK once the data hits the DRAM, while it has not been written to NAND yet.
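Why the ACK point matters so much can be shown with simple arithmetic: at queue depth 1, the next sync write cannot start until the previous one is acknowledged, so IOPS is just the inverse of the per-write ACK latency. The sketch below assumes exactly that; the latency values are placeholders chosen only to show how a 100x latency gap turns into a 100x IOPS gap, not measurements of any drive. Plug in latencies from your own fio runs instead.

```python
def qd1_sync_iops(plp: bool, dram_ack_us: float, nand_ack_us: float) -> float:
    """IOPS at queue depth 1, where each sync write waits for the previous ACK.

    plp=True:  the drive ACKs once the data is in power-protected DRAM.
    plp=False: the drive ACKs only after the data is programmed to NAND.
    Latencies are in microseconds.
    """
    ack_us = dram_ack_us if plp else nand_ack_us
    return 1_000_000 / ack_us


# Placeholder latencies, not measured values.
print(qd1_sync_iops(plp=True, dram_ack_us=20, nand_ack_us=2000))   # 50000.0
print(qd1_sync_iops(plp=False, dram_ack_us=20, nand_ack_us=2000))  # 500.0
```

The model covers queue depth 1 only, which is the case the sync write benchmarks discussed here focus on.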

The guy was all over the place in his "reasoning", so I am no wiser from his contribution. First of all, in my understanding the FTL really only needs to be up to date with what has been written to the actual NAND, so this would support your point that it is a stress test of the SSD's capabilities and, well, makes it slow. I do not call that "shredding", and I do not see how it bites off TBW any faster than with a PLP drive. And again, flushing the FTL often minimises the amount of data not yet written to NAND, which he then admits would be covered by a filesystem's journal.

But notably, nowhere did he write that only PLP SSDs can satisfy a sync write instantly, purely by writing into DRAM. So this is not the source I hoped for to confirm that.

Some food for thought: what if I have some ancient PLP drive with rather small DRAM and I am actually doing a crazy amount of intermittent sustained writes? And then I have the option of a modern consumer SSD with larger DRAM, and I do not need those writes to be sync at all. But again, not in a homelab, usually.
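The small-cache scenario above comes down to simple arithmetic: during a sustained burst, a write cache (DRAM or SLC) fills at the difference between the incoming rate and the rate at which the drive can drain it to NAND, and after that the drive runs at its drain rate. A sketch under that assumption; all cache sizes and rates below are hypothetical.

```python
import math


def seconds_until_cache_full(cache_mb: float, ingest_mb_s: float, drain_mb_s: float) -> float:
    """Seconds until a write cache fills during a sustained write burst.

    The cache fills at (ingest - drain). If the drive drains to NAND at least
    as fast as data arrives, it never fills (returns infinity). Once full,
    write speed drops to drain_mb_s.
    """
    net_mb_s = ingest_mb_s - drain_mb_s
    if net_mb_s <= 0:
        return math.inf
    return cache_mb / net_mb_s


# Hypothetical: old drive with a 512 MB cache vs newer drive with 2048 MB,
# both receiving 2000 MB/s and draining to NAND at 500 MB/s.
print(f"{seconds_until_cache_full(512, 2000, 500):.2f}")   # 0.34
print(f"{seconds_until_cache_full(2048, 2000, 500):.2f}")  # 1.37
```

With these assumed numbers, both caches are exhausted within seconds of a truly sustained write, at which point the sustained drain rate matters more than the cache size.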

EDIT: What we got into discussing seems to have been discussed in multiple places before:

https://www.reddit.com/r/storage/comments/iop7ef/performance_gain_of_plp_powerlossprotection_drives/

I really wonder what all those tests would have looked like without ZFS, on various "gaming" drives.