Hello all,

I run an LACP bond (802.3ad, layer2 transmit hash policy) over 2 x 100 GbE, carrying several VLANs. I want to use VLAN 230, with an MTU of 9000, for LINSTOR/DRBD. However, we only get around 17 to 18 Gbit/s (about 21 GBytes transferred per 10-second run), no matter whether I use iperf or iperf3, and also with 4 parallel threads.
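
Why parallel threads may not help here: with `bond-xmit-hash-policy layer2` (as set on bond0), the Linux bonding driver picks the egress slave from the MAC addresses only, so all traffic between two hosts leaves on the same 100G port no matter how many iperf threads run. The switch chooses the link for the return direction independently. The Python sketch below models the layer2 and layer3+4 formulas as written in the kernel bonding documentation. It is a simplified model, not the kernel code. The MAC addresses and source ports are made-up placeholders, and treating the two ports as one 32-bit word with the source port in the upper half is an assumption. Note that even a single 100G link should carry far more than the 17 to 18 Gbit/s measured, so the hash policy only explains why the second link stays idle, not the low per-link rate.

```python
"""Simplified model of Linux bonding transmit-hash slave selection.

Formulas follow the kernel bonding documentation; real kernels may differ
in details (byte order, folding steps) between versions.
"""
from dataclasses import dataclass
from ipaddress import IPv4Address

ETH_P_IP = 0x0800  # EtherType ("packet type ID") for IPv4


@dataclass(frozen=True)
class Flow:
    src_mac: bytes
    dst_mac: bytes
    src_ip: IPv4Address
    dst_ip: IPv4Address
    sport: int
    dport: int


def layer2_slave(flow: Flow, n_slaves: int) -> int:
    # hash = source MAC[5] XOR destination MAC[5] XOR packet type ID
    h = flow.src_mac[5] ^ flow.dst_mac[5] ^ ETH_P_IP
    return h % n_slaves


def layer34_slave(flow: Flow, n_slaves: int) -> int:
    # Ports as one 32-bit word (source port in the upper half: an assumption).
    h = (flow.sport << 16) | flow.dport
    h ^= int(flow.src_ip) ^ int(flow.dst_ip)
    h ^= h >> 16
    h ^= h >> 8
    h >>= 1  # newer kernels drop the lowest bit for layer3+4
    return h % n_slaves


def slaves_used(policy, flows, n_slaves: int = 2) -> set:
    """Set of slave indices the given flows would be sent on."""
    return {policy(f, n_slaves) for f in flows}


if __name__ == "__main__":
    # Hypothetical MACs and source ports; IPs and iperf3 port from the post.
    src_mac = bytes.fromhex("020000000001")
    dst_mac = bytes.fromhex("020000000002")
    streams = [
        Flow(src_mac, dst_mac, IPv4Address("192.168.230.76"),
             IPv4Address("192.168.230.72"), sport, 22222)
        for sport in range(50000, 50004)  # 4 parallel streams
    ]
    print("layer2   ->", slaves_used(layer2_slave, streams))
    print("layer3+4 ->", slaves_used(layer34_slave, streams))
```

With layer2 every stream maps to one slave; with layer3+4 the differing source ports can spread streams over both links, although a single TCP connection still never spans two links.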

I adjusted the CPU states as described in this thread:

https://forum.proxmox.com/threads/mellanox-connectx-5-en-100g-running-at-40g.106095/

I updated the Mellanox firmware to the latest available version following this guide:

https://www.thomas-krenn.com/de/wiki/Mellanox_Firmware_Tools_-_Firmware_Upgrade_unter_Linux

I also installed these helpers to use RDMA:

    apt install -y infiniband-diags opensm ibutils rdma-core rdmacm-utils &&
    modprobe ib_umad &&
    modprobe ib_ipoib
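
A side note on these packages: iperf and iperf3 measure ordinary TCP through the kernel IP stack, so RDMA tooling does not change their results. Also, opensm is an InfiniBand subnet manager and ib_ipoib provides IP over InfiniBand; when the ConnectX-5 ports are used as Ethernet interfaces (as in the bond here), RDMA would run as RoCE, which needs neither a subnet manager nor IPoIB. The link layer of each RDMA port is exposed in sysfs as `/sys/class/infiniband/<device>/ports/<port>/link_layer`. The Python sketch below lists it; device names such as mlx5_0 depend on the system, and the `root` parameter exists only so the function can be tried against a fake directory.

```python
"""List RDMA ports and the link layer each one reports (sketch)."""
from __future__ import annotations

from pathlib import Path

SYSFS_RDMA = "/sys/class/infiniband"  # sysfs location of RDMA devices


def _read_attr(path: Path) -> str:
    """Read a sysfs attribute, or return 'unknown' if it is missing."""
    return path.read_text().strip() if path.exists() else "unknown"


def rdma_ports(root: str = SYSFS_RDMA) -> list[tuple[str, str, str, str]]:
    """Return (device, port, link_layer, state) for every port under root."""
    found = []
    base = Path(root)
    if not base.is_dir():
        return found  # no RDMA devices registered
    for dev in sorted(base.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        for port in sorted(ports_dir.iterdir(), key=lambda p: int(p.name)):
            found.append((
                dev.name,
                port.name,
                _read_attr(port / "link_layer"),  # "InfiniBand" or "Ethernet"
                _read_attr(port / "state"),       # e.g. "4: ACTIVE"
            ))
    return found


if __name__ == "__main__":
    ports = rdma_ports()
    if not ports:
        print("no RDMA devices found under", SYSFS_RDMA)
    for dev, port, link_layer, state in ports:
        # "Ethernet" here means RoCE: no subnet manager, no IPoIB involved
        print(f"{dev} port {port}: link_layer={link_layer}, state={state}")
```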

On the switch side, the ports are of course also configured as an LACP bond, with MTU 9000 and PFC enabled for lossless communication.

    ethtool bond0
    Settings for bond0:
            Supported ports: [ ]
            Supported link modes: Not reported
            Supported pause frame use: No
            Supports auto-negotiation: No
            Supported FEC modes: Not reported
            Advertised link modes: Not reported
            Advertised pause frame use: No
            Advertised auto-negotiation: No
            Advertised FEC modes: Not reported
            Speed: 200000Mb/s
            Duplex: Full
            Auto-negotiation: off
            Port: Other
            PHYAD: 0
            Transceiver: internal
            Link detected: yes

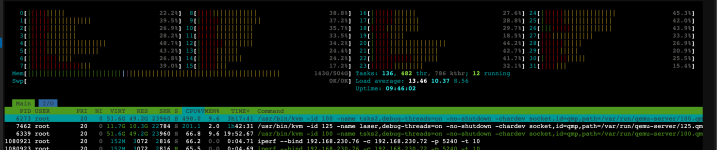

Attached is a screenshot of the system load; it shows that no single thread is maxed out and limiting the throughput.

    root@pve2:~# iperf --bind 192.168.230.76 -c 192.168.230.72 -p 5240 -t 10
    ------------------------------------------------------------
    Client connecting to 192.168.230.72, TCP port 5240
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.230.76 port 42397 connected with 192.168.230.72 port 5240 (icwnd/mss/irtt=87/8948/183)
    [ ID] Interval         Transfer     Bandwidth
    [ 1] 0.0000-10.0140 sec  21.0 GBytes  18.0 Gbits/sec
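
Two numbers in this output can be cross-checked. The `mss=8948` is what a 9000-byte MTU yields after the 20-byte IPv4 header, the 20-byte TCP header and 12 bytes of TCP timestamp option (assumed enabled, the usual Linux default), so both hosts do advertise jumbo frames; this alone does not prove that every switch hop forwards them. And iperf counts the transfer in binary gigabytes (2^30 bytes) while the rate is in decimal Gbit/s, so 21.0 GBytes in 10.014 s works out to about 18 Gbit/s. A small Python sketch of both calculations:

```python
"""Cross-check the iperf numbers from the post (MSS and throughput units)."""

IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12  # 10-byte timestamp option padded to 12 (assumed enabled)
GIB = 2**30          # iperf prints transfer sizes in binary units


def expected_mss(mtu: int, timestamps: bool = True) -> int:
    """TCP payload bytes per segment for a given IPv4 MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)


def gbit_per_s(gbytes: float, seconds: float) -> float:
    """Convert an iperf 'GBytes' transfer over `seconds` into decimal Gbit/s."""
    return gbytes * GIB * 8 / seconds / 1e9


if __name__ == "__main__":
    print("MSS for MTU 9000:", expected_mss(9000))
    print("iperf rate: %.1f Gbit/s" % gbit_per_s(21.0, 10.014))
```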

And here is my network configuration:

    iface enp6s0f1 inet manual

    iface enp5s0 inet manual

    auto eno1
    iface eno1 inet manual
    #Quorum

    auto eno2
    iface eno2 inet manual
    #Quorum

    iface enxbe3af2b6059f inet manual

    auto bond0
    iface bond0 inet manual
            bond-slaves enp1s0f0np0 enp1s0f1np1
            bond-miimon 100
            bond-mode 802.3ad
            bond-xmit-hash-policy layer2
            mtu 9000

    auto bond1
    iface bond1 inet static
            address 10.10.10.76/24
            bond-slaves eno1 eno2
            bond-miimon 100
            bond-mode 802.3ad
            bond-xmit-hash-policy layer2+3
    #quorum

    auto vmbr1
    iface vmbr1 inet manual
            bridge-ports bond0
            bridge-stp off
            bridge-fd 0
            bridge-vlan-aware yes
            bridge-vids 2-4094
            mtu 9000
    #100G_Bond_Bridge_Vlan

    auto vmbr1.160
    iface vmbr1.160 inet manual
            mtu 1500
    #CNC_160

    auto vmbr1.170
    iface vmbr1.170 inet manual
            mtu 1500
    #Video_170

    auto vmbr1.180
    iface vmbr1.180 inet manual
            mtu 1500
    #DMZ_180

    auto vmbr1.199
    iface vmbr1.199 inet manual
            mtu 1500
    #VOIP_199

    auto vmbr1.201
    iface vmbr1.201 inet static
            address 192.168.201.76/24
            gateway 192.168.201.254
            mtu 1500
    #MGMT_201

    auto vmbr1.202
    iface vmbr1.202 inet manual
            mtu 1500
    #WLAN_202

    auto vmbr1.1
    iface vmbr1.1 inet manual
            mtu 1500
    #IINTERN

    auto vmbr1.230
    iface vmbr1.230 inet static
            address 192.168.230.76/24
            mtu 9000
    #CEPH_LINBIT_230
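
To double-check that the jumbo MTU is consistent along the VLAN 230 path (vmbr1.230 -> vmbr1 -> bond0), a small parser for stanzas like the ones above can walk from the VLAN interface to its parents and flag any hop whose MTU is smaller than its child's. This is a simplified sketch: it only understands `iface`, `mtu`, `bridge-ports` and the `<parent>.<vid>` VLAN naming used here, treats a stanza without `mtu` as unknown, and does not inspect the physical bond slaves (their live MTU is visible with `ip link`). The embedded `CONFIG` is an excerpt of the configuration above.

```python
"""Walk the MTU chain of a VLAN interface in an ifupdown-style config (sketch)."""
from __future__ import annotations

CONFIG = """\
auto bond0
iface bond0 inet manual
    bond-slaves enp1s0f0np0 enp1s0f1np1
    mtu 9000
auto vmbr1
iface vmbr1 inet manual
    bridge-ports bond0
    mtu 9000
auto vmbr1.230
iface vmbr1.230 inet static
    address 192.168.230.76/24
    mtu 9000
#CEPH_LINBIT_230
"""


def parse_stanzas(text: str) -> dict[str, dict[str, str]]:
    """Map interface name -> {option: value} for every 'iface' stanza."""
    stanzas: dict[str, dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        words = line.split(maxsplit=1)
        if words[0] == "iface" and len(words) == 2:
            current = stanzas.setdefault(words[1].split()[0], {})
        elif words[0] in ("auto", "allow-hotplug"):
            current = None  # a new stanza keyword ends the previous options
        elif current is not None and len(words) == 2:
            current[words[0]] = words[1]
    return stanzas


def parent_of(name: str, stanzas: dict[str, dict[str, str]]) -> str | None:
    """VLAN 'x.N' -> 'x'; bridge with one port -> that port; otherwise None."""
    if "." in name:
        return name.rsplit(".", 1)[0]
    ports = stanzas.get(name, {}).get("bridge-ports", "").split()
    return ports[0] if len(ports) == 1 else None


def mtu_chain(name: str, stanzas) -> list[tuple[str, int | None]]:
    """List (interface, configured MTU) from `name` up through its parents."""
    chain = []
    while name is not None:
        mtu = stanzas.get(name, {}).get("mtu")
        chain.append((name, int(mtu) if mtu else None))
        name = parent_of(name, stanzas)
    return chain


def mtu_problems(chain) -> list[str]:
    """Report hops where a parent's MTU is smaller than its child's."""
    issues = []
    for (child, c_mtu), (parent, p_mtu) in zip(chain, chain[1:]):
        if c_mtu and p_mtu and p_mtu < c_mtu:
            issues.append(f"{parent} mtu {p_mtu} < {child} mtu {c_mtu}")
    return issues


if __name__ == "__main__":
    chain = mtu_chain("vmbr1.230", parse_stanzas(CONFIG))
    print(" -> ".join(f"{n} ({m})" for n, m in chain))
    print(mtu_problems(chain) or "no MTU mismatch along the chain")
```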

With iperf3 we also see a lot of retransmissions (the Retr column):

    root@pve2:/etc/pve# iperf3 --bind 192.168.230.76 -c 192.168.230.72 -p 22222 -t 10
    Connecting to host 192.168.230.72, port 22222
    [ 5] local 192.168.230.76 port 44521 connected to 192.168.230.72 port 22222
    [ ID] Interval         Transfer     Bitrate         Retr  Cwnd
    [ 5]  0.00-1.00  sec  2.11 GBytes  18.1 Gbits/sec   732  1.08 MBytes
    [ 5]  1.00-2.00  sec  1.99 GBytes  17.1 Gbits/sec   874   516 KBytes
    [ 5]  2.00-3.00  sec  1.99 GBytes  17.1 Gbits/sec   992   577 KBytes
    [ 5]  3.00-4.00  sec  1.93 GBytes  16.6 Gbits/sec   703   481 KBytes
    [ 5]  4.00-5.00  sec  1.86 GBytes  16.0 Gbits/sec   986   524 KBytes
    [ 5]  5.00-6.00  sec  1.99 GBytes  17.2 Gbits/sec   762   533 KBytes
    [ 5]  6.00-7.00  sec  2.01 GBytes  17.3 Gbits/sec   715   516 KBytes
    [ 5]  7.00-8.00  sec  2.04 GBytes  17.5 Gbits/sec  1007   498 KBytes
    [ 5]  8.00-9.00  sec  2.11 GBytes  18.1 Gbits/sec   917   446 KBytes
    [ 5]  9.00-10.00 sec  2.17 GBytes  18.6 Gbits/sec   469   402 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval         Transfer     Bitrate         Retr
    [ 5]  0.00-10.00 sec  20.2 GBytes  17.4 Gbits/sec  8157             sender
    [ 5]  0.00-10.00 sec  20.2 GBytes  17.4 Gbits/sec                   receiver
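
The retransmissions can be put in proportion. The per-second Retr values add up to exactly the 8157 reported for the sender, and with an MSS of 8948 bytes (taken from the iperf run above and assumed to be the same for this iperf3 connection) 20.2 GBytes is roughly 2.4 million segments, so about 0.34 % of segments were retransmitted. A Python sketch that parses the interval lines of this output and does the arithmetic; the regular expression only understands this exact text layout, and the summary lines are deliberately skipped.

```python
"""Sum iperf3 per-interval retransmits and estimate the retransmission ratio."""
import re

GIB = 2**30  # iperf3 prints transfer sizes in binary units

# Interval lines and the sender summary copied from the iperf3 run in the post.
IPERF3_OUTPUT = """\
[ 5]  0.00-1.00  sec  2.11 GBytes  18.1 Gbits/sec   732  1.08 MBytes
[ 5]  1.00-2.00  sec  1.99 GBytes  17.1 Gbits/sec   874   516 KBytes
[ 5]  2.00-3.00  sec  1.99 GBytes  17.1 Gbits/sec   992   577 KBytes
[ 5]  3.00-4.00  sec  1.93 GBytes  16.6 Gbits/sec   703   481 KBytes
[ 5]  4.00-5.00  sec  1.86 GBytes  16.0 Gbits/sec   986   524 KBytes
[ 5]  5.00-6.00  sec  1.99 GBytes  17.2 Gbits/sec   762   533 KBytes
[ 5]  6.00-7.00  sec  2.01 GBytes  17.3 Gbits/sec   715   516 KBytes
[ 5]  7.00-8.00  sec  2.04 GBytes  17.5 Gbits/sec  1007   498 KBytes
[ 5]  8.00-9.00  sec  2.11 GBytes  18.1 Gbits/sec   917   446 KBytes
[ 5]  9.00-10.00 sec  2.17 GBytes  18.6 Gbits/sec   469   402 KBytes
[ 5]  0.00-10.00 sec  20.2 GBytes  17.4 Gbits/sec  8157             sender
"""

# [id] start-end sec <GBytes> GBytes <rate> Gbits/sec <retr> <cwnd> K/MBytes
INTERVAL = re.compile(
    r"^\[\s*\d+\]\s+([\d.]+)-([\d.]+)\s+sec\s+([\d.]+)\s+GBytes\s+"
    r"([\d.]+)\s+Gbits/sec\s+(\d+)\s+[\d.]+\s+[KM]Bytes\s*$"
)


def parse_intervals(text: str) -> list:
    """Return (transferred GBytes, retransmits) for each interval line."""
    rows = []
    for line in text.splitlines():
        m = INTERVAL.match(line.strip())
        if m:  # summary lines have no Cwnd column and do not match
            rows.append((float(m.group(3)), int(m.group(5))))
    return rows


def retransmit_ratio(total_gbytes: float, retransmits: int, mss: int) -> float:
    """Approximate fraction of TCP segments that were retransmitted."""
    segments = total_gbytes * GIB / mss
    return retransmits / segments


if __name__ == "__main__":
    rows = parse_intervals(IPERF3_OUTPUT)
    total_gb = sum(g for g, _ in rows)
    total_retr = sum(r for _, r in rows)
    print("intervals:", len(rows), "retransmits:", total_retr)
    ratio = retransmit_ratio(total_gb, total_retr, 8948)  # MSS assumed, see above
    print("retransmit ratio: %.2f %%" % (100 * ratio))
```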

Funnily enough, on the same Mellanox ConnectX-5 card, but with only 2 x 25 GbE, I hardly see any retransmissions.
