100% Swap Usage

- Thread starter mikiz

Hi,

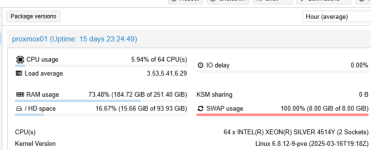

I am facing this 100% swap usage problem. Yesterday everything was fine. I migrated 2 VMs from VMware to Proxmox: one of them is an IIS server and the other one is a SQL Server. What should I do? What does this 100% usage normally cause?

Hi,

Swap is more than just an escape for low memory: https://chrisdown.name/2018/01/02/in-defence-of-swap.html

But given that the host has ~185G of memory, you could consider disabling swap: that is a lot of memory, and if you do run out of it, those 8G of swap are most likely not enough anyway.

Code:

swapoff -a

and then comment out the swap line in /etc/fstab.

Or use zram as swap: https://pve.proxmox.com/wiki/Zram#Alternative_Setup_using_zram-tools
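The fstab step can be previewed before touching the real file. This is a minimal sketch, assuming a standard /etc/fstab layout where the third whitespace-separated field is the filesystem type; the function name `comment_out_swap` is hypothetical, and it only prints the modified content instead of editing anything in place.

```shell
#!/bin/sh
# Hypothetical helper: print an fstab with every active swap entry commented out.
# Reads the file given as $1 (default: /etc/fstab) and writes to stdout only.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    # Leave existing comment lines alone; prefix '#' to every other line
    # whose third field is exactly "swap".
    sed -E '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}
```

Something like `comment_out_swap /etc/fstab | diff /etc/fstab -` shows what would change. Note that `swapoff -a` itself needs root and has to move all swapped-out pages back into RAM, so it can take a while when the swap is full.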

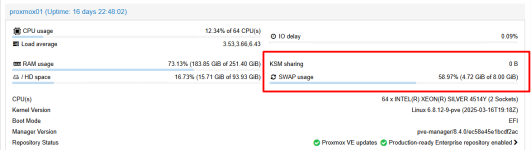

You talked about memory, but as I said, 2 days ago everything was fine. The problem occurred after I migrated those 2 VMs. I noticed that all my other Windows VMs use CPU type x64, but those 2 VMs use the default CPU type (kvm64). I stopped one of the VMs using the default (kvm64) CPU and swap usage decreased to 60%. I am very confused about this. Why did the CPU type change decrease the swap usage? Isn't that about memory?

Thank you

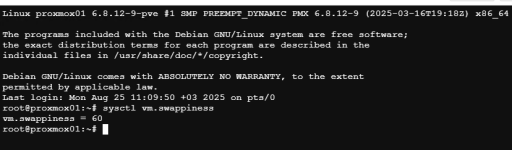

The output is 60. What does it mean?

That's explained in detail in the already linked article: https://chrisdown.name/2018/01/02/in-defence-of-swap.html

The relevant part for you is this:

What should my swappiness setting be?

First, it's important to understand what vm.swappiness does. vm.swappiness is a sysctl that biases memory reclaim either towards reclamation of anonymous pages, or towards file pages. It does this using two different attributes: file_prio (our willingness to reclaim file pages) and anon_prio (our willingness to reclaim anonymous pages). vm.swappiness plays into this, as it becomes the default value for anon_prio, and it also is subtracted from the default value of 200 for file_prio, which means for a value of vm.swappiness = 50, the outcome is that anon_prio is 50, and file_prio is 150 (the exact numbers don't matter as much as their relative weight compared to the other).

This means that, in general, vm.swappiness is simply a ratio of how costly reclaiming and refaulting anonymous memory is compared to file memory for your hardware and workload. The lower the value, the more you tell the kernel that infrequently accessed anonymous pages are expensive to swap out and in on your hardware. The higher the value, the more you tell the kernel that the cost of swapping anonymous pages and file pages is similar on your hardware. The memory management subsystem will still try to mostly decide whether it swaps file or anonymous pages based on how hot the memory is, but swappiness tips the cost calculation either more towards swapping or more towards dropping filesystem caches when it could go either way. On SSDs these are basically as expensive as each other, so setting vm.swappiness = 100 (full equality) may work well. On spinning disks, swapping may be significantly more expensive since swapping in general requires random reads, so you may want to bias more towards a lower value.

The reality is that most people don't really have a feeling about which their hardware demands, so it's non-trivial to tune this value based on instinct alone – this is something that you need to test using different values. You can also spend time evaluating the memory composition of your system and core applications and their behaviour under mild memory reclamation.

When talking about vm.swappiness, an extremely important change to consider from recent(ish) times is this change to vmscan by Satoru Moriya in 2012, which changes the way that vm.swappiness = 0 is handled quite significantly.

Essentially, the patch makes it so that we are extremely biased against scanning (and thus reclaiming) any anonymous pages at all with vm.swappiness = 0, unless we are already encountering severe memory contention. As mentioned previously in this post, that's generally not what you want, since this prevents equality of reclamation prior to extreme memory pressure occurring, which may actually lead to this extreme memory pressure in the first place. vm.swappiness = 1 is the lowest you can go without invoking the special casing for anonymous page scanning implemented in that patch.

The kernel default here is vm.swappiness = 60. This value is generally not too bad for most workloads, but it's hard to have a general default that suits all workloads. As such, a valuable extension to the tuning mentioned in the "how much swap do I need" section above would be to test these systems with differing values for vm.swappiness, and monitor your application and system metrics under heavy (memory) load. Some time in the near future, once we have a decent implementation of refault detection in the kernel, you'll also be able to determine this somewhat workload-agnostically by looking at cgroup v2's page refaulting metrics.
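The weighting described in the quoted passage can be written out as simple arithmetic. This is only a sketch of the simplified model from the article (anon_prio = swappiness, file_prio = 200 - swappiness), not of what any particular kernel version does internally; the function name is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print the relative reclaim weights from the article's
# simplified model. $1 is the vm.swappiness value.
swappiness_weights() {
    s="$1"
    anon_prio="$s"              # willingness to reclaim anonymous pages
    file_prio=$((200 - s))      # willingness to reclaim file pages
    echo "anon_prio=$anon_prio file_prio=$file_prio"
}

swappiness_weights 50   # the article's example: anon_prio=50 file_prio=150
swappiness_weights 60   # kernel default:        anon_prio=60 file_prio=140
swappiness_weights 10   # anon_prio=10 file_prio=190
```

What matters is the ratio between the two numbers, not the numbers themselves: at 60 the kernel leans moderately towards dropping file cache, at 10 it leans very strongly that way.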

If you have no idea what to do with this, you can think of it like this: a lower swappiness value leads to less swap usage, a higher value to more. The default value of 60 works well enough for most scenarios (including desktops), but on servers with enough memory a value of 10 (as given in the Proxmox VE documentation) is better suited. Such servers still profit from swap, for the reasons explained in the non-quoted part of Chris Down's blog post, but they need it less than a machine with less memory.
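Checking and changing the value could look roughly like this. `sysctl` and /proc/sys/vm/swappiness are standard on Linux; the helper names and the file name /etc/sysctl.d/99-swappiness.conf are assumptions for illustration, and the commands that actually change settings are left as comments because they need root.

```shell
#!/bin/sh
# Read the swappiness value from the given file
# (default: the live kernel value in /proc).
current_swappiness() {
    cat "${1:-/proc/sys/vm/swappiness}"
}

# Build the line for a sysctl.d drop-in file; rejects anything
# that is not a non-negative integer.
swappiness_conf_line() {
    case "$1" in
        ''|*[!0-9]*)
            echo "swappiness must be a non-negative integer" >&2
            return 1
            ;;
    esac
    echo "vm.swappiness = $1"
}

# As root, apply immediately and persist across reboots
# (the drop-in file name is a hypothetical choice):
#   sysctl -w vm.swappiness=10
#   swappiness_conf_line 10 > /etc/sysctl.d/99-swappiness.conf
```

Lowering the value does not move pages that are already swapped out back into RAM, so the usage shown in the GUI will not drop right away.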

If you use a compressed RAM disk as swap (see https://pve.proxmox.com/wiki/Zram and https://forum.proxmox.com/threads/zram-why-bother.151712/ ), you don't actually need to change the value of vm.swappiness, but it won't hurt either. The idea is that most of the time the zram device will be used as swap, and the actual swap disk (which is slower) will only be used if there is no other way. That is quite unlikely to happen in your case, though.
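The kernel fills swap areas in priority order, highest first, so this only works as described if the zram device has a higher priority than the disk swap. A small sketch for checking that, assuming the usual /proc/swaps column layout (Filename, Type, Size, Used, Priority, with sizes in KiB); the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list active swap areas, highest priority first.
# Reads the file given as $1 (default: /proc/swaps).
swaps_by_priority() {
    # Skip the header line, sort numerically by the 5th column (Priority)
    # in descending order, then print one summary line per swap area.
    tail -n +2 "${1:-/proc/swaps}" | sort -k5,5nr |
        awk '{ printf "%s priority=%s used=%s/%s KiB\n", $1, $5, $4, $3 }'
}
```

If the zram device shows up first in the output, the disk swap is only touched once zram is full.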

Another thing: which filesystem are you using? Swap files on ZFS are known to be problematic (see https://pve.proxmox.com/pve-docs/pve-admin-guide.html#zfs_swap ), but a swap partition or zramswap combined with ZFS is fine.