Hello all

I know this may be unrelated, but I noticed it after upgrading to PVE 7.0.

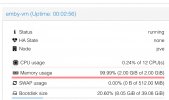

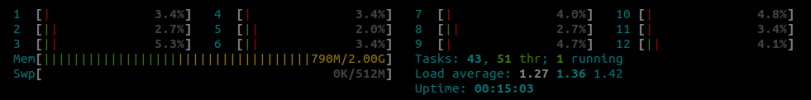

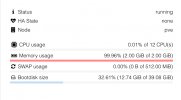

I have a CT that is used only to run Emby Server. It has 2 GB of RAM allocated. I've never seen its memory use go above 1.2 GB, and I tested it to its limits with multiple streams etc. before concluding that 2 GB was a good number.

Since upgrading to 7.0, I see 100% memory usage with a single stream, which is very odd. I tried restoring a 3-week-old backup and saw no difference.

So I'm wondering whether it's related to the 7.0 upgrade, e.g. some change in how containers are managed?

Has anybody else seen something similar?

Thx

PS: Any clues on how to troubleshoot/fix it?