It's based on NFS on a Synology NAS.

That's not a good choice for a local cache... I would recommend using local, fast NVMe SSDs, ideally with some redundancy for error correction.

Proxmox Backup Server 4.0 released!

- Thread starter t.lamprecht


Thanks again Chris, I will take a look into this!

Allow me a follow-up question regarding the local cache: What kind of data does the cache hold, and how long does it cache these files? I see a significant amount of data still stored in that cache. Is there any documentation on how the cache is used, or what it is used for, in detail?

Thanks again for your help.


The cache stores snapshot metadata such as index files, owner files, etc., as well as backup data chunks. Chunks are stored in a least-recently-used (LRU) cache, evicting older ones if no more slots are available. The cache will use as much storage space as is available, hence the recommendation to use a dedicated disk, partition or dataset with a quota. Some more considerations can be found in the docs: https://pbs.proxmox.com/docs/storage.html#datastores-with-s3-backend
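The eviction idea described above can be pictured with a small shell sketch. It is purely illustrative: it assumes a directory of chunk files in which recency is approximated by each file's modification time, which is not how PBS implements its cache internally, and `lru_touch`/`lru_evict` are hypothetical names.

```shell
# Illustrative only: a directory-based LRU cache with a fixed number of slots.
# Recency is approximated by file mtime; this is NOT the PBS implementation.

# lru_touch DIR NAME: mark entry NAME as just used (creating it if missing)
lru_touch() {
    touch -- "$1/$2"
}

# lru_evict DIR SLOTS: delete the least recently used entries until at most
# SLOTS files remain in DIR
lru_evict() {
    local dir=$1 slots=$2 count excess
    count=$(find "$dir" -maxdepth 1 -type f | wc -l)
    excess=$(( count > slots ? count - slots : 0 ))
    (( excess > 0 )) || return 0
    # list "mtime path" pairs, oldest first, and remove the first $excess paths
    find "$dir" -maxdepth 1 -type f -printf '%T@ %p\n' \
        | LC_ALL=C sort -n \
        | head -n "$excess" \
        | cut -d' ' -f2- \
        | while IFS= read -r f; do rm -f -- "$f"; done
}
```

Here `SLOTS` plays the role of the number of cache slots: an entry that is used again via `lru_touch` moves to the back of the eviction order, so frequently reused chunks survive while stale ones are dropped first.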

Thanks again!

The larger backups (600G) are still failing after updating to 4.0.15.

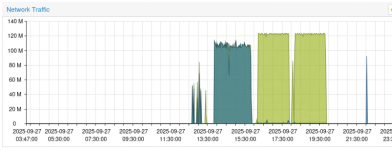

It's interesting to note the network graph of the backup server compared to smaller backups: only incoming traffic, none going out to the S3-based storage.

Are there any logs I can view to get a clue? I checked the journal and nothing stands out, unlike with the previous VPN network issue (we use WireGuard).

Edit: I wonder whether leftover files from the failed jobs are causing issues on the cache disk or in the S3 storage.

Edit 2: I just backed up a 1.2 TB VM and it worked fine. This leads me to believe that either some files related to the failing VMID are stuck in S3 or in the cache, or something on the VM is corrupt; but it only stopped working after the VPN network issue with the backup proxy.
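On the PBS side, each backup run has its own task log, which usually says more than the journal. As a minimal sketch, assuming the message wording of the 4.0.x task log excerpt posted later in this thread, the following condenses such a log into chunk counts plus any failed requests. The function name is hypothetical, and other PBS versions may word their messages differently.

```shell
# summarize_task_log [FILE...]: read a PBS backup task log (from FILE or stdin)
# and print any error lines, followed by counts of uploaded, skipped and
# cached chunks. The matched phrases are taken from the 4.0.x log excerpt.
summarize_task_log() {
    awk '
        /Upload of new chunk/                  { up++ }
        /Skip upload of already encountered/   { skip++ }
        /Caching of chunk/                     { cache++ }
        /Bad Request|TASK ERROR|backup failed/ { print "error: " $0 }
        END { printf "uploaded=%d skipped=%d cached=%d\n", up, skip, cache }
    ' "$@"
}
```

For example, save the task log to a file and run `summarize_task_log backup-task.log` (hypothetical file name); a backup that never uploads anything new would show up as `uploaded=0`.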



Hi!

What exact error message do you get for the failed backups? Anything of interest in the PVE task log or the corresponding PBS backup task log?

We discovered an issue leading to possible deadlocks. A fix is in progress; please subscribe to the issue [0] for status updates.

[0] https://bugzilla.proxmox.com/show_bug.cgi?id=6750

Code:

```
INFO: 98% (818.4 GiB of 835.1 GiB) in 1h 38m 34s, read: 149.0 MiB/s, write: 147.0 MiB/s
INFO: 99% (826.8 GiB of 835.1 GiB) in 1h 39m 32s, read: 147.5 MiB/s, write: 145.1 MiB/s
INFO: 100% (835.1 GiB of 835.1 GiB) in 1h 40m 33s, read: 139.6 MiB/s, write: 136.7 MiB/s
ERROR: backup close image failed: command error: broken pipe
INFO: aborting backup job
INFO: resuming VM again
ERROR: Backup of VM 108 failed - backup close image failed: command error: broken pipe
INFO: Failed at 2025-09-28 04:43:41
INFO: Backup job finished with errors
TASK ERROR: job errors
```

I do have a dirty bitmap on the large volume due to the backups to the old backup server, which I have since removed, but I assume the dirty bitmap doesn't go away because the backup for that volume never completes?

PBS log looks interesting:

Code:

```
2025-09-27T17:10:38+02:00: starting new backup on datastore 'hetzners3ds' from ::ffff:192.168.122.15: "vm/108/2025-09-27T15:10:37Z"
2025-09-27T17:10:38+02:00: GET /previous: 400 Bad Request: no valid previous backup
2025-09-27T17:10:38+02:00: created new fixed index 1 ("vm/108/2025-09-27T15:10:37Z/drive-scsi0.img.fidx")
2025-09-27T17:10:38+02:00: Skip upload of already encountered chunk bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8
2025-09-27T17:10:38+02:00: created new fixed index 2 ("vm/108/2025-09-27T15:10:37Z/drive-scsi1.img.fidx")
2025-09-27T17:10:38+02:00: Skip upload of already encountered chunk bb9f8df61474d25e71fa00722318cd387396ca1736605e1248821cc0de3d3af8
2025-09-27T17:10:39+02:00: Uploaded blob to object store: .cnt/vm/108/2025-09-27T15:10:37Z/qemu-server.conf.blob
2025-09-27T17:10:39+02:00: add blob "/mnt/datastore/s3cache/vm/108/2025-09-27T15:10:37Z/qemu-server.conf.blob" (1081 bytes, comp: 1081)
2025-09-27T17:10:39+02:00: Upload of new chunk 7cea0197776e28a0558513e967af200d4c6b073abf57dc702e37967b2f603e25
2025-09-27T17:10:39+02:00: Skip upload of already encountered chunk 1061b7ee267fabb1e4e61d2d752c4ec92c1c6144c34a95b3dae9df742b4fffdd
2025-09-27T17:10:39+02:00: Caching of chunk 7cea0197776e28a0558513e967af200d4c6b073abf57dc702e37967b2f603e25
2025-09-27T17:10:39+02:00: Skip upload of already encountered chunk 59aed2a3f720cfb9d58fae695d52cb8a6512a74e9bfbe7158cdd88718160bc9c
2025-09-27T17:10:39+02:00: Skip upload of already encountered chunk 366ce253154ab63930d9787323347ed7b92b3361c966bd1ac93f39043e3d4941
2025-09-27T17:10:39+02:00: Skip upload of already encountered chunk fe2ff82c2a214a6d28e70e716c1f297a52361d6b47abe285a78c5b054fb958d8
removed similar entries
2025-09-27T17:10:42+02:00: Caching of chunk 28e2eeae5799e1d3f610f0c8ed7bd216a98fd228a7a97da1b7e5f1efe2f70a2d
2025-09-27T17:10:42+02:00: Upload of new chunk c226a367c733d50411847e9b29370168b958300cac5ddf2528edc2a34c13d77b
2025-09-27T17:10:42+02:00: Upload of new chunk 9d8a5a0b10feff4a26c02ce9e56cb0c704d71805a61849dd4e45607dbb3ed744
2025-09-27T17:10:42+02:00: Caching of chunk 12b7b3157d0c8b292e012a7027a62a0b6807fc75b8f5525044d92adb5c08463e
2025-09-27T17:10:42+02:00: Caching of chunk ba5b40fa07c88e152e2e24cddfa3dfc0a191c48dcf4e858d8ae6daded5529f53
removed similar entries
2025-09-27T18:52:45+02:00: Skip upload of already encountered chunk 3bad1bf6e5b57cb8bba3eb30d0ff1418b1f2294322648e8a16c31d5965c8607d
2025-09-27T18:52:45+02:00: Upload of new chunk 19a596026fc5d92de48a814285b6114c85ffa8839e5302bfb29b3df5c2cb4044
2025-09-27T18:52:45+02:00: Upload of new chunk b743eb6750f175fd852343c4bc9bfb375e19c73c73027ed8f2bda9b32b0e1f39
2025-09-27T18:52:45+02:00: Caching of chunk 19a596026fc5d92de48a814285b6114c85ffa8839e5302bfb29b3df5c2cb4044
2025-09-27T18:52:45+02:00: POST /fixed_close: 400 Bad Request: Atomic rename file "/mnt/datastore/s3cache/vm/108/2025-09-27T15:10:37Z/drive-scsi1.img.fidx" failed - No such file or directory (os error 2)
2025-09-27T18:52:45+02:00: Upload of new chunk 6096a2f7554ae3ebfbf2766f2f939496e79a4f034ea00613f97af7021873f77b
2025-09-27T18:52:45+02:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection
2025-09-27T18:52:45+02:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection
2025-09-27T18:52:45+02:00: POST /fixed_chunk: 400 Bad Request: error reading a body from connection
2025-09-27T18:52:45+02:00: backup failed: connection error
2025-09-27T18:52:45+02:00: removing failed backup
2025-09-27T18:52:45+02:00: removing backup snapshot "/mnt/datastore/s3cache/vm/108/2025-09-27T15:10:37Z"
2025-09-27T18:52:45+02:00: TASK ERROR: connection error: bytes remaining on stream
```

Bug report comment by c.ebner, 2025-10-09 14:08:29 CEST:

Several more patches to fix possible deadlock issues have been applied and packaged with proxmox-backup-server 4.0.16-1, available in the pbs-test repository at the time of writing.
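To check whether a PBS host already runs a build that contains these patches, here is a small sketch comparing a package version against 4.0.16-1, the version named above. The function name is hypothetical, and `sort -V` only approximates Debian's version ordering; on the host itself, `dpkg --compare-versions "$v" ge 4.0.16-1` is the authoritative check.

```shell
# has_deadlock_fixes VERSION: succeed if VERSION is at least 4.0.16-1,
# the proxmox-backup-server build the deadlock patches were packaged with.
has_deadlock_fixes() {
    local fixed=4.0.16-1
    # the smaller of the two versions sorts first; if that is the fixed
    # version, VERSION is equal to or newer than it
    [[ "$(printf '%s\n' "$fixed" "$1" | sort -V | head -n 1)" == "$fixed" ]]
}

# On a PBS host, query the installed package version and check it:
#   v=$(dpkg-query -W -f='${Version}' proxmox-backup-server)
#   has_deadlock_fixes "$v" && echo "fixes included" || echo "update needed"
```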

I can confirm after updating that this backup job is now working, thanks for the patching!

With the latest version, is it possible to see how much space each VM's backup occupies?

This is not possible, since the data is split into a lot of small files (chunks), which are referenced by every backup snapshot they are part of. So if one chunk is in backup A of VM 101 and in backup B of VM 202, it still occupies the space only once. How should PBS decide which backup uses how much space? Such an estimation would be misleading at best, or plain wrong at worst.

Read the technical overview to get an idea of how everything fits together:

https://pbs.proxmox.com/docs/technical-overview.html
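The attribution problem can be made concrete with a small sketch. It assumes that the chunk digests referenced by each snapshot have already been dumped to a text file, one digest per line (PBS itself keeps them in binary .fidx/.didx index files; extracting them is not shown here). The function name and file layout are hypothetical.

```shell
# unique_vs_shared A B: A and B list the chunk digests referenced by two
# snapshots (one digest per line). Prints how many chunks only A uses,
# how many only B uses, and how many both share.
unique_vs_shared() {
    local a b
    a=$(mktemp) && b=$(mktemp) || return 1
    # comm needs both inputs sorted with the same collation
    LC_ALL=C sort -u -- "$1" > "$a"
    LC_ALL=C sort -u -- "$2" > "$b"
    echo "only_a=$(LC_ALL=C comm -23 "$a" "$b" | wc -l)"
    echo "only_b=$(LC_ALL=C comm -13 "$a" "$b" | wc -l)"
    echo "shared=$(LC_ALL=C comm -12 "$a" "$b" | wc -l)"
    rm -f -- "$a" "$b"
}
```

Chunks counted under `shared` occupy disk space only once, yet belong to both snapshots, so any per-VM figure has to either count them twice or split them arbitrarily.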


Hi,

The relevant bug report is still open: https://bugzilla.proxmox.com/show_bug.cgi?id=4506

See also: https://bugzilla.proxmox.com/show_bug.cgi?id=2859#c2

It also references an older thread where @dcsapak gave some insights into what makes this problem non-trivial:

Hi,

Is there a way to know how much real space a backup occupies on a datastore?

Right now I can only see the full disk size of the VM for each backup, even though backups are incremental and, thanks to your nice work, don't take as much space as a full backup would... but not knowing how much real space each one takes makes this feature a problem if the datastore is running out of space and a manual decision has to be made.

Thanks a lot!


- Gianks

- backup size disk space

- Replies: 18

- Forum: Proxmox Backup: Installation and configuration

That being said, there are some third-party tools for an estimation (but they still suffer from the root problem of one chunk being referenced by snapshots of different VMs or LXCs):

I've tweaked the code somewhat to try to ascertain the sizes of the CTs too, using xxd as in gerko's original example. I'm not sure I've got the logic quite right for CTs when calculating the chunk sizes from the .didx files, though, so perhaps someone would like to try this out and let me know whether I'm getting the right data from the .didx files?

Code:

```shell
#!/bin/bash

datastore=/mnt/backup
id=$1

# check to see if it's a VM or a CT
if [[ -d "$datastore/ct/$id" ]]; then
    type=ct
elif [[ -d "$datastore/vm/$id" ]]; then
    type=vm
else
    echo "cannot find VM/CT" >&2
    exit 1
fi
# ...
```

Hi, I have set up PBS 4 using the S3 tech preview and I would like to propose some improvements. It would be nice if the S3 integration supported IAM role management instead of access_key & secret_key. Moreover, it would also be nice if groups could be implemented, as Proxmox VE already uses them (a real gift for users managing  ).

These are just little things, but I think they would improve the product for our company.

Hi,

Isn't IAM role management AWS-specific? We're trying to remain provider-agnostic, so I don't think that is something which will be pursued.

What groups are you referring to here?

Hi Chris,

It's AWS-specific? I'm not sure I understand, because S3 is an integrated part of AWS, and "amazonaws.com" is even specified... You are already using AWS user features.

I am referring to these groups (see screenshot) in Proxmox VE. It would be great to have the same in PBS and then manage permissions per group instead of user by user.

No, you can use it with a lot of other S3-compatible object store providers and are in no way limited to using AWS.

This has been requested before; see and add your use case to https://bugzilla.proxmox.com/show_bug.cgi?id=5867

Hello again, I would like to know something about encryption on PBS. I know I can enable encryption for my storage in PVE, and then it will encrypt my backups. How will PBS use my encryption key? How do I tell it to use the key if I need to restore? And second, if I already have previous backups stored, will enabling encryption apply to them, or only to the next backups?

Thank you for your time

Encryption on the storage and encryption in PBS are completely different things. For example, on the storage level (like ZFS) you need support from the storage itself (ZFS encryption or Linux dm-crypt), while encrypted backups to PBS don't need a specific filesystem to work.

See also: https://forum.proxmox.com/threads/proxmox-deduplication.140304/post-799715

The key for PBS backups is stored on the Proxmox VE server, so you will need to keep a copy somewhere else to be able to restore your backups. For that reason, it's a good idea to back up everything in /etc/pve outside of PBS.
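As far as I know, on a default setup PVE stores the key of a PBS storage as `/etc/pve/priv/storage/<storage-id>.enc`. A minimal sketch for copying those key files to a location outside PBS, assuming that path; the function name and destination are hypothetical, and copying all of /etc/pve works just as well.

```shell
# backup_pbs_keys DEST [SRC]: copy every *.enc key file from SRC
# (assumed default: /etc/pve/priv/storage) into DEST with owner-only permissions.
backup_pbs_keys() {
    local dest=$1 src=${2:-/etc/pve/priv/storage} found=0 f
    [[ -n "$dest" ]] || { echo "usage: backup_pbs_keys DEST [SRC]" >&2; return 2; }
    { mkdir -p -- "$dest" && chmod 700 -- "$dest"; } || return 1
    for f in "$src"/*.enc; do
        [[ -e "$f" ]] || continue   # glob matched nothing
        install -m 600 -- "$f" "$dest/" || return 1
        found=$((found + 1))
    done
    echo "copied $found key file(s) to $dest"
    (( found > 0 ))   # fail if no key was found
}
```

For example, `backup_pbs_keys /media/usb/pve-keys` (hypothetical mount point). Keep that copy somewhere safe and offline: anyone holding the key can decrypt the backups.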
