I have a three-node cluster running PVE 8.4, and things have been working well.

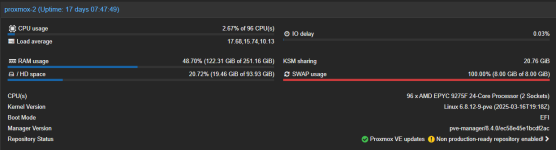
But two days ago, I noticed in `dmesg` that the OOM killer had killed one of my VMs:

```text
Sep 02 08:57:28 proxmox-2 kernel: pvedaemon worke invoked oom-killer: gfp_mask=0x140cca(GFP_HIGHUSER_MOVABLE|__GFP_COMP), order=0, oom_score_adj=0
Sep 02 08:57:28 proxmox-2 kernel: CPU: 91 PID: 196197 Comm: pvedaemon worke Tainted: P O 6.8.12-9-pve #1
Sep 02 08:57:28 proxmox-2 kernel: Hardware name: Dell Inc. PowerEdge R6725/0KRFPX, BIOS 1.1.3 02/25/2025
Sep 02 08:57:28 proxmox-2 kernel: Call Trace:
Sep 02 08:57:28 proxmox-2 kernel: <TASK>
Sep 02 08:57:28 proxmox-2 kernel: dump_stack_lvl+0x76/0xa0
Sep 02 08:57:28 proxmox-2 kernel: dump_stack+0x10/0x20
Sep 02 08:57:28 proxmox-2 kernel: dump_header+0x47/0x1f0
Sep 02 08:57:28 proxmox-2 kernel: oom_kill_process+0x110/0x240
Sep 02 08:57:28 proxmox-2 kernel: out_of_memory+0x26e/0x560
Sep 02 08:57:28 proxmox-2 kernel: __alloc_pages+0x10ce/0x1320
Sep 02 08:57:28 proxmox-2 kernel: alloc_pages_mpol+0x91/0x1f0
Sep 02 08:57:28 proxmox-2 kernel: alloc_pages+0x54/0xb0
Sep 02 08:57:28 proxmox-2 kernel: folio_alloc+0x15/0x40
Sep 02 08:57:28 proxmox-2 kernel: filemap_alloc_folio+0xfd/0x110
Sep 02 08:57:28 proxmox-2 kernel: __filemap_get_folio+0x195/0x2d0
Sep 02 08:57:28 proxmox-2 kernel: filemap_fault+0x5d0/0xc10
Sep 02 08:57:28 proxmox-2 kernel: __do_fault+0x3a/0x190
Sep 02 08:57:28 proxmox-2 kernel: do_fault+0x296/0x4f0
Sep 02 08:57:28 proxmox-2 kernel: __handle_mm_fault+0x894/0xf70
Sep 02 08:57:28 proxmox-2 kernel: handle_mm_fault+0x18d/0x380
Sep 02 08:57:28 proxmox-2 kernel: do_user_addr_fault+0x169/0x660
Sep 02 08:57:29 proxmox-2 kernel: exc_page_fault+0x83/0x1b0
Sep 02 08:57:29 proxmox-2 kernel: asm_exc_page_fault+0x27/0x30
Sep 02 08:57:29 proxmox-2 kernel: RIP: 0033:0x628c76483374
Sep 02 08:57:29 proxmox-2 kernel: Code: Unable to access opcode bytes at 0x628c7648334a.
Sep 02 08:57:29 proxmox-2 kernel: RSP: 002b:00007ffdc7820430 EFLAGS: 00010297
Sep 02 08:57:29 proxmox-2 kernel: RAX: 0000000000000002 RBX: 0000628cb07c82a0 RCX: 0000000000000000
Sep 02 08:57:29 proxmox-2 kernel: RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000628cb07c82a0
Sep 02 08:57:29 proxmox-2 kernel: RBP: 0000628cb8eb85c8 R08: 0000000000000000 R09: 0000628cb8e9da68
Sep 02 08:57:29 proxmox-2 kernel: R10: 0000628cb2d4c150 R11: 00007ffdc7820950 R12: 0000628c766a38e0
Sep 02 08:57:29 proxmox-2 kernel: R13: 0000628cb07c83e0 R14: 0000000000000052 R15: 0000628c76528630
Sep 02 08:57:29 proxmox-2 kernel: </TASK>
Sep 02 08:57:29 proxmox-2 kernel: Mem-Info:
Sep 02 08:57:29 proxmox-2 kernel: active_anon:20052567 inactive_anon:44204516 isolated_anon:0
active_file:0 inactive_file:1773 isolated_file:0
unevictable:52120 dirty:0 writeback:0
slab_reclaimable:124971 slab_unreclaimable:387891
mapped:25830 shmem:23126 pagetables:143465
sec_pagetables:15638 bounce:0
kernel_misc_reclaimable:0
free:153117 free_pcp:733 free_cma:0
Sep 02 08:57:29 proxmox-2 kernel: Node 0 active_anon:60206284kB inactive_anon:67036072kB active_file:0kB inactive_file:1184kB unevictable:67276kB isolated(anon):0kB isolated(file):0kB mapped:39100kB dirty:0kB writeback:0kB shmem:25240kB shmem_thp:0kB shmem_pmdmapped:0kB anon_thp:86706176kB writeback_tmp:0kB kernel_stack:18984kB p>
Sep 02 08:57:29 proxmox-2 kernel: Node 1 active_anon:20003984kB inactive_anon:109781992kB active_file:0kB inactive_file:5908kB unevictable:141204kB isolated(anon):0kB isolated(file):0kB mapped:64220kB dirty:0kB writeback:0kB shmem:67264kB shmem_thp:0kB shmem_pmdmapped:0kB anon_thp:75548672kB writeback_tmp:0kB kernel_stack:11032kB>
Sep 02 08:57:29 proxmox-2 kernel: Node 0 DMA free:11264kB boost:0kB min:4kB low:16kB high:28kB reserved_highatomic:0KB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15996kB managed:15360kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB
Sep 02 08:57:29 proxmox-2 kernel: lowmem_reserve[]: 0 735 128190 128190 128190
Sep 02 08:57:29 proxmox-2 kernel: Node 0 DMA32 free:509584kB boost:0kB min:256kB low:1008kB high:1760kB reserved_highatomic:0KB active_anon:151808kB inactive_anon:142068kB active_file:0kB inactive_file:12kB unevictable:0kB writepending:0kB present:878632kB managed:813092kB mlocked:0kB bounce:0kB free_pcp:500kB local_pcp:0kB free_>
Sep 02 08:57:29 proxmox-2 kernel: lowmem_reserve[]: 0 0 127455 127455 127455
Sep 02 08:57:29 proxmox-2 kernel: Node 0 Normal free:46692kB boost:0kB min:44668kB low:175180kB high:305692kB reserved_highatomic:2048KB active_anon:60054480kB inactive_anon:66894000kB active_file:0kB inactive_file:1064kB unevictable:67276kB writepending:0kB present:132620224kB managed:130514484kB mlocked:64204kB bounce:0kB free_>
Sep 02 08:57:29 proxmox-2 kernel: lowmem_reserve[]: 0 0 0 0 0
Sep 02 08:57:29 proxmox-2 kernel: Node 1 Normal free:44928kB boost:0kB min:45180kB low:177188kB high:309196kB reserved_highatomic:0KB active_anon:20003984kB inactive_anon:109781992kB active_file:0kB inactive_file:5908kB unevictable:141204kB writepending:0kB present:134181632kB managed:132019088kB mlocked:141204kB bounce:0kB free_>
Sep 02 08:57:29 proxmox-2 kernel: lowmem_reserve[]: 0 0 0 0 0
Sep 02 08:57:29 proxmox-2 kernel: Node 0 DMA: 0*4kB 0*8kB 0*16kB 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 1*1024kB (U) 1*2048kB (M) 2*4096kB (M) = 11264kB
Sep 02 08:57:29 proxmox-2 kernel: Node 0 DMA32: 2*4kB (UM) 12*8kB (UME) 13*16kB (UME) 13*32kB (ME) 13*64kB (ME) 15*128kB (UME) 13*256kB (UME) 8*512kB (UME) 7*1024kB (UM) 4*2048kB (U) 118*4096kB (UME) = 509592kB
Sep 02 08:57:29 proxmox-2 kernel: Node 0 Normal: 734*4kB (UME) 710*8kB (ME) 431*16kB (UME) 486*32kB (UME) 203*64kB (UME) 24*128kB (UM) 1*256kB (U) 0*512kB 0*1024kB 0*2048kB 0*4096kB = 47384kB
Sep 02 08:57:29 proxmox-2 kernel: Node 1 Normal: 9*4kB (UME) 141*8kB (UME) 981*16kB (UM) 788*32kB (UME) 17*64kB (M) 0*128kB 0*256kB 0*512kB 0*1024kB 1*2048kB (M) 0*4096kB = 45212kB
Sep 02 08:57:29 proxmox-2 kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB
Sep 02 08:57:29 proxmox-2 kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB
Sep 02 08:57:29 proxmox-2 kernel: Node 1 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB
Sep 02 08:57:29 proxmox-2 kernel: Node 1 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB
Sep 02 08:57:29 proxmox-2 kernel: 58626 total pagecache pages
Sep 02 08:57:29 proxmox-2 kernel: 29370 pages in swap cache
Sep 02 08:57:29 proxmox-2 kernel: Free swap = 44kB
Sep 02 08:57:29 proxmox-2 kernel: Total swap = 8388604kB
Sep 02 08:57:29 proxmox-2 kernel: 66924121 pages RAM
Sep 02 08:57:29 proxmox-2 kernel: 0 pages HighMem/MovableOnly
Sep 02 08:57:29 proxmox-2 kernel: 1083615 pages reserved
Sep 02 08:57:29 proxmox-2 kernel: 0 pages hwpoisoned
```

Today, I see that this host is still using 100% of its 8GB of swap, even though there is plenty of free RAM.
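For reference, a few of the summary lines at the end of that OOM report convert to more familiar units with simple arithmetic. This is a minimal sketch that assumes a 4 KiB base page size (the x86_64 default) and reads the kernel's "kB" as KiB; the helper names are hypothetical, and the sample values are copied from the log above.

```python
import re

PAGE_KIB = 4  # assumption: 4 KiB base pages (x86_64 default)

# Summary lines copied from the OOM report (timestamps stripped).
SUMMARY = """\
Free swap = 44kB
Total swap = 8388604kB
66924121 pages RAM
1083615 pages reserved
"""

# (free kB, min watermark kB) taken from the "Node N Normal free:" lines.
ZONES = {
    "Node 0 Normal": (46692, 44668),
    "Node 1 Normal": (44928, 45180),
}


def parse_summary(text: str) -> dict:
    """Convert the OOM summary counters into GiB and a swap-used percentage."""
    free_swap = int(re.search(r"Free swap = (\d+)kB", text).group(1))
    total_swap = int(re.search(r"Total swap = (\d+)kB", text).group(1))
    ram_pages = int(re.search(r"(\d+) pages RAM", text).group(1))
    reserved_pages = int(re.search(r"(\d+) pages reserved", text).group(1))
    return {
        "ram_gib": ram_pages * PAGE_KIB / 1024**2,
        "reserved_gib": reserved_pages * PAGE_KIB / 1024**2,
        "swap_total_gib": total_swap / 1024**2,
        "swap_used_pct": 100 * (total_swap - free_swap) / total_swap,
    }


def below_min(zones: dict) -> list:
    """Return the zones whose free memory was below their min watermark."""
    return [name for name, (free, wmark_min) in zones.items() if free < wmark_min]


if __name__ == "__main__":
    s = parse_summary(SUMMARY)
    print(f"RAM: {s['ram_gib']:.1f} GiB ({s['reserved_gib']:.1f} GiB reserved)")
    print(f"Swap: {s['swap_total_gib']:.1f} GiB, {s['swap_used_pct']:.3f}% used")
    print("Zones below min watermark:", below_min(ZONES))
```

Read this way, the report shows roughly 255 GiB of RAM, essentially all 8 GiB of swap in use, and the Node 1 Normal zone below its min watermark at the moment of the kill (Node 0 Normal was only just above it).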

Searching these forums, some posts suggest that swap space is important for coalescing pages, while others say swap should be avoided altogether. However, many of these posts are fairly dated (5+ years old), so they may not reflect current "best practice".
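On the page-coalescing point, the per-order free lists in the OOM report (the `Node N Normal:` lines) show how fragmented free memory was at the time. The sketch below parses one such line, copied from the log, and reports the total free memory and the largest free block; the function name is hypothetical, and reading free-list depth as a fragmentation signal is a rough heuristic rather than a diagnosis.

```python
import re

# Buddy-allocator free lists for Node 0 Normal, copied from the OOM report.
LINE = ("Node 0 Normal: 734*4kB (UME) 710*8kB (ME) 431*16kB (UME) 486*32kB (UME) "
        "203*64kB (UME) 24*128kB (UM) 1*256kB (U) 0*512kB 0*1024kB 0*2048kB "
        "0*4096kB = 47384kB")


def parse_free_lists(line: str) -> dict:
    """Map block size (kB) to the number of free blocks of that size."""
    return {int(size): int(count)
            for count, size in re.findall(r"(\d+)\*(\d+)kB", line)}


if __name__ == "__main__":
    blocks = parse_free_lists(LINE)
    total = sum(size * count for size, count in blocks.items())
    largest = max(size for size, count in blocks.items() if count > 0)
    print(f"total free: {total} kB, largest free block: {largest} kB")
```

The computed total matches the `= 47384kB` the kernel printed, and nothing larger than 256 kB was free in that zone. Note, though, that the allocation that triggered this particular OOM kill was `order=0` (a single page), per the first log line.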

So, a few questions:

- Is the 8GB default set by the PVE installer the right amount for a host with 256GB of physical RAM? Short of manual partitioning, I don't see a way to change it during setup. I'm currently investigating upgrading the cluster from PVE 8 to 9; would that be an ideal time to make the change?

- Should I increase the amount of swap on the host? The local drive is a 400GB SSD, so I could allocate more space to swap if it would help, but I worry that some underlying mechanism would just push swap usage to fill whatever space is available. Is this a "buffer bloat"-like situation?

- What's the best way to free up this swap space? The UI shows the swap usage graph in red, which suggests "unhealthy", but I don't see how to nudge PVE into doing whatever is required to make it "healthy" again.

- The hosts' value of `vm.swappiness` is the default of 60; is this still the recommended setting?
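To see which processes are actually holding the swapped-out pages (for example, the `kvm` processes backing the VMs), one option is to read the `VmSwap` field from each `/proc/<pid>/status` file, alongside the current `/proc/sys/vm/swappiness`. This is a minimal, Linux-only sketch; the helper names are hypothetical.

```python
import os
import re


def parse_vmswap_kb(status_text: str) -> int:
    """Return VmSwap (kB) from a /proc/<pid>/status text, or 0 if absent."""
    m = re.search(r"^VmSwap:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(m.group(1)) if m else 0


def parse_name(status_text: str) -> str:
    """Return the process name from the Name: field."""
    m = re.search(r"^Name:\s+(.*)$", status_text, re.MULTILINE)
    return m.group(1).strip() if m else "?"


def top_swap_users(proc: str = "/proc", limit: int = 10) -> list:
    """List (swap_kb, pid, name) for processes using swap, largest first."""
    users = []
    for entry in os.listdir(proc):
        if not entry.isdigit():
            continue  # skip non-PID entries such as /proc/meminfo
        try:
            with open(os.path.join(proc, entry, "status")) as f:
                text = f.read()
        except OSError:
            continue  # process exited or status is not readable
        kb = parse_vmswap_kb(text)
        if kb > 0:
            users.append((kb, int(entry), parse_name(text)))
    return sorted(users, reverse=True)[:limit]


if __name__ == "__main__":
    with open("/proc/sys/vm/swappiness") as f:
        print("vm.swappiness =", f.read().strip())
    for kb, pid, name in top_swap_users():
        print(f"{kb / 1024:10.1f} MiB  {pid:>8}  {name}")
```

`VmSwap` covers only a process's swapped-out private anonymous pages; swapped shmem is not included, so the per-process totals may not add up to the host-wide swap usage.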

-Cheers,

speck
