Hey guys, I ran some experiments recently and I think I finally found out why Windows performs poorly when the CPU type is set to host. The complete experiment process and conclusions are in my blog (it is in Chinese, so use Google Translate!).

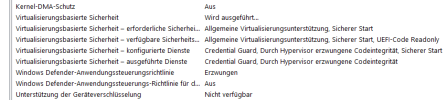

In short, the experiments showed that two CPU flags, md_clear and flush_l1d, cause the performance problems. When they are exposed to the guest, Windows activates its CPU vulnerability mitigations, which significantly increases memory read latency and makes Windows freeze.

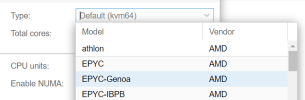

The two flags md_clear and flush_l1d are not passed to the virtual machine with older CPU types such as x86_64-v2-AES or Ivybridge-IBRS. This means Windows does not, and cannot, enable its CPU side-channel vulnerability mitigations with these CPU types, so performance is not affected. This explains why Windows runs normally with these types but stutters with host, which in theory is the most capable type.
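If you want to check whether these two flags are visible on a given machine, something like the following should work. This is only a rough sketch, assuming a Linux system that exposes `/proc/cpuinfo` (for example the Proxmox host itself, or a Linux guest booted with the CPU type you want to inspect). The function names `parse_cpu_flags` and `report_flags` are made up for this example and are not part of any Proxmox tooling.

```python
from __future__ import annotations

from pathlib import Path

# The two flags this post identifies as triggering the Windows mitigations.
FLAGS_OF_INTEREST = ("md_clear", "flush_l1d")


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line found


def report_flags(cpuinfo_text: str) -> dict[str, bool]:
    """Map each flag of interest to whether it appears in the CPU flag list."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {name: name in flags for name in FLAGS_OF_INTEREST}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # assumption: running on Linux
    if cpuinfo.exists():
        for name, present in report_flags(cpuinfo.read_text()).items():
            print(f"{name}: {'present' if present else 'absent'}")
    else:
        print("/proc/cpuinfo not found; run this on a Linux host or guest")
```

Running it once on the host and once inside a Linux guest that uses the same CPU type as the Windows VM gives a quick way to see whether the flags are being passed through.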

The good news is that neither launching the Windows hypervisor (Hyper-V, which can be turned off with bcdedit /set hypervisorlaunchtype off) nor VBS is what causes the performance degradation. Using the method in my blog, you can also run nested virtualization in Windows without using the host CPU type.

This information does not appear in the official Proxmox Windows best practices, so many people are confused, and I have not seen anyone give a specific reason so far. That is why I am posting here. You can find an alternative to using host directly in my blog.
