Hello!

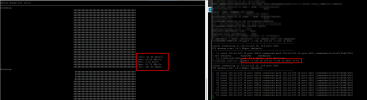

I have a five-node Proxmox cluster. Each node has a 100 Gb/s Mellanox ConnectX-4 or ConnectX-5 network card, and all servers have an identical network interface configuration. On these cards I have set up LACP, with bridges on top of the bond and VLANs on top of those (screenshot attached).

For live migration I use a dedicated VLAN (bond0.107): the migration network is set to this VLAN's subnet, with type=insecure. Nothing else runs over this VLAN. All Proxmox nodes are connected to a 100 Gb/s switch.
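The log line "use dedicated network address for sending migration traffic" suggests that Proxmox picks the node address that falls inside the configured migration network. Below is a rough sketch of that selection, not Proxmox's actual code. It assumes the datacenter.cfg `migration:` value has the form `type=insecure,network=<CIDR>`. The /24 mask and the 10.0.0.15 management address are hypothetical.

```python
import ipaddress

def parse_migration_option(value: str) -> dict:
    """Split a 'migration:' value such as 'type=insecure,network=...' into a dict."""
    opts = {}
    for part in value.split(","):
        key, sep, val = part.strip().partition("=")
        if sep:
            opts[key] = val
        else:
            opts["type"] = key  # assumption: a bare value is shorthand for type=<value>
    return opts

def pick_migration_address(local_addresses, cidr):
    """Return the first local address inside the migration network, or None."""
    network = ipaddress.ip_network(cidr)
    for addr in local_addresses:
        if ipaddress.ip_address(addr) in network:
            return addr
    return None

# Hypothetical config value: the /24 mask is an assumption.
opts = parse_migration_option("type=insecure,network=172.16.107.0/24")
# Hypothetical address list: 10.0.0.15 stands in for a management address.
print(opts["type"])  # insecure
print(pick_migration_address(["10.0.0.15", "172.16.107.15"], opts["network"]))  # 172.16.107.15
```

If no local address matched the network, the sketch returns None. How Proxmox itself handles that case is not covered here.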

Jumbo frames are, of course, enabled on this VLAN (MTU 9000).
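In the ping test below, `-s` sets only the ICMP payload. The reported 8000(8028) is 8000 bytes of payload plus an 8-byte ICMP header and a 20-byte IPv4 header, and each reply shows 8008 bytes (payload plus ICMP header). The sketch below reproduces that arithmetic, assuming IPv4 without header options. It also gives the largest payload that fits an MTU of 9000 when fragmentation is forbidden with `-M do`:

```python
IPV4_HEADER = 20  # bytes; assumes IPv4 without header options
ICMP_HEADER = 8   # bytes; ICMP echo request/reply header

def ip_packet_size(payload: int) -> int:
    """Total IPv4 packet size for a given `ping -s` payload (the number in parentheses)."""
    return payload + ICMP_HEADER + IPV4_HEADER

def max_ping_payload(mtu: int) -> int:
    """Largest `ping -s` payload that fits one packet when fragmentation is forbidden (-M do)."""
    return mtu - IPV4_HEADER - ICMP_HEADER

print(ip_packet_size(8000))         # 8028, as in "8000(8028) bytes of data"
print(max_ping_payload(9000))       # 8972
print(9000 - ip_packet_size(8000))  # 972 bytes of the MTU not exercised by -s 8000
```

A `-s 8972` run with `-M do` would therefore test the full 9000-byte MTU end to end.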

Code:

ping -M do -s 8000 172.16.107.16
PING 172.16.107.16 (172.16.107.16) 8000(8028) bytes of data.
8008 bytes from 172.16.107.16: icmp_seq=1 ttl=64 time=0.265 ms
8008 bytes from 172.16.107.16: icmp_seq=2 ttl=64 time=0.233 ms
8008 bytes from 172.16.107.16: icmp_seq=3 ttl=64 time=0.226 ms
8008 bytes from 172.16.107.16: icmp_seq=4 ttl=64 time=0.215 ms
^C
--- 172.16.107.16 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3075ms
rtt min/avg/max/mdev = 0.215/0.234/0.265/0.018 ms
root@NODE1:~# ping -M do -s 8000 172.16.107.15
PING 172.16.107.15 (172.16.107.15) 8000(8028) bytes of data.
8008 bytes from 172.16.107.15: icmp_seq=1 ttl=64 time=0.077 ms
8008 bytes from 172.16.107.15: icmp_seq=2 ttl=64 time=0.055 ms
8008 bytes from 172.16.107.15: icmp_seq=3 ttl=64 time=0.051 ms

My problem is that live migration is quite slow, in my opinion. I can't get more than ~1.8 GiB/s (~15 Gb/s), and the rate often drops much lower, into the MiB/s range. Is there anything else I can do or check?

One idea is to change the LACP "hash policy". I currently use layer2 and would switch to layer2+3. I would appreciate any suggestions, thank you!
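The Linux bonding documentation describes how each transmit hash policy picks a member link. The simplified model below ignores byte order and other kernel details, and its MAC addresses and source ports are hypothetical. It shows that with layer2 or layer2+3, all traffic between the same two hosts hashes to the same link, because neither policy looks at TCP ports. Only layer3+4 can place different TCP connections between one pair of hosts on different links. A single TCP connection always stays on one link, so if the migration runs as one stream (as the `tcp:…:60000` target in the log suggests), it is capped at one member's 100 Gb/s, even though `ethtool bond0` reports 200000Mb/s. The policy also governs only what a host transmits. The switch applies its own hash to the return direction.

```python
import ipaddress

# Simplified model of the bonding xmit_hash_policy formulas from the kernel
# bonding documentation; byte order and other kernel details are ignored.

def _mac_low_byte(mac: str) -> int:
    return int(mac.split(":")[-1], 16)

def _ip(addr: str) -> int:
    return int(ipaddress.ip_address(addr))

def _fold(h: int) -> int:
    h ^= h >> 16
    h ^= h >> 8
    return h

def layer2(src_mac, dst_mac, links, ptype=0x0800):
    # last MAC byte of source XOR destination XOR packet type, modulo link count
    return (_mac_low_byte(src_mac) ^ _mac_low_byte(dst_mac) ^ ptype) % links

def layer2_3(src_mac, dst_mac, src_ip, dst_ip, links, ptype=0x0800):
    h = _mac_low_byte(src_mac) ^ _mac_low_byte(dst_mac) ^ ptype
    h ^= _ip(src_ip) ^ _ip(dst_ip)
    return _fold(h) % links

def layer3_4(src_ip, dst_ip, sport, dport, links):
    h = (sport << 16) | dport  # both ports, as they sit in the TCP header
    h ^= _ip(src_ip) ^ _ip(dst_ip)
    return (_fold(h) >> 1) % links

# Hypothetical MACs; the IPs and port 60000 come from the migration log.
SRC_MAC, DST_MAC = "aa:bb:cc:dd:ee:16", "aa:bb:cc:dd:ee:15"
SRC_IP, DST_IP = "172.16.107.16", "172.16.107.15"
sports = range(40000, 40016)  # 16 hypothetical connections between the same two hosts

used = {
    "layer2": {layer2(SRC_MAC, DST_MAC, 2) for _ in sports},
    "layer2+3": {layer2_3(SRC_MAC, DST_MAC, SRC_IP, DST_IP, 2) for _ in sports},
    "layer3+4": {layer3_4(SRC_IP, DST_IP, sp, 60000, 2) for sp in sports},
}
for policy, links in used.items():
    print(policy, "->", len(links), "link(s) used")
# layer2 -> 1 link(s) used
# layer2+3 -> 1 link(s) used
# layer3+4 -> 2 link(s) used
```

Under this model, switching from layer2 to layer2+3 would not change which link carries traffic between two given nodes.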

I would be grateful for any tips.

P.S. I ran iperf3 tests over this VLAN. With a single server I got around 30 Gb/s, and with multiple servers it reached around 50 Gb/s.
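The migration log reports binary byte units (GiB/s, MiB/s), while iperf3 and link speeds use decimal bits per second. The conversion sketch below puts the figures on the same scale. It assumes only that 1 GiB = 2^30 bytes and 1 MiB = 2^20 bytes:

```python
GIB = 2**30  # bytes
MIB = 2**20  # bytes

def to_gbit_per_s(bytes_per_s: float) -> float:
    """Convert bytes/s to decimal gigabits/s, the unit iperf3 and link speeds use."""
    return bytes_per_s * 8 / 1e9

migration_peak = to_gbit_per_s(1.8 * GIB)    # peak rate in the VM 110 log
migration_slow = to_gbit_per_s(413.0 * MIB)  # "average migration speed" of VM 105
print(f"{migration_peak:.1f} Gb/s")  # 15.5 Gb/s
print(f"{migration_slow:.2f} Gb/s")  # 3.46 Gb/s
print(f"{migration_peak / 30:.0%} of the single-server iperf3 result")  # 52% ...
```

So the peak migration rate is roughly half of what a single iperf3 run achieved on the same VLAN. The 2 GiB VM in the second log averaged about 3.5 Gb/s.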

Example of live migration logs (Proxmox VE 8.3.3):

Code:

task started by HA resource agent
2025-02-12 12:22:06 use dedicated network address for sending migration traffic (172.16.107.15)
2025-02-12 12:22:06 starting migration of VM 110 to node 'NODE1' (172.16.107.15)
2025-02-12 12:22:06 starting VM 110 on remote node 'NODE1'
2025-02-12 12:22:11 start remote tunnel
2025-02-12 12:22:12 ssh tunnel ver 1
2025-02-12 12:22:12 starting online/live migration on tcp:172.16.107.15:60000
2025-02-12 12:22:12 set migration capabilities
2025-02-12 12:22:12 migration downtime limit: 100 ms
2025-02-12 12:22:12 migration cachesize: 4.0 GiB
2025-02-12 12:22:12 set migration parameters
2025-02-12 12:22:12 start migrate command to tcp:172.16.107.15:60000
2025-02-12 12:22:13 migration active, transferred 1.3 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:14 migration active, transferred 2.8 GiB of 32.0 GiB VM-state, 1.6 GiB/s
2025-02-12 12:22:15 migration active, transferred 4.3 GiB of 32.0 GiB VM-state, 1.5 GiB/s
2025-02-12 12:22:16 migration active, transferred 5.8 GiB of 32.0 GiB VM-state, 1.5 GiB/s
2025-02-12 12:22:17 migration active, transferred 7.3 GiB of 32.0 GiB VM-state, 1.5 GiB/s
2025-02-12 12:22:18 migration active, transferred 9.0 GiB of 32.0 GiB VM-state, 1.6 GiB/s
2025-02-12 12:22:19 migration active, transferred 10.7 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:20 migration active, transferred 12.4 GiB of 32.0 GiB VM-state, 1.7 GiB/s
2025-02-12 12:22:21 migration active, transferred 14.1 GiB of 32.0 GiB VM-state, 1.4 GiB/s
2025-02-12 12:22:22 migration active, transferred 15.6 GiB of 32.0 GiB VM-state, 1.5 GiB/s
2025-02-12 12:22:23 migration active, transferred 17.3 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:24 migration active, transferred 19.0 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:25 migration active, transferred 20.7 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:26 migration active, transferred 22.4 GiB of 32.0 GiB VM-state, 1.7 GiB/s
2025-02-12 12:22:27 migration active, transferred 24.2 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:28 migration active, transferred 25.9 GiB of 32.0 GiB VM-state, 1.7 GiB/s
2025-02-12 12:22:29 migration active, transferred 27.6 GiB of 32.0 GiB VM-state, 1.7 GiB/s
2025-02-12 12:22:30 migration active, transferred 29.3 GiB of 32.0 GiB VM-state, 1.8 GiB/s
2025-02-12 12:22:31 migration active, transferred 30.9 GiB of 32.0 GiB VM-state, 427.8 MiB/s
2025-02-12 12:22:33 migration active, transferred 31.0 GiB of 32.0 GiB VM-state, 16.5 MiB/s
2025-02-12 12:22:34 migration active, transferred 31.1 GiB of 32.0 GiB VM-state, 225.6 MiB/s
2025-02-12 12:22:35 auto-increased downtime to continue migration: 200 ms
2025-02-12 12:22:35 migration active, transferred 31.2 GiB of 32.0 GiB VM-state, 101.1 MiB/s
2025-02-12 12:22:35 xbzrle: send updates to 33617 pages in 5.1 MiB encoded memory, cache-miss 41.07%, overflow 62
2025-02-12 12:22:36 auto-increased downtime to continue migration: 400 ms
2025-02-12 12:22:37 migration active, transferred 31.2 GiB of 32.0 GiB VM-state, 107.6 MiB/s
2025-02-12 12:22:37 xbzrle: send updates to 69631 pages in 7.2 MiB encoded memory, cache-miss 6.98%, overflow 76
2025-02-12 12:22:38 migration active, transferred 31.2 GiB of 32.0 GiB VM-state, 86.3 MiB/s, VM dirties lots of memory: 114.3 MiB/s
2025-02-12 12:22:38 xbzrle: send updates to 99985 pages in 8.9 MiB encoded memory, cache-miss 25.69%, overflow 79
2025-02-12 12:22:38 auto-increased downtime to continue migration: 800 ms
2025-02-12 12:22:39 average migration speed: 1.2 GiB/s - downtime 252 ms
2025-02-12 12:22:39 migration status: completed
2025-02-12 12:22:43 migration finished successfully (duration 00:00:38)
TASK OK
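The "transferred" counters in the log can be used to cross-check the per-second rates. The rough parser sketch below computes the average rate between two "migration active" lines. Its regex is written against the log lines shown above, and other Proxmox versions may word them differently:

```python
import re
from datetime import datetime

LINE = re.compile(
    r"^(\S+ \S+) migration active, transferred ([\d.]+) (MiB|GiB) of ([\d.]+) (MiB|GiB) VM-state"
)
UNIT = {"MiB": 2**20, "GiB": 2**30}

def parse(lines):
    """Yield (timestamp, bytes transferred) for each 'migration active' line."""
    for line in lines:
        m = LINE.match(line)
        if m:
            ts = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S")
            yield ts, float(m.group(2)) * UNIT[m.group(3)]

def average_rate(lines) -> float:
    """Bytes per second between the first and last 'migration active' lines."""
    points = list(parse(lines))
    (t0, b0), (t1, b1) = points[0], points[-1]
    return (b1 - b0) / (t1 - t0).total_seconds()

log = [
    "2025-02-12 12:22:13 migration active, transferred 1.3 GiB of 32.0 GiB VM-state, 1.8 GiB/s",
    "2025-02-12 12:22:30 migration active, transferred 29.3 GiB of 32.0 GiB VM-state, 1.8 GiB/s",
    "2025-02-12 12:22:38 migration active, transferred 31.2 GiB of 32.0 GiB VM-state, 86.3 MiB/s, VM dirties lots of memory: 114.3 MiB/s",
]
bulk = average_rate(log[:2]) / 2**30
tail = average_rate(log[1:]) / 2**20
print(f"bulk phase: {bulk:.2f} GiB/s")  # bulk phase: 1.65 GiB/s
print(f"tail phase: {tail:.0f} MiB/s")  # tail phase: 243 MiB/s
```

On this log, the bulk copy (12:22:13 to 12:22:30) averages about 1.65 GiB/s. The next 8 seconds move only about 1.9 GiB in total. This is the phase where the log reports auto-increased downtime and "VM dirties lots of memory".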

Code:

2025-02-12 12:23:01 use dedicated network address for sending migration traffic (172.16.107.18)
2025-02-12 12:23:01 starting migration of VM 105 to node 'NODE3' (172.16.107.18)
2025-02-12 12:23:01 starting VM 105 on remote node 'NODE3'
2025-02-12 12:23:06 start remote tunnel
2025-02-12 12:23:08 ssh tunnel ver 1
2025-02-12 12:23:08 starting online/live migration on tcp:172.16.107.18:60000
2025-02-12 12:23:08 set migration capabilities
2025-02-12 12:23:08 migration downtime limit: 100 ms
2025-02-12 12:23:08 migration cachesize: 256.0 MiB
2025-02-12 12:23:08 set migration parameters
2025-02-12 12:23:08 start migrate command to tcp:172.16.107.18:60000
2025-02-12 12:23:09 migration active, transferred 368.5 MiB of 2.0 GiB VM-state, 403.9 MiB/s
2025-02-12 12:23:10 migration active, transferred 729.2 MiB of 2.0 GiB VM-state, 434.7 MiB/s
2025-02-12 12:23:11 migration active, transferred 1.1 GiB of 2.0 GiB VM-state, 348.2 MiB/s
2025-02-12 12:23:12 migration active, transferred 1.4 GiB of 2.0 GiB VM-state, 369.1 MiB/s
2025-02-12 12:23:13 average migration speed: 413.0 MiB/s - downtime 31 ms
2025-02-12 12:23:13 migration status: completed
2025-02-12 12:23:17 migration finished successfully (duration 00:00:17)
TASK OK

"ethtool" command results:

Code:

ethtool bond0
Settings for bond0:
	Supported ports: [ ]
	Supported link modes:   Not reported
	Supported pause frame use: No
	Supports auto-negotiation: No
	Supported FEC modes: Not reported
	Advertised link modes:  Not reported
	Advertised pause frame use: No
	Advertised auto-negotiation: No
	Advertised FEC modes: Not reported
	Speed: 200000Mb/s
	Duplex: Full
	Auto-negotiation: off
	Port: Other
	PHYAD: 0
	Transceiver: internal
	Link detected: yes
root@NODE1:~# ethtool bond0.107
Settings for bond0.107:
	Supported ports: [ ]
	Supported link modes:   Not reported
	Supported pause frame use: No
	Supports auto-negotiation: No
	Supported FEC modes: Not reported
	Advertised link modes:  Not reported
	Advertised pause frame use: No
	Advertised auto-negotiation: No
	Advertised FEC modes: Not reported
	Speed: 200000Mb/s
	Duplex: Full
	Auto-negotiation: off
	Port: Other
	PHYAD: 0
	Transceiver: internal
	Link detected: yes
root@NODE1:~# ethtool ens1f0np0
Settings for ens1f0np0:
	Supported ports: [ Backplane ]
	Supported link modes:   1000baseKX/Full
	                        10000baseKR/Full
	                        40000baseKR4/Full
	                        40000baseCR4/Full
	                        40000baseSR4/Full
	                        40000baseLR4/Full
	                        56000baseKR4/Full
	                        25000baseCR/Full
	                        25000baseKR/Full
	                        25000baseSR/Full
	                        50000baseCR2/Full
	                        50000baseKR2/Full
	                        100000baseKR4/Full
	                        100000baseSR4/Full
	                        100000baseCR4/Full
	                        100000baseLR4_ER4/Full
	Supported pause frame use: Symmetric
	Supports auto-negotiation: Yes
	Supported FEC modes: None RS BASER
	Advertised link modes:  1000baseKX/Full
	                        10000baseKR/Full
	                        40000baseKR4/Full
	                        40000baseCR4/Full
	                        40000baseSR4/Full
	                        40000baseLR4/Full
	                        56000baseKR4/Full
	                        25000baseCR/Full
	                        25000baseKR/Full
	                        25000baseSR/Full
	                        50000baseCR2/Full
	                        50000baseKR2/Full
	                        100000baseKR4/Full
	                        100000baseSR4/Full
	                        100000baseCR4/Full
	                        100000baseLR4_ER4/Full
	Advertised pause frame use: Symmetric
	Advertised auto-negotiation: Yes
	Advertised FEC modes: RS
	Link partner advertised link modes: Not reported
	Link partner advertised pause frame use: No
	Link partner advertised auto-negotiation: Yes
	Link partner advertised FEC modes: Not reported
	Speed: 100000Mb/s
	Duplex: Full
	Auto-negotiation: on
	Port: Direct Attach Copper
	PHYAD: 0
	Transceiver: internal
	Supports Wake-on: d
	Wake-on: d
	Link detected: yes

Yours faithfully,

Marek