VictorSTS's latest activity

VictorSTS reacted to t.lamprecht's post in the thread Proxmox Datacenter Manager 1.0 (stable) with Like.

We're very excited to present the first stable release of our new Proxmox Datacenter Manager! Proxmox Datacenter Manager is an open-source, centralized management solution to oversee and manage multiple, independent Proxmox-based environments...

Like.

We're very excited to present the first stable release of our new Proxmox Datacenter Manager! Proxmox Datacenter Manager is an open-source, centralized management solution to oversee and manage multiple, independent Proxmox-based environments... -

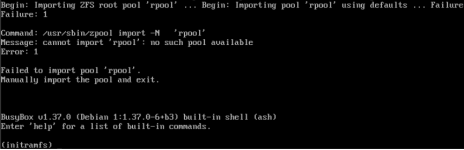

VictorSTS replied to the thread ZFS rpool isn't imported automatically after update from PVE9.0.10 to latest PVE9.1 and kernel 6.17 with viommu=virtio.

I thought it would be related to nested virtualization / virtio vIOMMU. I haven't seen any issue on bare metal yet. Can you manually import the pool and continue booting once the disks are detected (zpool import rpool)?

VictorSTS replied to the thread Any news on lxc online migration?

For reference, see https://forum.proxmox.com/threads/proxmox-ve-8-4-released.164821/page-2#post-762577. CRIU doesn't seem to be powerful / mature enough to be used as an option, and IMHO it seems that Proxmox would have to develop a tool for live migrating...

VictorSTS replied to the thread iSCSI multipath issue.

As mentioned previously, PVE connects iSCSI disks later in the boot process than multipath expects them to be online, so multipath won't be able to use the disks. You can't use multipath with iSCSI disks managed/connected/configured...

VictorSTS reacted to bbgeek17's post in the thread PSA: PVE 9.X iSCSI/iscsiadm upgrade incompatibility with Like.

Hi @VictorSTS , You are correct. If there are existing entries in the iSCSI database (iscsiadm -m nodes) at the time of the upgrade, they will cause issues when you try to modify them afterward. Other scenarios can also lead to problems, for...
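
A minimal Python sketch of a pre-upgrade inventory of those existing node records, assuming the usual `iscsiadm -m node` output of one `<portal>,<tpgt> <target IQN>` line per record. The `NodeRecord` type and both function names are hypothetical; the sketch only lists what is in the database and does not repair anything.

```python
import subprocess
from typing import List, NamedTuple


class NodeRecord(NamedTuple):
    portal: str  # "address:port"; IPv6 addresses appear in brackets
    tpgt: str    # target portal group tag
    target: str  # target IQN


def parse_node_list(output: str) -> List[NodeRecord]:
    """Parse lines of the form "<portal>,<tpgt> <target-iqn>"."""
    records = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue  # not a node record line (e.g. an error message)
        portal, sep, tpgt = fields[0].rpartition(",")
        if not sep:
            continue  # no ",<tpgt>" suffix, so not a node record
        records.append(NodeRecord(portal, tpgt, fields[1]))
    return records


def list_node_records() -> List[NodeRecord]:
    """Run `iscsiadm -m node` and parse whatever records it prints."""
    # check=False: iscsiadm may exit non-zero, e.g. when no records exist.
    result = subprocess.run(
        ["iscsiadm", "-m", "node"],
        capture_output=True, text=True, check=False,
    )
    return parse_node_list(result.stdout)
```

Running `list_node_records()` on each host before upgrading gives a list of the pre-existing records the post warns about.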

VictorSTS replied to the thread PSA: PVE 9.X iSCSI/iscsiadm upgrade incompatibility.

IIUC, this may/will affect iSCSI deployments configured on PVE 8.x when updating to PVE 9.x, am I right? New deployments with PVE 9.x should work correctly? Thanks!

VictorSTS reacted to bbgeek17's post in the thread PSA: PVE 9.X iSCSI/iscsiadm upgrade incompatibility with Like.

Hello everyone, This is a brief public service announcement regarding an iSCSI upgrade incompatibility affecting PVE 9.x. A bug introduced in iscsiadm in 2023 prevents the tool from parsing the iSCSI database generated by earlier versions. If...

VictorSTS replied to the thread [PROXMOX CLUSTER] Add NFS resource to Proxmox from a NAS for backup.

I can't really recommend anything specific without infrastructure details, but I would definitely use some VPN and tunnel NFS traffic inside it, both for obvious security reasons and for ease of management on the WAN side (you'll only need to expose...

VictorSTS posted the thread ZFS rpool isn't imported automatically after update from PVE9.0.10 to latest PVE9.1 and kernel 6.17 with viommu=virtio in Proxmox VE: Installation and configuration.

In one of my training labs, I have a series of training VMs running PVE with nested virtualization. These VMs have two disks in a ZFS mirror for the OS and use UEFI with Secure Boot disabled and systemd-boot (no GRUB). The VMs use machine: q35,viommu=virtio for...

VictorSTS reacted to fweber's post in the thread [Feature request] Ability to enable rxbounce option in Ceph RBD storage for windows VMs with Like.

Hi, this should be resolved with Proxmox VE 9.1: When KRBD is enabled, RBD storages will automatically map disks of VMs with a Windows OS type with the rxbounce flag set, so there should be no need for a workaround anymore. See [1] for more...
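
The rule described in that reply can be sketched as a small decision function. This is a hypothetical Python illustration, not PVE's storage plugin code: it assumes PVE's Windows `ostype` values (`wxp`, `w2k`, `w2k3`, `w2k8`, `wvista`, `win7`, `win8`, `win10`, `win11`) and returns the option string one would pass to `rbd device map -o`.

```python
from typing import Optional

# PVE ostype values that denote Windows guests (assumed list).
WINDOWS_OSTYPES = {"wxp", "w2k", "w2k3", "w2k8", "wvista",
                   "win7", "win8", "win10", "win11"}


def krbd_map_options(krbd: bool, ostype: Optional[str]) -> Optional[str]:
    """Return the `rbd device map -o` option string for a VM disk, or None.

    Hypothetical illustration of the rule "KRBD + Windows ostype => rxbounce".
    rxbounce makes the kernel RBD client receive into a bounce buffer first,
    commonly used to avoid checksum errors seen with Windows guests.
    """
    if not krbd:
        return None  # disk is accessed via librbd in QEMU, nothing is mapped
    if ostype in WINDOWS_OSTYPES:
        return "rxbounce"
    return None
```

Keeping the decision in one place makes it easy to see that Linux guests and librbd-backed disks are left untouched.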

VictorSTS replied to the thread [PROXMOX CLUSTER] Add NFS resource to Proxmox from a NAS for backup.

It's unclear whether you are using a VPN or a direct connection over public IPs (I hope not; NFS traffic is unencrypted by default), but maybe some firewall and/or NAT rule isn't letting RPC traffic through properly? Maybe your Synology uses some port range for...

VictorSTS reacted to bbgeek17's post in the thread [PROXMOX CLUSTER] Add NFS resource to Proxmox from a NAS for backup with Like.

There are two operations happening in the background when adding an NFS storage pool in PVE:
- health probe
- mount

When you do a manual mount, the health probe is skipped. In PVE's case the health probe is a "showmount" command...
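
The probe-then-mount order described above can be sketched in Python. This assumes a plain `showmount -e <server>` call and its usual "Export list for <host>:" header; PVE's actual probe invocation may differ, and `probe_export` is a hypothetical helper. Because `showmount` queries the server's mountd over RPC, it can fail behind a firewall or NAT that only lets port 2049 through, even though a manual NFSv4 mount still works.

```python
import subprocess
from typing import List


def parse_showmount(output: str) -> List[str]:
    """Return the export paths from `showmount -e` output.

    Assumes an "Export list for <host>:" header followed by one
    "<path> <allowed clients>" line per export.
    """
    exports = []
    for line in output.splitlines():
        line = line.strip()
        if not line or line.startswith("Export list for"):
            continue  # skip blank lines and the header
        exports.append(line.split()[0])  # first field is the export path
    return exports


def probe_export(server: str, export: str, timeout: float = 10.0) -> bool:
    """Health probe: ask the server's mountd (over RPC) for its exports.

    Returns True only if `export` is listed; any failure counts as down.
    """
    try:
        result = subprocess.run(
            ["showmount", "-e", server],
            capture_output=True, text=True, timeout=timeout, check=True,
        )
    except (subprocess.SubprocessError, OSError):
        return False  # timeout, RPC/connection error, or showmount missing
    return export in parse_showmount(result.stdout)
```

Only when `probe_export(server, export)` returns True would the mount step follow; a manual mount skips this check entirely.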

VictorSTS reacted to t.lamprecht's post in the thread Proxmox Backup Server 4.1 released! with Like.

We're pleased to announce the release of Proxmox Backup Server 4.1. This version is based on Debian 13.2 (“Trixie”), uses Linux kernel 6.17.2-1 as the new stable default, and comes with ZFS 2.3.4 for reliable, enterprise-grade storage and...

VictorSTS replied to the thread Abysmally slow restore from backup.

IIUC, that patch seems to apply to verify tasks to improve their performance. If that's the case, words can't express how eager I am to test it once the patch gets packaged!

VictorSTS replied to the thread redundant qdevice network.

This is wrong by design: your infrastructure devices must be 100% independent from your VMs. If your PVE hosts need to reach a remote QDevice, they must reach it on their own (e.g. run a WireGuard tunnel on each PVE host). From the point of view...
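
A minimal per-host reachability check along those lines, in Python, assuming corosync-qnetd listens on its default TCP port 5403. It only tests that a TCP connection opens from the host's own network stack; it does not verify the QDevice protocol or its certificates. The function name `qnetd_reachable` is hypothetical.

```python
import socket

QNETD_PORT = 5403  # default TCP port of corosync-qnetd


def qnetd_reachable(host: str, port: int = QNETD_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the QNetd daemon can be opened.

    Run it on each PVE host itself, not inside a VM, so the check uses the
    host's own route (for example its own WireGuard tunnel).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False  # refused, unreachable, timed out, or name not resolved
```

For example, `qnetd_reachable("qdevice.example.net")` (a hypothetical hostname) run on every node should return True on all of them, independently of any VM being up.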

VictorSTS replied to the thread Snapshots as Volume-Chain Creates Large Snapshot Volumes.

IMHO, a final delete/discard should be done, too. If no discard is sent, it is left to the SAN to decide what to do with those zeroes, and depending on its capabilities (mainly thin provisioning, but also compression and deduplication) it may not free the...

VictorSTS replied to the thread PVE8to9 Prompts to Remove systemd-boot on ZFS & UEFI.

It's explicitly explained in the PVE 8 to 9 upgrade documentation [1]: that package has been split in two in Trixie, hence systemd-boot isn't needed. I've already upgraded some clusters and removed that package when pve8to9 suggested it, and the boot up...

VictorSTS replied to the thread Bug in wipe volume operation.

I already reported this [1] and it is reportedly fixed in PVE 9.1, released today, although I haven't tested it yet.

[1] https://bugzilla.proxmox.com/show_bug.cgi?id=6941

VictorSTS reacted to t.lamprecht's post in the thread Proxmox Virtual Environment 9.1 available! with Like.

We're proud to present the next iteration of our Proxmox Virtual Environment platform. This new version 9.1 is the first point release since our major update and is dedicated to refinement. This release is based on Debian 13.2 "Trixie" but we're...

VictorSTS reacted to t.lamprecht's post in the thread Opt-in Linux 6.17 Kernel for Proxmox VE 9 available on test & no-subscription with Like.

We recently uploaded the 6.17 kernel to our repositories. The current default kernel for the Proxmox VE 9 series is still 6.14, but 6.17 is now an option. We plan to use the 6.17 kernel as the new default for the Proxmox VE 9.1 release later in...
