Hello everyone,

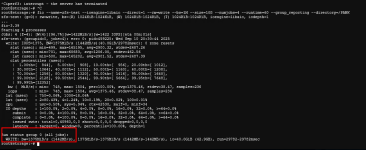

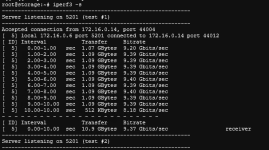

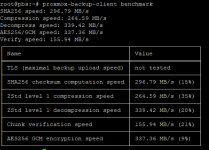

I need some help with slow backup and restore speeds on my Proxmox Backup Server (PBS). My setup includes three HP ProLiant DL360 servers with 10Gb network cards. PBS itself runs on a custom PC with the following specifications:

- CPU: Ryzen 7 8700G

- RAM: 128GB DDR5

- Storage: 4x 14TB HDDs in a RAIDZ2 ZFS pool, and 3x 128GB NVMe SSDs for cache

- Motherboard: ASUS X670E-E

- Network: 10Gb Ethernet card

Currently, the PBS is not in production, so I have the flexibility to run further tests with my ZFS setup.

Versions:

- Proxmox: 8.4.13

- PBS: 4.0.14

I also have a Debian-based NAS (a PC with RAID cards) that receives my standard vzdump backups. It is already in production, and the copy speed consistently stays around 430MB/s. This makes me believe the problem is not network performance but something related to the PBS configuration.
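As a rough sanity check on that reasoning, here is a small back-of-the-envelope calculation of how much of a 10Gb link the NAS copy speed corresponds to. It is only a sketch: it assumes decimal units and ignores protocol overhead, and the function names are just illustrative.

```python
# Rough sanity check: how much of a 10 Gb/s link does the NAS copy speed use?
# Assumes decimal units (1 Gb = 1000 Mb) and ignores protocol overhead.

LINK_GBIT_PER_S = 10      # nominal speed of the 10Gb network cards
NAS_COPY_MB_PER_S = 430   # observed vzdump copy speed to the NAS


def link_capacity_mb_per_s(gbit_per_s: float) -> float:
    """Convert a nominal link speed in Gb/s to MB/s (8 bits per byte)."""
    return gbit_per_s * 1000 / 8


def link_utilisation(observed_mb_per_s: float, gbit_per_s: float) -> float:
    """Fraction of the nominal link capacity that the observed speed uses."""
    return observed_mb_per_s / link_capacity_mb_per_s(gbit_per_s)


capacity = link_capacity_mb_per_s(LINK_GBIT_PER_S)
utilisation = link_utilisation(NAS_COPY_MB_PER_S, LINK_GBIT_PER_S)
print(f"Nominal link capacity: {capacity:.0f} MB/s")
print(f"NAS copy speed uses about {utilisation:.0%} of it")
```

In other words, the network has already been shown to carry at least 430MB/s between these machines, so I would expect PBS to get somewhere near that if the network were the only limit.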

Thank you in advance for your help!

PS: PBS benchmark results are attached.
