Hello everyone,

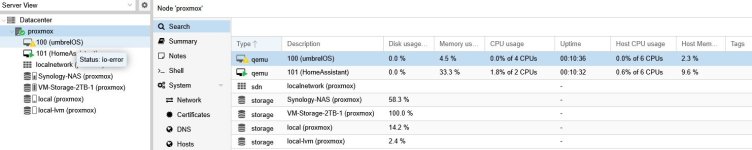

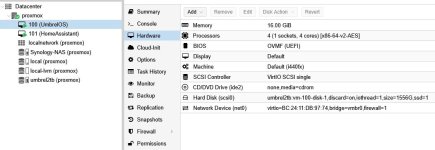

I started what is turning out to be a deep dive into Proxmox a few weeks ago. I have two VMs set up: one for Home Assistant (running fine) and one for Umbrel.

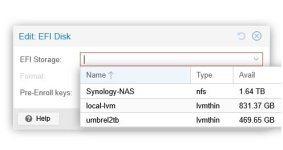

I installed Proxmox on a 500GB USB-connected SSD, and I have a new 2TB M.2 drive plugged into the motherboard.

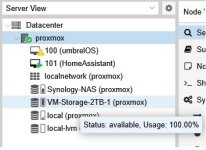

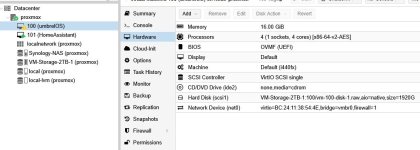

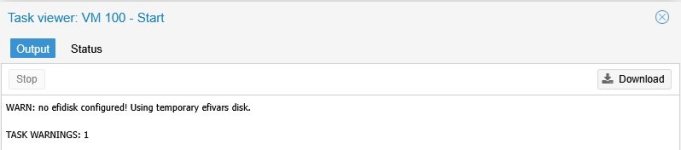

Home Assistant is installed in a VM on the 500GB disk, with 100GB assigned to it. I assigned the whole 2TB M.2 to the Umbrel VM to run a BTC node. Everything was working fine until a few days ago; now I am getting a yellow triangle on the Umbrel VM with an io-error. I have been reading this and other forums trying to work it out. I'll be honest, I am not sure where to look for the logs, but after all my reading I think it might be because the 2TB M.2 is 100% full. I cannot reboot the VM individually, and I have rebooted Proxmox, but I still see the error.

Umbrel was using about 900GB of the 2TB available. I want it to have as much of the disk as possible, but maybe I haven't left enough space for Proxmox to do anything it needs on the drive, hence the error?

Based on my reading, I changed the aio setting on the VM's hard disk to 'native', but that hasn't helped.

I don't mind removing the Umbrel VM and starting again, but I want to make sure it doesn't happen again.

Any help appreciated,

Cheers
