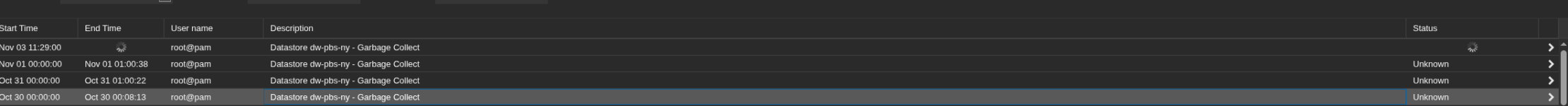

I've only noticed this since upgrading to 4.0.18. We have several nodes with backup jobs that run daily. When the backups start in the morning, every job in PBS hangs at this status:

Code:

```
2025-10-31T03:25:57-04:00: starting new backup on datastore 'dw-pbs-ny' from ::ffff:172.81.128.10: "vm/5142/2025-10-31T07:25:54Z"
2025-10-31T03:25:57-04:00: download 'index.json.blob' from previous backup 'vm/5142/2025-10-24T07:04:23Z'.
2025-10-31T03:25:57-04:00: register chunks in 'drive-virtio0.img.fidx' from previous backup 'vm/5142/2025-10-24T07:04:23Z'.
2025-10-31T03:25:57-04:00: download 'drive-virtio0.img.fidx' from previous backup 'vm/5142/2025-10-24T07:04:23Z'.
2025-10-31T03:25:57-04:00: created new fixed index 1 ("vm/5142/2025-10-31T07:25:54Z/drive-virtio0.img.fidx")
```

This results in 50+ backup jobs hanging on the PBS server. They just sit in this status forever and never time out:

The jobs on the Proxmox nodes do time out and fail:

Code:

```
INFO: Starting Backup of VM 5154 (qemu)
INFO: Backup started at 2025-10-31 02:38:47
INFO: status = running
INFO: VM Name: Server
INFO: include disk 'scsi0' 'local:5154/vm-5154-disk-0.raw' 80G
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating Proxmox Backup Server archive 'vm/5154/2025-10-31T07:38:47Z'
ERROR: VM 5154 qmp command 'backup' failed - got timeout
INFO: aborting backup job
ERROR: VM 5154 qmp command 'backup-cancel' failed - got timeout
INFO: resuming VM again
ERROR: Backup of VM 5154 failed - VM 5154 qmp command 'cont' failed - got timeout
```

Since I upgraded to 4.0.18, the PBS node gets into this 'state' almost every day. Whenever it happens, every VM I back up hangs like this, even a newly created VM with no previous backups. The only fix I've found is to reboot the node; after that, backups work normally again. This is on an S3 datastore using Backblaze B2.
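Since the PBS tasks never time out on their own, one rough way to flag the stuck ones is to check how long ago the last line of each task log was written. This is only a sketch: the helper names `last_log_entry` and `is_stalled`, the 30-minute threshold, and the idea of feeding in the raw task-log text (however you copy or export it) are my own assumptions, and PBS does not run any check like this itself.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple

# Hypothetical threshold: how long a task may go without a new log line
# before we treat it as stalled. This is an assumption, not a PBS setting.
STALL_THRESHOLD = timedelta(minutes=30)


def last_log_entry(log_text: str) -> Tuple[datetime, str]:
    """Return (timestamp, message) of the last timestamped line in a task log.

    Assumes lines look like '<ISO 8601 timestamp>: <message>', as in the
    PBS task log above. Lines without a parseable timestamp are skipped.
    """
    last: Optional[Tuple[datetime, str]] = None
    for line in log_text.splitlines():
        # The first ": " ends the timestamp; colons inside the timestamp
        # are always followed by a digit, never by a space.
        stamp, sep, message = line.strip().partition(": ")
        if not sep:
            continue
        try:
            ts = datetime.fromisoformat(stamp)
        except ValueError:
            continue  # not a timestamped line
        last = (ts, message)
    if last is None:
        raise ValueError("no timestamped lines found")
    return last


def is_stalled(log_text: str, now: datetime,
               threshold: timedelta = STALL_THRESHOLD) -> Tuple[bool, str]:
    """Return whether the log has been silent for longer than `threshold`,
    plus the last message seen. `now` must be timezone-aware because the
    log timestamps carry a UTC offset."""
    ts, message = last_log_entry(log_text)
    return now - ts > threshold, message


if __name__ == "__main__":
    sample = (
        "2025-10-31T03:25:57-04:00: download 'drive-virtio0.img.fidx' "
        "from previous backup 'vm/5142/2025-10-24T07:04:23Z'.\n"
        "2025-10-31T03:25:57-04:00: created new fixed index 1 "
        "(\"vm/5142/2025-10-31T07:25:54Z/drive-virtio0.img.fidx\")\n"
    )
    stalled, last_message = is_stalled(sample, datetime.now(timezone.utc))
    print("stalled:", stalled, "| last step:", last_message)
```

With my logs, every hung task would report `created new fixed index 1 (...)` as its last step, which at least shows they all stop at the same point, right after the index is created.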