Hello everyone,

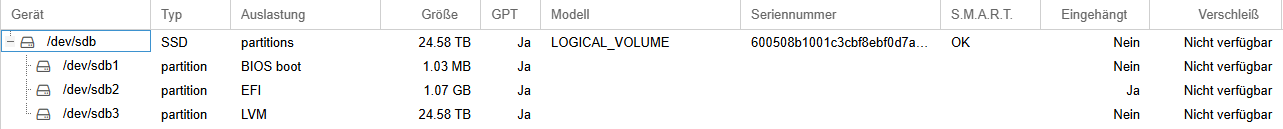

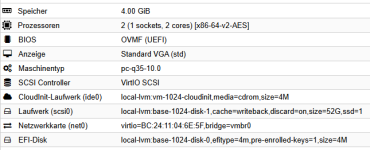

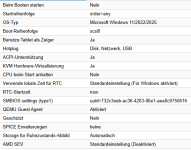

I am experiencing a reproducible issue with Proxmox VE 9.0. After a fresh installation, I am using the default LVM-Thin datastore that PVE creates during setup.

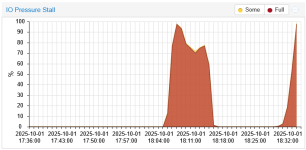

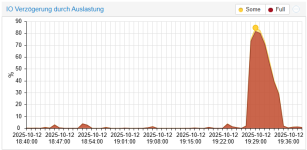

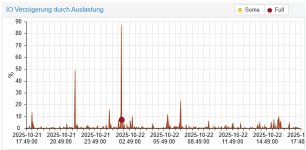

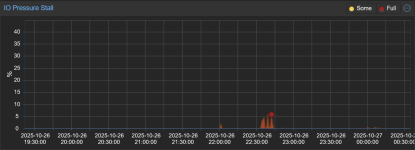

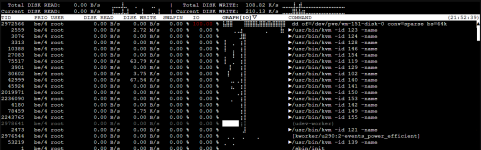

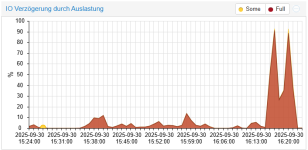

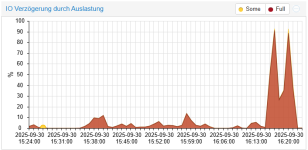

Whenever I create a full clone from a VM template (disk format is raw), the I/O delay immediately spikes to 100%. As a result, other running VMs freeze completely and no longer respond. Even the QEMU guest agent does not respond anymore. The only way to recover is to issue a Stop command on the affected VMs.
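
To put numbers on the spike, the I/O delay can also be logged from the host shell while the clone runs. Below is a minimal Python sketch. It assumes that the "IO delay" graph in the PVE GUI roughly tracks the kernel's CPU iowait counter in `/proc/stat` (Linux only). The helper names (`iowait_percent`, `monitor`, etc.) are hypothetical and not part of PVE.

```python
"""Sample the host-wide CPU iowait share from /proc/stat (Linux only)."""
import time
from typing import List


def parse_cpu_line(line: str) -> List[int]:
    """Return the jiffy counters from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    # Order: user nice system idle iowait irq softirq steal [guest guest_nice]
    return [int(x) for x in fields[1:]]


def iowait_percent(before: str, after: str) -> float:
    """Percentage of CPU time spent in iowait between two 'cpu' line snapshots."""
    b = parse_cpu_line(before)
    a = parse_cpu_line(after)
    # guest/guest_nice are already included in user/nice, so sum only the first 8 fields.
    total = sum(a[:8]) - sum(b[:8])
    if total <= 0:
        return 0.0
    return 100.0 * (a[4] - b[4]) / total


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()


def monitor(duration_s: int = 60, interval_s: float = 1.0) -> None:
    """Print one iowait sample per interval for duration_s seconds."""
    prev = read_cpu_line()
    for _ in range(int(duration_s / interval_s)):
        time.sleep(interval_s)
        cur = read_cpu_line()
        print(f"iowait: {iowait_percent(prev, cur):5.1f}%")
        prev = cur
```

Usage: start `monitor(120)` in a `python3` session on the host, then start the full clone from the GUI and watch whether the iowait share climbs toward 100%.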

Steps to reproduce:

- Fresh install of PVE 9.0

- Keep the default LVM-Thin datastore

- Create a VM template

- Perform a full clone from the template (disk = raw)

- Result: I/O delay spikes to 100% → other VMs freeze → only “Stop” helps

Additional details:

- The problem occurs on multiple reinstalled hosts, always with the same result.

- No special configuration changes were made to storage or VMs.

- I am using a full clone, not a linked clone.

- Disk format is raw.
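
For anyone trying to reproduce this from the CLI instead of the GUI, the template and full-clone steps map to `qm template` and `qm clone --full`. Below is a minimal Python sketch that prints (and optionally runs) those commands. The VM IDs and clone name in the usage line are hypothetical placeholders, and `repro_commands`/`run` are illustrative helpers, not a PVE API.

```python
"""Build the qm commands for the reproduction steps (hypothetical helper)."""
import shlex
import subprocess
from typing import List


def repro_commands(template_id: int, clone_id: int, clone_name: str) -> List[List[str]]:
    """Return argv lists: convert a VM to a template, then make a full clone of it."""
    return [
        # "Create a VM template": converts an existing, stopped VM into a template.
        ["qm", "template", str(template_id)],
        # "Perform a full clone": --full 1 copies the whole disk instead of linking it.
        ["qm", "clone", str(template_id), str(clone_id),
         "--name", clone_name, "--full", "1"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("$", shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)
```

Usage: `run(repro_commands(9000, 101, "clone-test"))` only prints the commands; pass `dry_run=False` on the PVE host to actually execute them.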

Any suggestions or workarounds would be greatly appreciated.

Thanks in advance!