That is a very good question, and my testing so far indicates the wisdom that led me to consider this is backwards. Assigning the VM to use an e1000e nic made all the problems go away for me. I'll do further testing later. But using the VirtIO option seems to me to be what was causing the crashes. Although I did disable TSO as well, curious if you would experience no problems just using the e1000e nic without doing any further changes. Please let us know!

So I'm planning to rebuild my Home Assistant server over the weekend, using Proxmox so that I can build HAOS in a VM and be considered "supported" by HA (today I run HA supervised, but it's fallen out of favour for prod builds). My HA server is an Intel NUC (NUC8I3BEH 8th Gen Core i3). The NIC shows up as:

Code:

% lspci -v | grep Ethernet
00:1f.6 Ethernet controller: Intel Corporation Ethernet Connection (6) I219-V (rev 30)
Subsystem: Intel Corporation Ethernet Connection (6) I219-V

I assume, therefore, that I am going to join the club of e1000e driver hangs, which doesn't fill me with joy (the NIC has been 100% stable running bare metal for the last 6 years).

My question: can I avoid this grief if I use the VirtIO network driver instead of Intel E1000? I'm not doing anything fancy with networking - no VLANs or weird IP config. I'll just have separate IP addresses for the host and each of the VM guests. I do have Gbps fibre from my ISP, so would like to maintain full Gbps performance.

[SOLVED] Intel NIC e1000e hardware unit hang

- Thread starter md127


OK - that's super interesting. And I've twigged that perhaps I've missed the point a bit. I was thinking of this as a problem that materialised on VM guests, but now I'm thinking the workaround (disabling GSO / TSO) is probably applied to the host, not the guests. This means the problem must be a complex interaction between config of the interface on the host and network driver chosen for the guests. Ouch.

In any case, I'll certainly share my experience. Although I have to confess I am considering alternatives to Proxmox, if it means I can avoid this issue. It occurs to me that Proxmox is arguably far more capable than I need (two VM guests with super-vanilla config).


Don't let me talk you out of it. Two hours of iperf3 so far without issues. And just now, I edited the config file to be as the last poster suggested, or at least similar, and I'm going to test it again. I'm now testing:

Code:

auto lo
iface lo inet loopback

iface eno1 inet manual
# Disable offloads for e1000e driver issues
# post-up /sbin/ethtool -K eno1 tso off gso off gro off
    post-up ethtool -K eno1 tso off

auto vmbr0
iface vmbr0 inet static
    address 10.10.10.1/24
    gateway 192.168.1.1
    bridge-ports eno1
    bridge-stp off
    bridge-fd 0
    post-up ethtool -K vmbr0 tso off

# iface wls3f3 inet manual
# Wireless interface (Wi-Fi client)
auto wls3f3
iface wls3f3 inet dhcp
    wpa-ssid "backup"
    wpa-psk "backup"

source /etc/network/interfaces.d/*

and I'm not experiencing the same issues so far. I'll let it run iperf3 overnight and see what happens.
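To check that the post-up lines actually took effect after a reboot, the offload state can be read back with `ethtool -k` (lowercase k). A minimal sketch, assuming the interface names from the config above; `offloads_still_on` is a hypothetical helper name, not something from ethtool itself:

```shell
#!/bin/sh
# Hypothetical helper: reads `ethtool -k <iface>` output on stdin and prints
# the name of each of the three offloads discussed here that is still "on".
offloads_still_on() {
    awk -F': *' '
        $1 == "tcp-segmentation-offload" ||
        $1 == "generic-segmentation-offload" ||
        $1 == "generic-receive-offload" {
            # The value may carry a suffix such as "[fixed]"; keep the first word.
            split($2, state, " ")
            if (state[1] == "on") print $1
        }'
}
```

Running `ethtool -k eno1 | offloads_still_on` (and the same for vmbr0) should print nothing once tso, gso and gro are all off; with only `tso off` applied, the gso and gro lines would be expected to show up.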

I am stable with all updates installed. At least for the moment. I am using the offloading fix.

But to be sure, I bought two of these gadgets: https://store-eu.gl-inet.com/products/comet-gl-rm1-remote-keyboard-video-mouse

(They have a global and a US store)

Nice but can we be certain there is no hidden backdoor (even without using the cloud option)? Is the software open source and can it be checked by a community and compiled yourself? If I would use this, I would lock it down extremely tight. No traffic to the internet, for instance. I would make sure on my router/fw/gateway it only reacts to a few internal IP addresses, including a few that get handed out when I connect with VPN to my network (for remote access). Opening up physical access to the outside world is a really, really, REALLY, creepy thing, however nice it is.

And even locked down, what if I connect using a web interface and that web interface that is running on the client machine connects to the outside world as well through whatever is on the web page getting around my nicely locked down setup...?


Just to update you guys.

If you experienced this issue before, other than just waiting and seeing if the problem was fixed, would you recommend any tests or benchmarks that would crash a nic with the hardware hang? How can I test it before I put it in a production environment, so to speak? It seems the windows 10 VM running a VirtIO nic and iperf3 was reliably able to make it crash previously, but after the changes, so far, so good. I might call it done after a few more days of testing.

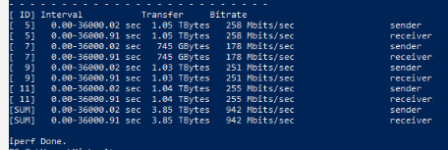

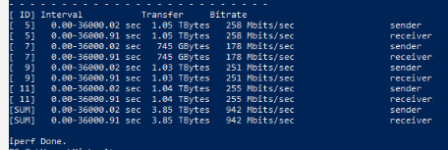

- Ubuntu VM with an e1000e nic successfully ran IPerf3 for 10 hours at gigabit speeds with 0 retransmissions and no crashing.

- Windows 10 VM with a VirtIO nic has been running IPerf3 for 8 hours at gigabit speeds. No stats until it's done.


I would certainly lock it down HARD too.

What makes that different from all the existing server IPMIs that are already on most decent server motherboards?

IMHO - Browser control is mostly on you.

That said:

I have been eyeballing this one a bit, and they just released a PoE model too.

But I think they're a bit pricey for their functionality.

The one mentioned above has a "small dataflash", which might not hold an install ISO.

The PoE model has a bigger "ok" dataflash, but the price went up a lot, considering we're just talking about a ~$6-10 increase in hardware cost.

Might running an iperf3 on PVE itself concurrently with VM(s) be a good test? This would cover the client VMs running network traffic concurrently with, for instance, running backups of clients on the host. Also, running iperf3 in both directions?

After this VM finishes up this Iperf3 10 hour test, I'll do another test with multiple VMs running Iperf3 at the same time, as well as the proxmox host. My best settings so far are the ones I last posted in this thread, with whatever miscellaneous changes I may have made elsewhere.
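For the multi-client, both-directions run described above, something like the following could generate the client commands. It's only a sketch: `soak_commands` is a made-up helper, 192.0.2.10 stands in for whatever machine runs `iperf3 -s`, and it only prints the commands rather than running them. `-t` takes seconds and `-R` reverses the direction so the server sends.

```shell
#!/bin/sh
# Hypothetical helper: prints one iperf3 client command per direction.
# $1 = iperf3 server address (assumption), $2 = test length in hours.
soak_commands() {
    server=$1
    hours=$2
    seconds=$((hours * 3600))
    # Client sends to server (default direction).
    printf 'iperf3 -c %s -t %s\n' "$server" "$seconds"
    # Reverse mode: server sends to client.
    printf 'iperf3 -c %s -t %s -R\n' "$server" "$seconds"
}
```

`soak_commands 192.0.2.10 10` prints the two 10 hour commands; run one set on the PVE host and one in each VM at the same time. An iperf3 server handles one test at a time, so each concurrent client needs its own server instance (a different `-p` port on both ends). Newer iperf3 builds (3.7 and later, as far as I know) also have `--bidir` to do both directions in a single run.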


(I ran into this also quite suddenly earlier this year after using PVE since 2022 with a very simple setup: single PVE+PBS host, single Ubuntu VM (lshw says I am running the Virtio network device). I haven't had the problem anymore after turning off the hardware offloading with "post-up /sbin/ethtool -K eno1 tso off gso off" on the PVE host)


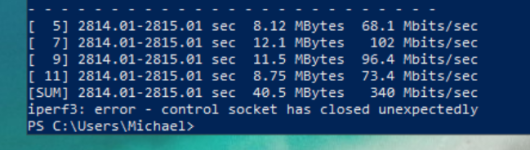

Over the extended test with the windows VM iperf3 experienced an issue, but proxmox and the VMs are all still responsive.

On the win10 client with a VirtIO nic I got:

iperf3: error - control socket has closed unexpectedly

On the server, I got: (remember not the proxmox server, a different server in the network)

iperf3: error - select failed: Bad file descriptor

So far this has solved the issue for the most part. I'll keep trying to see if VirtIO can pass a 10+ hour iperf3 test. I might be sticking to e1000e nic assignments in proxmox, we'll see.


Update: Iperf3 completed on the Windows VM with a VirtIO nic. First failure must've been a fluke.

After the change, I have not been able to get it to fail as quickly or in the same way as before. I have not yet experienced a "hardware hang" on the proxmox host in the same way.

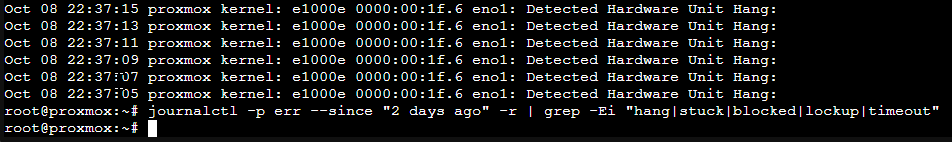

In the proxmox shell when I search:

Code:

journalctl -p err --since "2 days ago" -r | grep -Ei "hang|stuck|blocked|lockup|timeout"

I'm now greeted with no results over the last 2 days.

So, it's looking hopeful. Firing up multiple iperf3 instances across virtual machines now.
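Since the hang message repeats every couple of seconds once the NIC is stuck, a per-day tally can be easier to read than raw grep output. A rough sketch, assuming journalctl's default timestamp format ("Oct 13 07:03:13 ...") and the exact "Detected Hardware Unit Hang" wording the e1000e driver logs; `hangs_per_day` is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: reads kernel log lines on stdin and prints
# "<month> <day> <count>" for each day with at least one hang message.
hangs_per_day() {
    awk '/Detected Hardware Unit Hang/ { count[$1 " " $2]++ }
         END { for (day in count) print day, count[day] }' | sort
}
```

Usage would be something like `journalctl -k --since "2 days ago" | hangs_per_day`. No output means no hang lines in that window. The sort is plain text order, so months will not come out chronologically.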

Great to hear you have been having some success. So I went ahead with my HA server rebuild. I decided to go with proxmox and just see how it goes. I got two solid days before I got any grief, but this morning the NIC hung, and I had to reboot the machine to get it back up again. Yay, I've joined the club.


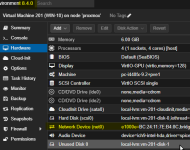

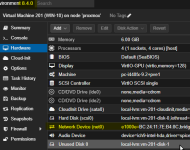

Config at this point:

- Proxmox 9

- HAOS 16.2 in VM

- Debian 13 in VM

- Intel NUC (NUC8I3BEH 8th Gen Core i3)

- NIC: I219-V (rev 30)

- virtio network devices in VMs

- No ethtool modifications

- ASPM features turned off in BIOS

What's interesting is that I gave the NIC a pretty heavy workout during the build and it didn't miss a beat. When it hung this morning, there really wasn't anything interesting happening.

For the record - here is my hang error. These appear in the logs every 2 seconds until I rebooted the machine.

Bash:

# journalctl -b -1 -kg "hardware unit hang|e1000"
Oct 13 07:03:13 proxmox kernel: e1000e 0000:00:1f.6 eno1: Detected Hardware Unit Hang:
  TDH <7b>
  TDT <28>
  next_to_use <28>
  next_to_clean <7a>
buffer_info[next_to_clean]:
  time_stamp <10828fd8d>
  next_to_watch <7b>
  jiffies <1082bc700>
  next_to_watch.status <0>
MAC Status <40080083>
PHY Status <796d>
PHY 1000BASE-T Status <3c00>
PHY Extended Status <3000>
PCI Status <10>

I've decided to begin with the minimalist approach per your suggestion. Just:

Bash:

# ethtool -K eno1 tso off
# ethtool -K vmbr0 tso off

Which I've only run on the host so far. I'll worry about making it permanent another day. And I will probably order a USB ethernet adapter because my patience for this kind of nonsense is pretty limited. Suggestions welcome for a known good adapter (it needs to be USB-A; 1 Gbps is fine).
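For anyone trying to read the hang dump above: TDH and TDT are the transmit descriptor head and tail, in hex, so the gap between them is how many descriptors were queued but not yet processed when the hang was detected. A small sketch of that arithmetic, assuming a TX ring of 256 entries (I believe that is the e1000e default, but `ethtool -g eno1` would show the real value); `pending_tx_descriptors` is just an illustrative name:

```shell
#!/bin/sh
# Hypothetical helper: number of TX descriptors between head (TDH) and
# tail (TDT), wrapping around the ring.
# $1 = TDH in hex, $2 = TDT in hex, $3 = ring size (assumed 256 if omitted).
pending_tx_descriptors() {
    head=$1
    tail=$2
    ring=${3:-256}
    echo $(( (0x$tail - 0x$head + ring) % ring ))
}
```

With the values from the dump, `pending_tx_descriptors 7b 28` gives 173 under the 256-entry assumption. That only describes how much was backed up at that moment; it doesn't say why the hardware stopped.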


^ That's very interesting. I've been downloading a Kali Everything torrent, without issue. 12+ GB, but it's not stressing it as far as speed goes. I'll do more testing with iperf3 later.

It may be of interest that our mac statuses and 1000BASE-T statuses are different. Not sure if that indicates in any way the fix that would be required. I hope to continue having positive results.


BTW, neponn, I should have mentioned, if you have an extra m.2 slot, perhaps an m.2 to network adapter would work. Anything should be better than a USB adapter. However, I have also heard less than positive reviews about many m.2 network adapters, so YMMV.

Also in my case I was able to put wifi up as a backup. If you have a reverse proxy between you and the instance you should be able to configure it so that it tries to go through the ethernet port, but it will fall back to the wifi IP address as needed. IIRC many NUCs have built in wifi. Unfortunately, they're integrated into the MOBO sometimes, so you can't swap them out with another m.2 device.

I'm not sure how you would configure mqtt settings per device, as I'm not sure if you can put a host name vs. an IP address into certain devices like Tasmota flashed smart plugs. On my network I use nginx proxy manager as a reverse proxy running on a Raspberry Pi. Not all home assistant devices need to be configured to tell it the HA MQTT IP address, so you might luck out depending on the devices you have.

Also I was experimenting at home running HA on a proxmox host, but I found it much easier and more power efficient to just put a "managed" instance on a raspberry pi 5. Usually idles ~6 watts, and HA OS offers easy frigate installation & setup. Just running it on a proxmox host ate up a minimum of 10 watts just to have it idling. So even when you already have proxmox up and running it might be more efficient to just use the pi. Currently the most energy efficient proxmox hardware I have ever found (with ecc ram) idles with no VMs running right around 10-15 watts.

This is with no GPU, two ecc ddr4 ram sticks, and a few more caveats. But you just can't beat the pi's efficiency with most x86/x64 hosts.


Thanks mr.hollywood - some great comments there. Sadly, I don't have another m.2 slot, so it is USB or bust. WiFi fallback is interesting, although trying to keep a consistent IP address at fallback sounds challenging - maybe it can be done with clever scripting (probably a bit beyond my capability).

Pi is also an interesting suggestion. I originally invested in the NUC, because I outgrew the Pi3 I was running on at the time: heavy MySQL and vscode loads were too much for it. But time has passed, and you make a great point that a Pi5 is probably quite comparable with the (now old) NUC I have. I'll give it some thought.


I'm happy to report that proxmox can handle iperf3 tests now for hours at a time without the hardware lockup. This was between two VMs (one Windows, one Ubuntu), the proxmox VE host, and 3 other devices on my network.

Now, I'm going to try and find some other way of testing it. It would sometimes lock up when not under a load before, so I'll report back if I experience any further issues or require more fixes.

Not to get too sidetracked, the only drawback of the pi5 for home assistant is you'll have to figure on adding a minimum of $60 to the purchase price for a case, nvme hat, and a cheap drive. You can rough it out with a 3d printed case and a microsd card, but you can begin to feel the difference in speed between the two. I just upgraded from microsd to nvme on my instance, and the improvement was tremendous. That, and you'll want to spring for an 8gb+ pi if you want to use frigate or something else with larger AI models. The 4gb runs out of memory fast when AI becomes involved.

I've been considering pimox7 on a 16gb pi5, as I'd love an Arm64 virtualization environment too. Home Assistant doesn't consume lots of CPU on the pi5 so it could definitely share resources with other services, but certain projects like frigate recommend running bare metal for performance reasons.


That's very encouraging. On the Pi5, a cursory glance at Geekbench 6 results suggests that my NUC is still slightly ahead of the Pi5; it's just a question of power consumption - ARM has it all over Intel in that respect. So I don't think I'll bother changing now, but an interesting data point for the future.

Unfortunately my windows VM cannot pass an extended iperf3 test yet with the virtio nic. It always fails right towards the end with the message:

I'll try again with an e1000e nic specified. The UBUNTU vm never experiences a problem, and neither does the proxmox host.

The problem could be something else, but I have only had this error with the VirtIO nic specified.


Humph. Another hang. Once again, not much network activity at the time. So tso off isn't enough. Now trying tso off gso off gro off. USB ethernet adapter on the way (Axagon ADE-SR).

Out of curiosity - has anyone found a way to reset the hung e1000 controller without rebooting the system? Wondering whether a supervisor script could be developed that detected a hang and took action to reinstate the controller? Given how infrequently hangs occur, this could be workable...
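On the supervisor script idea, here is an untested sketch of what it might look like. Big assumptions: that bouncing the link with `ip link set ... down/up` is enough to bring a hung I219-V back (nobody in this thread has confirmed that; a driver reload or a reboot may still be needed), and that the interface is eno1. The function names are made up. It defaults to a dry run that only prints what it would do.

```shell
#!/bin/sh
# Untested sketch. IFACE and the recovery steps are assumptions.
IFACE=${IFACE:-eno1}
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = run them

# Succeeds if the kernel log text on stdin contains an e1000e hang line.
hang_seen() {
    grep -q "e1000e.*Detected Hardware Unit Hang"
}

# Bounce the link; whether this clears a real hang is unverified.
recover() {
    for cmd in "ip link set $IFACE down" "ip link set $IFACE up"; do
        if [ "$DRY_RUN" = 1 ]; then
            echo "would run: $cmd"
        else
            $cmd
        fi
    done
}

# Reads recent kernel log lines on stdin and recovers only if a hang shows up.
check_and_recover() {
    if hang_seen; then
        recover
    fi
}
```

The idea would be to call it from cron or a systemd timer every couple of minutes, e.g. `journalctl -k --since "2 minutes ago" | check_and_recover`, with DRY_RUN=0 set in the script once the dry-run output looks sane. The lookback window should match the timer interval so one hang doesn't trigger repeated resets.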

^ Out of curiosity neponn, can you post your Hardware Unit Hang error from today and see if it's any different than before?

I'm not seeing any documentation about the MAC or PHY statuses, but with enough information it might help lead us down the right path. I'm wondering if even one bit in the MAC or PHY status can indicate which settings need to change.
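A partial decode of the MAC Status word is possible from the Linux e1000e driver headers, which define bit 0 as full duplex (E1000_STATUS_FD), bit 1 as link up (E1000_STATUS_LU), and bits 6-7 as speed (0x40 = 100, 0x80 = 1000). A sketch covering only those bits; `decode_mac_status` is a made-up name and the remaining bits are left alone because I can't vouch for their meaning on the I219-V:

```shell
#!/bin/sh
# Hypothetical helper: decodes link, duplex and speed from a MAC Status
# value given in hex without the 0x prefix. Other bits are ignored.
decode_mac_status() {
    status=$(( 0x$1 ))
    if [ $(( status & 0x1 )) -ne 0 ]; then duplex=full; else duplex=half; fi
    if [ $(( status & 0x2 )) -ne 0 ]; then link=up; else link=down; fi
    case $(( status & 0xC0 )) in
        0)   speed=10Mb/s ;;
        64)  speed=100Mb/s ;;
        128) speed=1000Mb/s ;;
        *)   speed=unknown ;;
    esac
    echo "link=$link duplex=$duplex speed=$speed"
}
```

For the 40080083 value posted earlier, this reports the link as up at gigabit full duplex at the time of the hang. That narrows things a little (the link itself hadn't dropped), but on its own it doesn't point to a specific setting to change.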


After switching the network hardware for the VM to e1000e, it can complete an extended iperf3 session

Here's what I had to do for the windows VM:

Going to fire up another test of the windows VM to the same iperf3 server to ensure stability. It seems I always had the most issues using the VirtIO network devices for the VMs.


I'm happy to report that switching every VM to use an e1000e nic has resolved 100% of the problems. I no longer experience hardware hangs, or iperf3 failures on extended runs.

Admittedly my data points are still rather limited (only two 10 hour runs succeeded with the e1000e nics on the windows VM) but, it has given me enough faith to begin deployment with a "wait and see" attitude. Fingers crossed no more issues, if I experience anything I'll let you know.
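For anyone who prefers the shell to the web UI for switching a VM's NIC model, the change can presumably be made with `qm set`. A sketch that only prints the command, assuming VM ID 101 (hypothetical) and the vmbr0 bridge from the config earlier in the thread; `nic_switch_command` is an illustrative name:

```shell
#!/bin/sh
# Hypothetical helper: prints the qm command that would set net0 to the
# e1000e model. $1 = VM ID, $2 = bridge (assumed vmbr0 if omitted).
nic_switch_command() {
    vmid=$1
    bridge=${2:-vmbr0}
    printf 'qm set %s --net0 e1000e,bridge=%s\n' "$vmid" "$bridge"
}
```

`nic_switch_command 101` prints the command to review before running it on the PVE host. As far as I know, leaving out the MAC address makes Proxmox generate a new one, so the guest may treat it as a brand new adapter; check the existing net0 line in `qm config <vmid>` first if the MAC needs to stay the same.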
