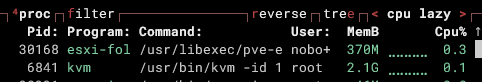

Okay, I'll preface this by saying I don't know Rust, but if I'm reading this correctly, the ESXi import can only make 4 requests at once when pulling data.

This is what I think is happening:

It starts with the `make_request` function:

```rust
async fn make_request<F>(&self, mut make_req: F) -> Result<Response<Body>, Error>
where
    F: FnMut() -> Result<http::request::Builder, Error>,
{
    let mut permit = self.connection_limit.acquire().await;

    // ....
}
```

Note the call to `self.connection_limit.acquire()`. I believe that takes a permit from the `ConnectionLimit` defined here:

```rust
impl ConnectionLimit {
    fn new() -> Self {
        Self {
            requests: tokio::sync::Semaphore::new(4),
            retry: tokio::sync::Mutex::new(()),
        }
    }

    /// This is supposed to cover an entire request including its retries.
    async fn acquire(&self) -> SemaphorePermit<'_> {
        // acquire can only fail when the semaphore is closed...
        self.requests
            .acquire()
            .await
            .expect("failed to acquire semaphore")
    }
}
```

If I'm reading this correctly, that semaphore is hard-coded to 4 permits, so at most 4 requests can be in flight at once.
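To check my understanding of what a 4-permit semaphore does, here's a rough sketch of the same idea using only the Rust standard library (threads plus a `Mutex`/`Condvar` counting semaphore) instead of tokio's async `Semaphore`. The `Semaphore`, `Permit`, and `max_in_flight` names are my own, not from the import tool, and the 20 ms sleep just stands in for network latency. Each task holds its permit for the whole simulated request, the way the comment on `acquire` says the real permit covers a request including its retries.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Condvar, Mutex};
use std::thread;
use std::time::Duration;

/// Hypothetical stand-in for tokio's Semaphore: a counting semaphore
/// built from a Mutex-protected counter and a Condvar.
struct Semaphore {
    available: Mutex<usize>,
    cond: Condvar,
}

/// RAII permit: the slot is handed back in Drop, similar in spirit to
/// tokio's SemaphorePermit.
struct Permit<'a> {
    sem: &'a Semaphore,
}

impl Semaphore {
    fn new(permits: usize) -> Self {
        Semaphore { available: Mutex::new(permits), cond: Condvar::new() }
    }

    /// Blocks until a permit is free, then takes it.
    fn acquire(&self) -> Permit<'_> {
        let mut available = self.available.lock().unwrap();
        while *available == 0 {
            available = self.cond.wait(available).unwrap();
        }
        *available -= 1;
        Permit { sem: self }
    }
}

impl Drop for Permit<'_> {
    fn drop(&mut self) {
        *self.sem.available.lock().unwrap() += 1;
        self.sem.cond.notify_one();
    }
}

/// Runs `tasks` simulated downloads with at most `permits` in flight
/// and returns the highest concurrency actually observed.
fn max_in_flight(permits: usize, tasks: usize) -> usize {
    let sem = Arc::new(Semaphore::new(permits));
    let in_flight = Arc::new(AtomicUsize::new(0));
    let peak = Arc::new(AtomicUsize::new(0));

    let handles: Vec<_> = (0..tasks)
        .map(|_| {
            let (sem, in_flight, peak) = (sem.clone(), in_flight.clone(), peak.clone());
            thread::spawn(move || {
                let _permit = sem.acquire(); // held for the whole "request"
                let now = in_flight.fetch_add(1, Ordering::SeqCst) + 1;
                peak.fetch_max(now, Ordering::SeqCst);
                thread::sleep(Duration::from_millis(20)); // pretend network latency
                in_flight.fetch_sub(1, Ordering::SeqCst);
                // `_permit` is dropped when this closure returns, freeing the slot.
            })
        })
        .collect();

    for h in handles {
        h.join().unwrap();
    }
    peak.load(Ordering::SeqCst)
}

fn main() {
    println!("peak concurrency with 4 permits: {}", max_in_flight(4, 16));
}
```

No matter how many tasks you start, the peak never goes above the permit count, which is why changing that one number would be the knob to test.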

This is from the PVE-ESXI-IMPORT-TOOLS repo

https://git.proxmox.com/?p=pve-esxi-import-tools.git;a=summary

And I'm guessing the `download_do` function picks the next chunk of data to pull and requests it through `make_request`, adding an HTTP `range` header when a byte range is given:

```rust
async fn download_do(
    &self,
    query: &str,
    range: Option<Range<u64>>,
) -> Result<(Bytes, Option<u64>), Error> {
    let (parts, body) = self
        .make_request(|| {
            let mut req = Request::get(query);
            if let Some(range) = &range {
                req = req.header(
                    "range",
                    &format!("bytes={}-{}", range.start, range.end.saturating_sub(1)),
                )
            }
            Ok(req)
        })
        .await?
        .into_parts();

    // ...
}
```
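When a range is passed, that header asks the server for just that slice of the file. Here's a small sketch of how a half-open Rust `Range` (`start..end`) maps onto an HTTP byte range, which is inclusive at both ends and is why the code subtracts 1 from `end`. The `chunk_ranges` helper and the 4096-byte chunk size are made up for illustration; I haven't checked how the real tool sizes its chunks.

```rust
use std::ops::Range;

/// Formats a half-open byte range as an HTTP Range header value.
/// HTTP byte ranges include the last byte, hence `end - 1`, mirroring
/// the `saturating_sub(1)` in `download_do`.
fn range_header(range: &Range<u64>) -> String {
    format!("bytes={}-{}", range.start, range.end.saturating_sub(1))
}

/// Hypothetical helper: split a file of `total` bytes into consecutive
/// chunks of at most `chunk` bytes, one request per chunk.
fn chunk_ranges(total: u64, chunk: u64) -> Vec<Range<u64>> {
    assert!(chunk > 0, "chunk size must be non-zero");
    (0..total)
        .step_by(chunk as usize)
        .map(|start| start..(start + chunk).min(total))
        .collect()
}

fn main() {
    // Prints bytes=0-4095, bytes=4096-8191, bytes=8192-9999
    for r in chunk_ranges(10_000, 4096) {
        println!("range: {}", range_header(&r));
    }
}
```

Each of those ranges would be a separate `make_request` call, and each call has to get one of the 4 permits first.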

They may have picked a deliberately low number for the import just to be on the safe side, similar to how backup jobs to PBS were originally handled a few months ago.

Again, if I'm reading this right, one test would be to change the 4 to 6 or 8 and see whether the import speed scales with the number of concurrent requests.
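As a rough way to think about what that test might show (this model is my assumption, not something from the repo): if each request spends most of its time waiting on round trips rather than filling the link, throughput should grow roughly in proportion to the permit count until it hits the network or the ESXi host's limit. The numbers below (1 MiB per request, 50 ms per request, ~110 MiB/s cap) are purely hypothetical.

```rust
/// Back-of-envelope estimate: `permits` requests in flight, each moving
/// `chunk_mib` MiB in `secs_per_request` seconds, capped by the link.
fn estimated_mib_per_sec(permits: u32, chunk_mib: f64, secs_per_request: f64, link_cap_mib: f64) -> f64 {
    let uncapped = permits as f64 * chunk_mib / secs_per_request;
    uncapped.min(link_cap_mib)
}

fn main() {
    // Hypothetical numbers only; plug in what you actually measure.
    for permits in [4, 6, 8] {
        println!(
            "{permits} permits -> ~{:.0} MiB/s",
            estimated_mib_per_sec(permits, 1.0, 0.05, 110.0)
        );
    }
}
```

If the real speed stays flat going from 4 to 8, that would suggest the bottleneck is somewhere else (disk, CPU, or the ESXi side) rather than the request limit.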