Hi.

We have a PowerEdge that we installed in the hope of abandoning ESXi for Proxmox, but it seems to have performance issues under relatively light load. Can anyone recommend different hardware going forward (something that's working better for them)? Or are there Proxmox configuration issues that I can investigate?

Dell PowerEdge R740XD

Processors: 2 x 18-core Xeon Gold 6140 2.3GHz

12 x 32GB PC4-21300-R ECC (DDR4-2666) (384GB Total)

2x500GB, 8x4TB

RAID Controller: PERC H740P 8GB cache

(All drives are SSD, the 500GB are the OS and the 4TBs are for the guests.)

Initially we installed this with ZFS, but we found that backup restoration performance was untenable (we do not have Proxmox Backup Server). Restoring a backup of one virtual machine would freeze some or all of the other VMs for 40-45 minutes; load ran away on the Proxmox host, and on a couple of occasions we had to reboot it. The backups are stored on an external box, but the freeze did not happen during the 1%, 2%, 3% file-transfer phase; it happened during the long hang that follows 100%. Messing with "bandwidth" only seemed to slow down the transfer phase. There were also some lesser stalls while taking backups and restoring snapshots. (And frankly, ZFS is confusing.)

We wiped it and started over, relying on the PERC's hardware RAID instead (as I recall, to make ZFS work we had to set "Enhanced HBA" to bypass the Dell PERC card's RAID).

Now, using .qcow2, I have begun migrating Windows 10 remote-desktop boxes (by copying them from ESXi) and upgrading them to Windows 11, which our ESXi does not natively support. Restoring a backup seemed to freeze my Windows 11 test user for 30 to 40 seconds, which is not great during business hours but is definitely more workable than 40 minutes and a possible reboot. (Snapshots also work much more nicely with .qcow2, in that they allow hopping around between them.) Procedurally, everything else works fine, but now I am hearing rumblings about the performance of the VMs.

Currently there are 16 VMs on this Proxmox.

4 - Windows 11s with regular use

1 - Windows 10 with regular use (starting today though)

4 - Windows 11s with little or no use (test installs and migrations of retired or only occasionally used machines, used to try out the upgrade from Windows 10)

1 - Windows 2019 SQL server (unused, no databases, also to test migration)

1 - Rocky server (our virus scanner's network controller) (it sees very little use but presumably does some stuff in the background)

4 - Ubuntu server tests (minimal use, but use)

1 - Ubuntu actual server (but our least used, chosen for that as a test)

The Windows 10 and 11 users are now complaining about the performance of their boxes, in ways that look to them like system-resource problems (windows take a long time to open, Task Manager never comes up, etc.). The complaints line up particularly with migrations of new boxes (which I now do after hours), but their new VMs are also generally performing worse than their old Windows 10 versions did on ESXi 6, which ran on a fairly similar box:

PowerEdge R730xd

2 x 14-core E5-2695v3 2.20GHz Xeon

16 x 16GB PC4-17000 (DDR4-2133) (256GB Total)

12 x 2TB SSD SATA 2.5" 6Gb/s

RAID Controller: PERC H730P Mini 2GB Cache

(And I was hoping to be able to empty that ESXi box on to this Proxmox machine, then wipe it, and put Proxmox on it too.)

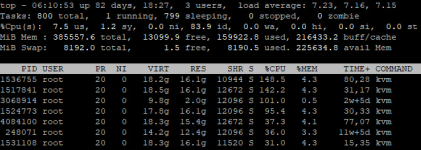

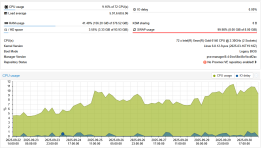

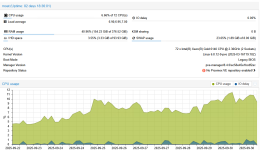

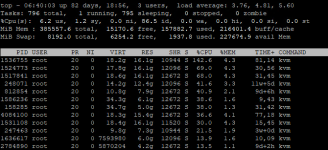

With no one in yet this morning, I see load averages of about 7 across the board in top. When that gets to 12-16 or so, I start getting complaints.
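A load figure is easier to judge relative to the number of logical CPUs on the host. The following is a minimal sketch, not a diagnosis: `load_per_cpu` is a hypothetical helper name, and the 72 in the usage note assumes Hyper-Threading is enabled on the two 18-core CPUs (which the post does not state).

```shell
# Print the three load averages plus the 1-minute load divided by the
# number of logical CPUs.
#   $1 = loadavg file (default /proc/loadavg)
#   $2 = logical CPU count (default: whatever nproc reports)
load_per_cpu() {
    loadfile=${1:-/proc/loadavg}
    cpus=${2:-$(nproc)}
    awk -v n="$cpus" '{
        printf "1min=%.2f 5min=%.2f 15min=%.2f per-cpu(1min)=%.3f\n", $1, $2, $3, $1 / n
    }' "$loadfile"
}
```

Running `load_per_cpu` with no arguments on the host reads the live values; `load_per_cpu /proc/loadavg 72` forces the assumed thread count. On Linux the load average also counts tasks blocked in uninterruptible I/O, so a high figure with a low per-CPU ratio may point at storage or swap rather than CPU.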

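While writing this I noticed that one of those Ubuntu test servers (which I hadn't looked at in months) was in a kernel panic, using 13% of its CPU and, more importantly, 6GB of the host's 8GB of swap (Proxmox's swap, not the guest's own). Turning that VM off brought the host's swap usage, and apparently the load, down. It was PID 3068914 in this per-process swap listing:

for f in /proc/*/status; do awk '/^Name:/ {name=$2} /^Pid:/ {pid=$2} /^VmSwap:/ {swap=$2} END{ if(!swap) swap=0; print pid, name, swap }' "$f"; done | sort -k3 -n -r | awk '$3>0 {printf "%s %s %s kB\n",$1,$2,$3}'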

3068914 kvm 6383808 kB

4084100 kvm 730368 kB

247463 kvm 628956 kB

2784890 kvm 122688 kB

1804 pve-ha-crm 88128 kB

1989 pvescheduler 72000 kB

1814 pve-ha-lrm 57600 kB

2249270 pvedaemon 49968 kB

2195454 pvedaemon 49968 kB

3614976 pvedaemon 49536 kB

2092611 pvedaemon 47692 kB

1772 pvestatd 44928 kB

1768 pve-firewall 41472 kB

247554 kvm 20736 kB

158285 kvm 17044 kB

250795 kvm 16128 kB

248071 kvm 13824 kB

812854 kvm 10944 kB

1955101 kvm 5760 kB

1536755 kvm 4032 kB

1636617 kvm 2304 kB

1622629 (sd-pam) 704 kB

2026 esxi-folder-fus 576 kB

1652 pmxcfs 576 kB
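The listing above shows only `kvm` PIDs, which then have to be matched to guests by hand. A minimal sketch of a per-VM variant follows, assuming the host keeps one `<vmid>.pid` file per running guest under /var/run/qemu-server (the usual location on Proxmox VE, but worth verifying on your version). `swap_by_vm` is a hypothetical helper name, and both directories are parameters so it can be tried against test data.

```shell
# List swap usage per running VM, largest first.
#   $1 = directory holding <vmid>.pid files (assumed default below)
#   $2 = proc filesystem root (default /proc)
swap_by_vm() {
    piddir=${1:-/var/run/qemu-server}
    procroot=${2:-/proc}
    for pidfile in "$piddir"/*.pid; do
        [ -f "$pidfile" ] || continue          # no pid files: nothing to report
        vmid=$(basename "$pidfile" .pid)
        pid=$(cat "$pidfile")
        status="$procroot/$pid/status"
        [ -r "$status" ] || continue           # stale pid file: skip it
        swap=$(awk '/^VmSwap:/ {print $2}' "$status")
        printf '%s %s %s kB\n' "$vmid" "$pid" "${swap:-0}"
    done | sort -k3,3nr
}
```

Called as `swap_by_vm` on the host, each output line is `VMID PID swap kB`, so a runaway guest such as the 6GB one can be identified by its VMID directly.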

And now my load seems more reasonable for basically no users (so maybe that was a big part of it?).

Still, I am concerned that I have chosen the wrong server hardware or Proxmox configuration for this. I was planning to move another 17 VMs or so to this (to clear out the original ESXi) and though only 3 of them see real traffic, almost all of them are production for someone (even if their use is low).

Can anyone recommend different hardware going forward (something that's working better for them)? Or are there Proxmox configuration issues that I can investigate? As it stands, I can only migrate anything in the middle of the night, and I am now scared to take a snapshot, much less attempt to restore a backup, during business hours. That was a much bigger problem with ZFS before, but disrupting desktop users is immediate trouble for everyone. And if I am disrupting desktops, I am presumably also causing server trouble that I won't hear about right away but that will eventually surface as "it's been slower the last few months".

Thanks.

RJD
